Your organization has a rulebook for managing its data assets, but does it have one for managing the decisions those assets produce?

This is the central question in the AI governance vs data governance discussion. While the two disciplines are closely related, they are not interchangeable.

Data governance focuses on the quality, security, and lifecycle of your information. AI governance extends that oversight to the algorithms and models themselves, addressing complex issues like fairness, transparency, and accountability.

Treating them as the same function creates dangerous blind spots. This guide will clarify their distinct roles and show you how to integrate them into a unified strategy for end-to-end risk management.

Key Takeaways

- Develop Data Governance and AI Governance in Tandem: While data is foundational to AI, both governance programs should be developed in parallel. As AI adoption increases, it introduces new data types and risks, which in turn shape data governance priorities. Likewise, evolving data governance requirements must be incorporated into AI governance to ensure consistent oversight.

- Understand Their Distinct Roles to Manage Risk: Data governance manages the raw materials—your data assets. AI governance oversees the finished product—the models’ ethical use, fairness, and performance. Differentiating their functions is critical for assigning clear ownership and addressing specific risks.

- Build a Unified, Automated Framework: Move from policy to practice by creating a single operational playbook for both data and AI. This requires clear roles, cross-functional collaboration, and using technology to automate essential tasks like risk monitoring and compliance checks.

What Is AI Governance?

Let’s start with a clear definition. AI governance is the comprehensive structure your organization puts in place to direct and control the development, deployment, and ongoing management of artificial intelligence.

Think of it as the operational rulebook that guides every AI initiative, from internal tools to complex vendor models. It’s not about slowing things down; it’s about creating the guardrails that allow you to accelerate AI adoption with confidence.

A solid governance plan provides the clarity and control needed to manage risks, comply with regulations, and build trust with stakeholders, setting a clear path for responsible and effective AI use across your enterprise.

Define the Core Components

At its heart, an AI governance framework is built on a few key pillars. It establishes the formal policies that dictate acceptable AI use, the practices for assessing and mitigating risks, and the processes for maintaining compliance with legal and regulatory standards.

This includes creating clear lines of accountability – who owns the risk for a specific AI model? It also demands transparency in how AI systems make decisions, especially in high-stakes environments like finance or human resources. These components work together to create a system that is both resilient and ready for scale, giving your teams the structure they need to operate effectively.

Address the Ethical Imperatives

Beyond the operational rules, AI governance directly confronts the ethical questions that come with this technology. This isn’t a box-ticking exercise; it’s about proactively embedding risk management, accountability, and transparency into the entire AI lifecycle, from initial research to final deployment.

For any organization, but especially those in regulated fields, building a responsible AI framework is fundamental. It’s the mechanism you use to actively prevent biases from influencing hiring decisions or financial assessments. By addressing these ethical imperatives head-on, you protect your customers, your employees, and your brand’s reputation from the significant risks of irresponsible AI.

Learn more about what an AI governance platform can offer you: https://fairnow.ai/platform/

What Is Data Governance?

Before we can effectively compare AI governance and data governance, it’s critical to have a solid understanding of the latter.

Think of data governance as the comprehensive rulebook for your organization’s entire data landscape. It’s a foundational framework of processes, policies, and standards that dictates how all data – not just data used for AI – is collected, stored, managed, and used.

The primary objective is to create a single source of truth, ensuring your data is accurate, consistent, and secure across the board. This framework isn’t just about control; it’s about creating value.

When executed properly, a strong data governance program enables your teams to trust the data they use for everything from routine reporting to strategic decision-making. It provides the stable ground upon which more specialized initiatives, including AI governance, can be successfully built. Without it, you’re operating on a shaky foundation, where data quality is questionable and compliance risks are high.

The Foundational Principles of Data Governance

At its core, data governance is about establishing clear ownership and accountability for your data assets. It answers critical questions: Who has permission to access certain data? How is data quality maintained? What are the procedures for data storage and disposal?

The principles of data governance guide how your company handles its information lifecycle, from initial collection to final archiving. This ensures that the data feeding your analytics and AI models is both accurate and reliable. Good data governance is a prerequisite for good AI because even the most sophisticated algorithm will produce flawed results if it’s trained on inconsistent or inaccurate data.

Uphold Data Quality and Security

A key function of data governance is to protect sensitive information and ensure your organization complies with legal and regulatory requirements. This involves implementing robust security measures to prevent data breaches and safeguard user privacy, aligning with regulations like GDPR and CCPA.

By setting clear standards for data quality and security, you create an environment where data is not only protected but also consistently reliable and fit for its intended purpose. This focus on compliance and quality ensures that data is a trustworthy asset that can be used confidently to drive business operations and power your AI systems.

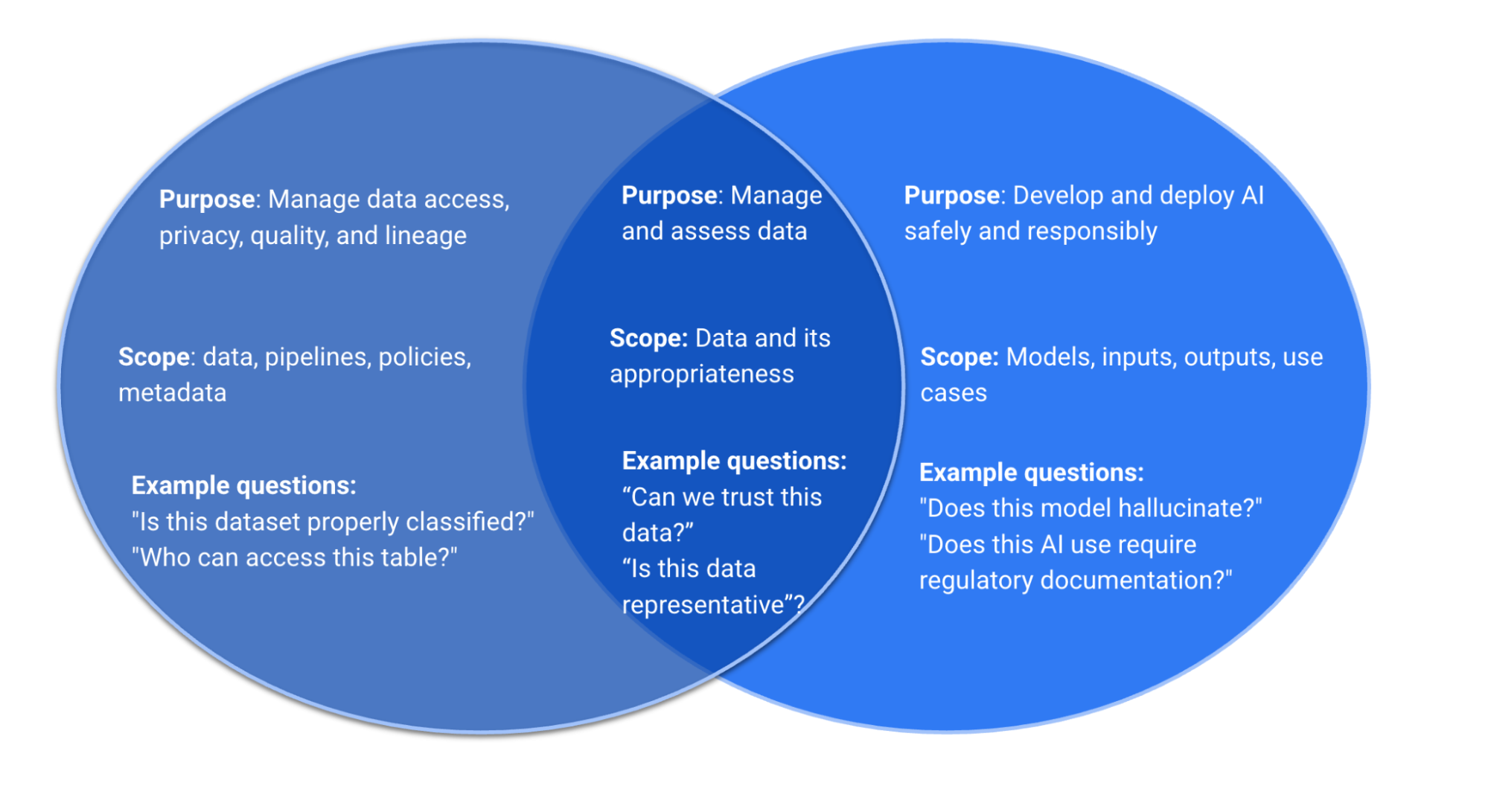

AI vs. Data Governance: The Key Distinctions

While AI and data governance are closely related, they aren’t interchangeable.

Data governance is the bedrock of responsible AI – but AI governance builds the full architecture of accountability, layering in model oversight, risk management, and ethical controls. Data governance manages the raw materials – your data – making sure it’s solid and reliable.

AI governance, on the other hand, oversees how those materials are used to construct intelligent systems, making sure the final product is safe, fair, and effective.

Understanding the specific roles each plays is the first step toward building a comprehensive strategy that allows you to scale AI with confidence. Distinguishing between them helps you assign the right resources and create policies that address the unique challenges of each domain.

Compare Their Focus and Scope

At its core, data governance is about control and quality over your organization’s data assets. It establishes the rules for how you collect, store, protect, transfer, and use data, making sure it remains accurate, consistent, and secure throughout its lifecycle.

It answers questions like: Where did this data come from? Who has access to it? Is it fit for use? Strong data governance is the bedrock of any data-driven operation.

AI governance complements data governance by adding oversight for models and automated decision-making. It builds on data governance to include the policies and practices that guide the responsible use of artificial intelligence. Its focus shifts from the data itself to the models and systems that use that data to make predictions or decisions.

An AI governance framework addresses ethical compliance, algorithmic fairness, model transparency, and accountability—all critical for managing the unique risks AI introduces.

To dive deeper into how AI governance differs from related domains, read AI Governance, Explained.

Contrast the Regulatory Landscapes

The rules governing data are relatively mature and well-defined. Regulations like GDPR and CCPA have set clear standards for data privacy and protection, focusing on how personal information is handled.

Organizations have had years to adapt to these requirements, building compliance functions around consent management and data breach notifications. The primary goal is to protect the data subject’s information from misuse.

In contrast, the regulatory landscape for AI is still taking shape, creating a more fluid and forward-looking compliance challenge. New laws like the EU AI Act and NYC’s Local Law 144 are less about the data itself and more about the impact of AI-driven decisions. They target issues like algorithmic bias, risk management, transparency, and accountability. This requires a proactive stance, as failing to keep up can lead to significant financial and reputational damage.
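To make "algorithmic bias" concrete: the bias audits required under NYC’s Local Law 144 report an impact ratio, which is each group’s selection rate divided by the selection rate of the most-selected group. The sketch below computes it from hypothetical screening counts. The group names and numbers are invented, and the 0.8 review threshold is an assumption borrowed from the "four-fifths" rule of thumb in US employment guidance; Local Law 144 itself does not set a pass/fail cutoff.

```python
def selection_rates(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Map each group to its selection rate: selected / total candidates."""
    return {
        group: selected / total
        for group, (selected, total) in outcomes.items()
        if total > 0  # skip groups with no candidates to avoid dividing by zero
    }


def impact_ratios(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Divide each group's selection rate by the highest group's selection rate."""
    rates = selection_rates(outcomes)
    if not rates:
        raise ValueError("no candidates in any group")
    best = max(rates.values())
    if best == 0:
        raise ValueError("no candidates were selected; impact ratio is undefined")
    return {group: rate / best for group, rate in rates.items()}


def flag_groups(outcomes: dict[str, tuple[int, int]], threshold: float = 0.8) -> list[str]:
    """Return groups whose impact ratio falls below the (assumed) review threshold."""
    return sorted(g for g, ratio in impact_ratios(outcomes).items() if ratio < threshold)


if __name__ == "__main__":
    # Hypothetical results: (candidates advanced, candidates screened) per group.
    results = {"group_a": (48, 100), "group_b": (30, 100), "group_c": (45, 100)}
    for group, ratio in impact_ratios(results).items():
        print(f"{group}: impact ratio {ratio:.2f}")
    print("Flag for review:", flag_groups(results))
```

With these sample numbers, group_b’s ratio is 0.625 (0.30 / 0.48), so it is the only group flagged for human review.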

Differentiate Risk Management Approaches

Risk management in data governance centers on protecting the data asset. Your main concerns are preventing data breaches, correcting inaccuracies, maintaining data availability, and complying with privacy laws.

The risks are tangible and often tied to specific datasets or systems. You can mitigate them with clear access controls, encryption, and data quality checks.
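As a rough illustration of the data quality checks mentioned above, the sketch below runs two common kinds of check, completeness and validity, over a list of records using only the Python standard library. The field names, rules, and ranges are hypothetical examples, not a prescribed standard.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class QualityResult:
    check: str
    passed: int
    failed: int

    @property
    def pass_rate(self) -> float:
        total = self.passed + self.failed
        return self.passed / total if total else 1.0  # an empty dataset trivially passes


def run_checks(
    records: list[dict[str, Any]],
    checks: dict[str, Callable[[dict[str, Any]], bool]],
) -> list[QualityResult]:
    """Apply each named check to every record and count passes and failures."""
    results = []
    for name, check in checks.items():
        passed = sum(1 for record in records if check(record))
        results.append(QualityResult(name, passed, len(records) - passed))
    return results


# Hypothetical rules for an applicant dataset.
CHECKS: dict[str, Callable[[dict[str, Any]], bool]] = {
    # Completeness: the email field must be present and non-empty.
    "email_present": lambda r: bool(r.get("email")),
    # Validity: age must be an integer in a plausible range.
    "age_in_range": lambda r: isinstance(r.get("age"), int) and 18 <= r["age"] <= 100,
}

if __name__ == "__main__":
    sample = [
        {"email": "a@example.com", "age": 34},
        {"email": "", "age": 29},
        {"email": "c@example.com", "age": 250},
    ]
    for result in run_checks(sample, CHECKS):
        print(f"{result.check}: {result.pass_rate:.0%} passed")
```

In practice, a data governance program would set a minimum pass rate per check and route failures to the dataset’s owner.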

AI risk management is typically more dynamic and complex. It includes many of the risks of data governance and adds several new layers.

You have to account for ethical risks, like an HR tool developing a bias against certain candidates. There are also performance risks, such as a model’s accuracy degrading over time, and legal risks tied to the accountability of automated decisions.

Managing these requires a continuous, holistic approach that monitors AI systems for fairness, transparency, and unintended consequences throughout their entire lifecycle.
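One piece of that continuous monitoring, watching for a model’s accuracy degrading over time, can be sketched as follows. The monitor compares rolling accuracy on recently labeled predictions against the accuracy recorded at validation time and signals when the drop exceeds a tolerance. The class name, window size, and tolerance are hypothetical. Real programs tune these per model and usually also track input drift and fairness metrics, which this sketch does not cover.

```python
from __future__ import annotations

from collections import deque


class AccuracyMonitor:
    """Compares rolling accuracy on recent labeled predictions with a validation baseline."""

    def __init__(self, baseline_accuracy: float, window: int = 500, tolerance: float = 0.05):
        self.baseline = baseline_accuracy
        self.window = window
        self.tolerance = tolerance
        # Oldest outcomes fall off automatically once the window is full.
        self.outcomes: deque[bool] = deque(maxlen=window)

    def record(self, prediction, actual) -> None:
        """Log whether a prediction matched the ground truth once that truth is known."""
        self.outcomes.append(prediction == actual)

    def rolling_accuracy(self) -> float | None:
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def degraded(self) -> bool:
        """True once the window is full and accuracy sits more than `tolerance` below baseline."""
        if len(self.outcomes) < self.window:
            return False  # not enough evidence yet
        return self.baseline - self.rolling_accuracy() > self.tolerance
```

Waiting for a full window before alerting trades a little detection speed for fewer false alarms on small samples.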

How AI and Data Governance Work Together

AI governance and data governance aren’t competing priorities; they are deeply interconnected partners. Thinking of them as separate functions is a common misstep.

A strong AI governance framework is only possible when it’s built upon an equally strong data governance foundation. When you align these two disciplines, you create a comprehensive system that manages risk from the moment data is collected to the final output of an AI model. This synergy is what allows your organization to scale AI with confidence and integrity.

Why Data Is the Bedrock of AI

Think of your data as the foundation of a skyscraper. You wouldn’t build a complex structure on unstable ground, and the same logic applies to AI.

Data quality and reliability are fundamental to AI performance. This is why effective AI governance must build upon the principles of data governance.

Without a solid framework for managing data quality, security, and lineage, your AI initiatives are exposed to significant risks from the start. Good data governance isn’t just a preliminary step; it’s the essential, ongoing practice that supports every AI model you deploy.

Align Your Governance Strategies

While data governance and AI governance are connected, they address different parts of the lifecycle. Data governance is focused on the accuracy, consistency, and security of your data assets. AI governance, on the other hand, centers on the ethical, transparent, and compliant use of the AI systems built from that data.

To be effective, these two strategies must be aligned. They need to work in concert to create a seamless chain of accountability. This alignment is critical for using your high-quality, curated data responsibly within AI models, meeting both internal standards and external regulatory requirements.

Identify Shared Goals and Hurdles

The intersection of AI and data governance is where you’ll find shared objectives and challenges. Both disciplines are fundamentally concerned with data quality, security, and privacy.

A primary shared hurdle is managing bias. Since biased data creates biased AI, a weakness in your data governance will directly undermine the fairness and performance of your AI models. This makes it critical to establish continuous monitoring across both frameworks.

By identifying these shared goals, you can create more efficient, integrated processes that address risks holistically, rather than in isolated silos.

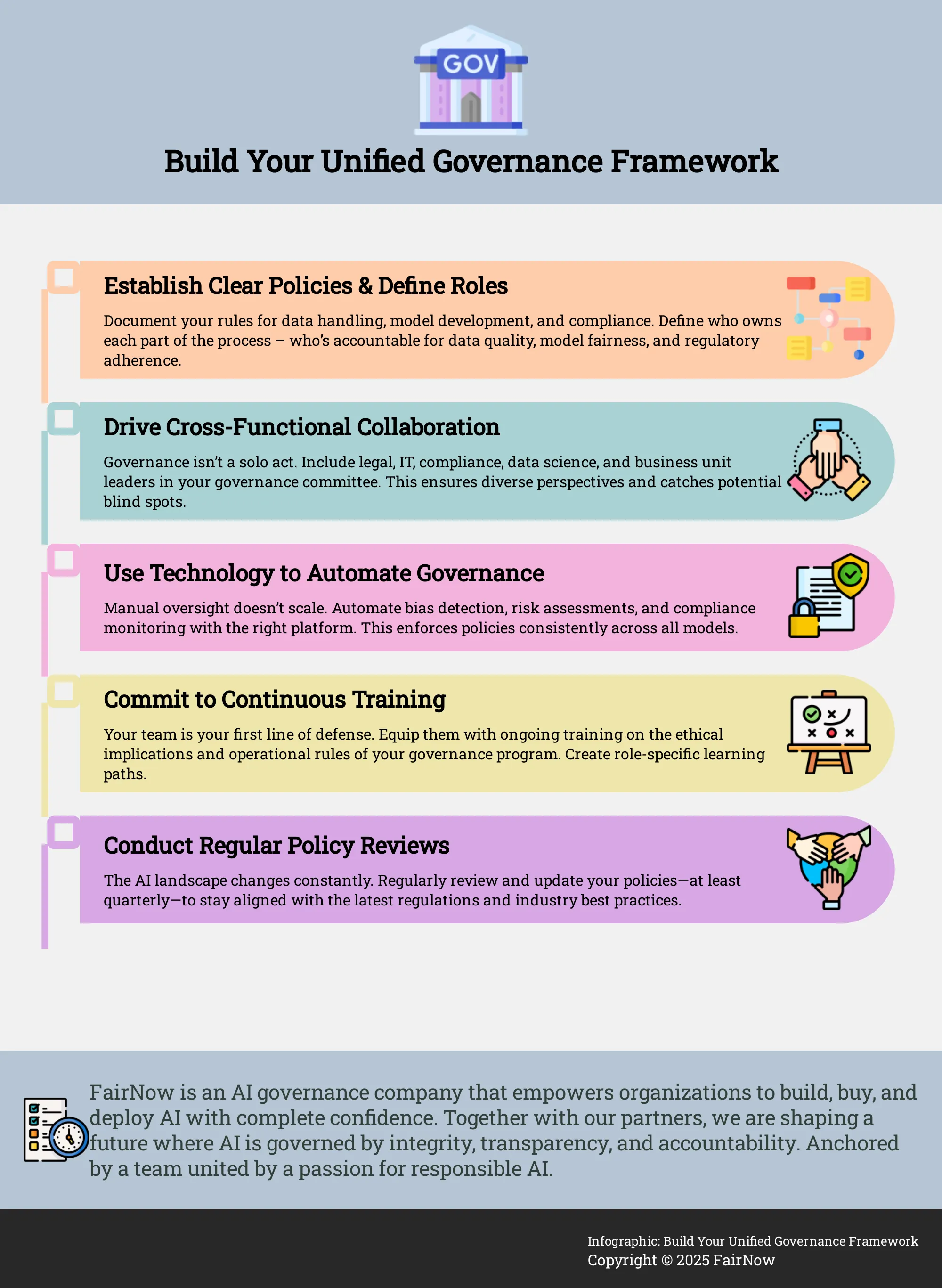

Build Your Unified Governance Framework

Creating a unified governance framework is how you move from theory to practice. It’s the operational blueprint that merges your data and AI oversight into a single, cohesive strategy. Instead of running two separate playbooks, you create one that ensures your data is sound and your AI is responsible from the ground up.

This approach doesn’t just mitigate risk; it builds a solid foundation that allows your organization to scale its AI initiatives with confidence and control. A strong framework provides the structure needed to align your technology with your business goals and ethical standards.

Establish Clear Policies and Define Roles

Your first step is to document everything. A successful governance framework is grounded in clear, well-defined policies that minimize ambiguity and support consistent interpretation and implementation.

This means defining the rules for data handling, model development, ethical reviews, and compliance checks. Think of this as creating the official rulebook for how your organization interacts with data and AI.

Just as important is assigning ownership. Vague responsibility is the same as no responsibility. You need to clearly define roles, outlining who is accountable for data quality, who validates model outputs for risks, and who oversees regulatory adherence.

An AI governance framework establishes these lines of authority, ensuring every task has a designated owner who is answerable for its execution and outcomes.
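A hypothetical sketch of how a model inventory might record ownership and flag gaps automatically. The three role names mirror the responsibilities listed above (data quality, output validation, regulatory adherence); the record structure, role keys, and owner identifiers are illustrative assumptions, not a standard schema.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical role names mirroring the responsibilities described above.
REQUIRED_ROLES = ("data_quality", "output_validation", "regulatory_adherence")


@dataclass
class ModelRecord:
    name: str
    owners: dict[str, str] = field(default_factory=dict)  # role -> accountable owner


def missing_owners(inventory: list[ModelRecord]) -> dict[str, list[str]]:
    """Return, for each model, the required roles that have no named owner."""
    gaps: dict[str, list[str]] = {}
    for model in inventory:
        missing = [role for role in REQUIRED_ROLES if not model.owners.get(role)]
        if missing:
            gaps[model.name] = missing
    return gaps


if __name__ == "__main__":
    inventory = [
        ModelRecord("resume_screener", {
            "data_quality": "hr-data-lead",
            "output_validation": "ml-review-lead",
            "regulatory_adherence": "compliance-lead",
        }),
        ModelRecord("churn_model", {"data_quality": "analytics-lead"}),
    ]
    print(missing_owners(inventory))
```

Running a check like this whenever a model is registered turns "every task has a designated owner" from a policy statement into something you can verify.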

Drive Cross-Functional Collaboration

Governance can’t operate in a silo. Because AI and data impact every corner of your organization, you need a variety of perspectives to manage them effectively.

Your governance committee should include leaders from legal, compliance, IT, data science, and the specific business units using the AI tools. This creates a collaborative network where different teams can share insights and identify potential blind spots.

For example, your legal team can assess regulatory risks while your data scientists evaluate model performance and your HR team considers the impact on employees. This cross-functional collaboration ensures that your governance strategy is comprehensive, practical, and aligned with the realities of your business operations.

When everyone has a seat at the table, you build a more resilient and responsible AI ecosystem.

Use Technology to Automate Governance

As your organization adopts more AI tools, manual oversight quickly becomes impractical. You can’t rely on spreadsheets and email chains to manage the complex risks associated with dozens or even hundreds of models.

This is where technology becomes essential for turning your policies into automated practice. The right platform can help you operationalize your framework by automating critical tasks like bias detection, risk assessments, and compliance monitoring.

AI governance tools provide a centralized system to enforce your policies consistently across all internal and third-party models. By using technology to automate governance, you can effectively track your entire AI inventory, respond to issues faster, and maintain a continuous state of compliance without slowing down your teams.
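Here is a minimal sketch of one such automated check: scanning an inventory of internal and third-party models and flagging any whose last bias audit is missing or older than policy allows. The 365-day interval, field names, and inventory shape are assumptions for illustration; the right interval depends on your own policies and the laws that apply to each use case.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class InventoryEntry:
    model: str
    source: str  # hypothetical values: "internal" or "vendor"
    last_bias_audit: date | None  # None if the model has never been audited


def overdue_audits(entries: list[InventoryEntry], today: date,
                   max_age_days: int = 365) -> list[str]:
    """Return models with no bias audit or one older than the allowed age."""
    cutoff = today - timedelta(days=max_age_days)
    return [
        e.model
        for e in entries
        if e.last_bias_audit is None or e.last_bias_audit < cutoff
    ]


if __name__ == "__main__":
    inventory = [
        InventoryEntry("resume_screener", "vendor", date(2023, 3, 1)),
        InventoryEntry("churn_model", "internal", date(2024, 1, 15)),
        InventoryEntry("chat_assistant", "vendor", None),
    ]
    print(overdue_audits(inventory, today=date(2024, 6, 1)))
```

Because vendor and internal models share one inventory, the same rule applies to both, which is the consistency the paragraph above describes.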

Overcome Common Integration Challenges

Bringing data and AI governance together is more than a technical exercise; it’s a strategic imperative that comes with its own set of hurdles.

As you work to create a unified framework, you’ll face challenges that test your policies, processes, and people. Addressing these issues head-on is the key to building a resilient governance structure that supports responsible AI adoption across your enterprise.

The most common obstacles include protecting data, managing risk without hindering progress, and staying current with changing laws.

Confront Data Privacy and Bias Risks

Your AI systems are a direct reflection of the data they’re trained on. If that data is compromised by privacy gaps or inherent biases, your AI models will perpetuate and even amplify those flaws, exposing your organization to significant ethical and financial risks.

The most effective way to manage this is by implementing robust AI governance tools and frameworks from the start. Strong governance enforces automated data policies, ensuring that AI systems operate with high-quality, validated datasets. This approach allows you to uphold ethical AI principles and build systems that are fair, transparent, and trustworthy by design, rather than trying to correct for bias after deployment.

Balance AI Adoption with Risk Management

Many teams fear that governance will create bureaucratic red tape that slows down development. In reality, the opposite is true.

A well-defined governance structure acts as a guardrail, not a gate, giving your teams the confidence to build and deploy AI solutions securely. An effective AI governance framework establishes clear policies and practices that guide the responsible development and use of artificial intelligence.

By embedding risk management, data transparency, and accountability into your workflows, you create a secure environment for your teams to operate within. This clarity removes ambiguity and empowers them to move forward with their initiatives, knowing they are aligned with organizational standards and regulatory requirements.

Adapt to Evolving Regulations

The legal landscape for artificial intelligence is in constant motion. New laws and reporting requirements are emerging globally, and what is compliant today may not be tomorrow.

Attempting to manage this shifting environment with manual processes and static checklists is inefficient and risky. Organizations that fail to implement strong, adaptable AI governance frameworks risk falling behind legally and competitively, facing potential fines and reputational harm.

The solution is a dynamic governance model that can be updated quickly as regulations change. Automating compliance monitoring and risk tracking allows you to stay ahead of new requirements and demonstrate due diligence to regulators with confidence.

Best Practices for Unified Governance

Commit to Continuous Training

A unified governance framework is a powerful tool, but its success depends entirely on the people who use it. Your teams are the first line of defense in managing AI and data risks, which means they need to be equipped with the right knowledge.

This goes beyond a one-time onboarding session. As AI technology and its applications grow, so do the complexities. Ongoing training is essential for helping your staff understand the ethical implications and operational rules of your governance program.

Create role-specific learning paths. A data scientist needs deep technical training on bias detection, while a marketing manager needs to understand the rules for using AI in customer-facing campaigns. By committing to continuous education, you empower every employee to become a proactive steward of responsible AI.

Conduct Regular Policy Reviews

The world of AI and data regulation is constantly shifting. A policy that was compliant six months ago might expose you to risk today. Adopting a “set it and forget it” mindset toward your governance policies is not an option.

To maintain control and protect your organization, treat your framework as a living document that requires consistent attention and updates. Schedule policy reviews at least semi-annually, and ideally quarterly, to ensure your AI governance frameworks align with the latest legal requirements and industry standards. These reviews should be a cross-functional effort, bringing together leaders from legal, compliance, IT, and key business units.

This proactive approach allows you to address gaps before they become critical problems and keeps your organization prepared for what’s next.

Measure Your Governance Effectiveness

You can’t effectively manage what you don’t measure. A unified governance framework needs clear metrics to prove its value and guide improvements. Simply having policies in place isn’t enough; you need to know if they are working.

This means moving beyond simple compliance checklists and toward a quantitative understanding of your risk posture and operational efficiency. Define key performance indicators (KPIs) that reflect your governance goals. These might include the percentage of AI models audited for fairness, the time it takes to remediate identified risks, or the number of data access requests fulfilled within policy.
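A small sketch of how two of those KPIs might be computed from governance records: the percentage of models with a completed fairness audit, and the average number of days taken to remediate closed risk findings. The data shapes and function names are hypothetical; open findings are excluded from the average rather than guessed at.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date
from statistics import mean


@dataclass
class Finding:
    opened: date
    closed: date | None  # None while remediation is still in progress


def pct_models_audited(audited: dict[str, bool]) -> float:
    """Share of inventoried models (model name -> audited?) with a completed fairness audit."""
    if not audited:
        return 0.0
    return 100 * sum(audited.values()) / len(audited)


def mean_days_to_remediate(findings: list[Finding]) -> float | None:
    """Average days from opening to closing, over closed findings only."""
    durations = [(f.closed - f.opened).days for f in findings if f.closed is not None]
    return mean(durations) if durations else None


if __name__ == "__main__":
    audits = {"resume_screener": True, "churn_model": False, "chat_assistant": True}
    findings = [
        Finding(date(2024, 1, 1), date(2024, 1, 11)),
        Finding(date(2024, 2, 1), date(2024, 2, 21)),
        Finding(date(2024, 3, 1), None),
    ]
    print(f"Models audited: {pct_models_audited(audits):.0f}%")
    print(f"Mean days to remediate: {mean_days_to_remediate(findings)}")
```

Tracking the count of still-open findings alongside the average keeps slow, unresolved issues from disappearing out of the metric.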

Regularly tracking these metrics provides concrete evidence of your program’s effectiveness and highlights areas needing attention. This data-driven approach to governance gives you the confidence to scale AI adoption responsibly while monitoring for biases and other potential harms.

AI Governance vs Data Governance FAQs

We already have a strong data governance program. Isn't that enough to manage our AI risks?

Think of it this way: your data governance program makes sure you have high-quality, secure ingredients for a recipe. That’s a critical first step, but it doesn’t guarantee the final dish will be safe or effective. AI governance oversees the cooking process itself—how the models are built, tested, and used. It addresses risks that data governance doesn’t, like algorithmic bias, a model’s performance degrading over time, or the need for transparency in automated decisions. A solid data foundation is essential, but it’s not a substitute for governing the AI systems you build on top of it.

Is it ever too early to establish an AI governance framework?

No. In fact, building your AI governance framework as you begin adopting AI is far more effective than trying to bolt one on after dozens of models are already in use. You don’t need a massive, complex system on day one. Start by defining clear policies for a single use case. Establish who is accountable for the model’s performance and fairness. By integrating governance from the start, you create a culture of responsibility and build a scalable structure that grows with your AI initiatives, preventing costly and complex clean-up projects down the road.

What's the most critical first step to building a unified governance framework?

Your first and most important step is to establish clear ownership. Before you write a single policy, you must define who is accountable for what. This means creating a cross-functional committee with leaders from legal, IT, compliance, and the business units using the AI. Then, for each AI system, assign specific individuals who are responsible for its entire lifecycle, from data inputs to performance monitoring. Without clear lines of accountability, even the best-written policies will fail to be enforced.

How can we manage AI governance without slowing our teams down?

This is a common concern, but it’s based on a misunderstanding of what good governance does. A well-designed framework acts as a set of guardrails, not a series of gates. It provides your teams with clear rules of the road, which removes ambiguity and empowers them to act with confidence and speed. When your data scientists and developers know exactly what the standards are for fairness, transparency, and security, they don’t have to second-guess their work. Automating tasks like risk assessments and compliance checks further accelerates the process, making governance a seamless part of the workflow, not a bottleneck.

Our AI models are from third-party vendors. How does a unified governance framework apply to them?

Your governance framework is even more critical when dealing with third-party models because you have less direct control over how they were built. The framework provides the structure for managing these external systems. It dictates your vendor vetting process, defines the contractual requirements for model transparency and audit rights, and establishes the process for continuously monitoring the vendor’s model for performance and bias. Your internal framework is what allows you to hold external partners accountable and manage the risks they introduce into your organization.