What Is the EU AI Act? A Detailed Guide to EU AI Act Compliance

High-Level Summary

What are the core concepts of the EU AI Act?

1) The World’s First Comprehensive AI Law

- The EU AI Act establishes a detailed legal framework to ensure that AI systems deployed within the EU are safe, ethically sound, and respect fundamental rights such as privacy, non-discrimination, and individual autonomy.

- The framework is designed to be comprehensive, addressing not only the technical aspects but also the ethical and societal implications of AI.

- The EU AI Act is the world’s first comprehensive AI law, setting stringent standards for AI systems.

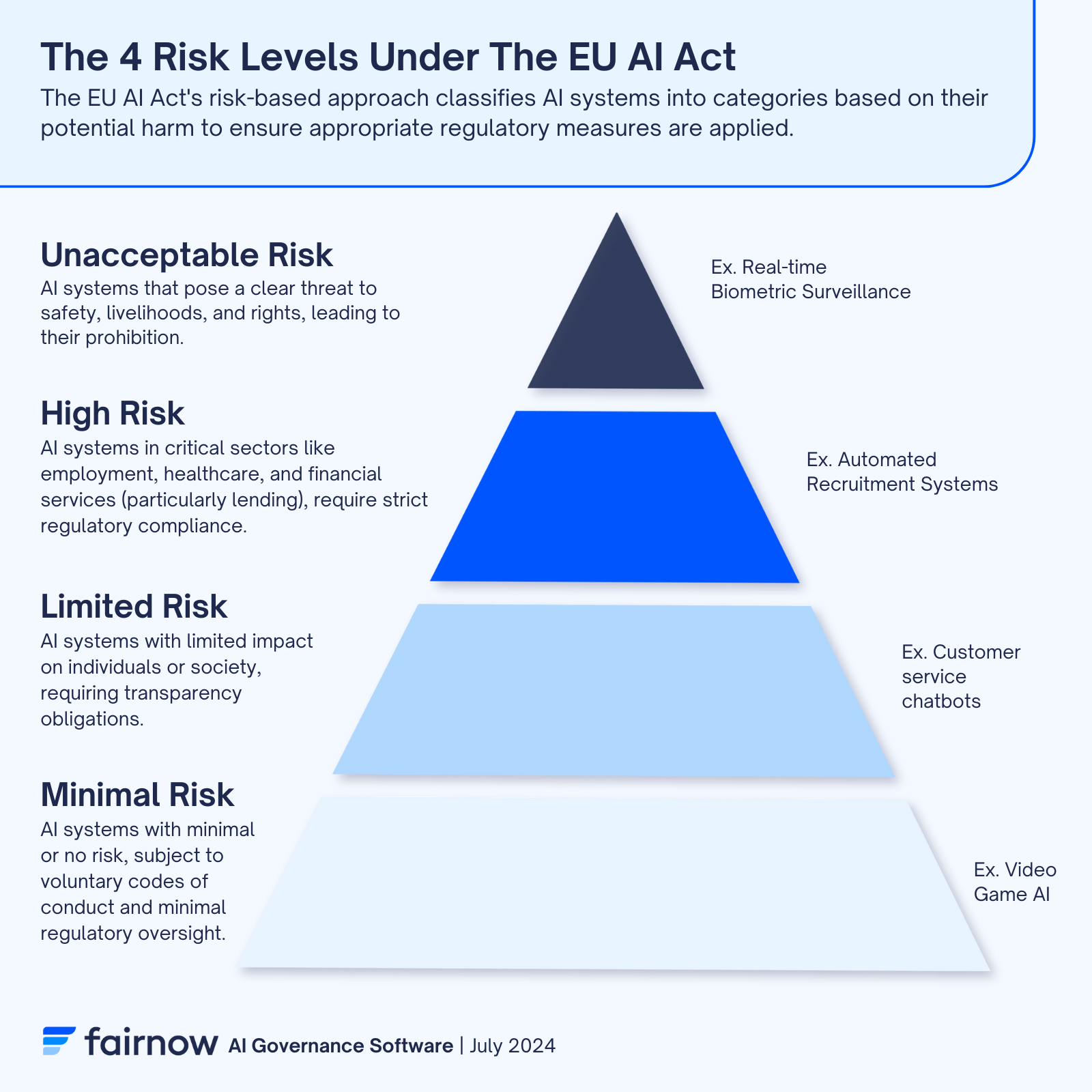

2) Creation Of A Risk-Based Approach

- The Act introduces a risk-based categorization of AI systems to regulate them based on their potential impact on individuals and society.

- This approach ensures that high-risk AI applications are subject to rigorous scrutiny, while lower-risk applications can thrive with minimal regulatory burden, fostering innovation and trust in AI.

- The AI Act risk categories are: Unacceptable Risk (Prohibited), High Risk, Limited Risk, and Minimal Risk.
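For teams that track AI systems in software, the four tiers can be modeled as a simple enumeration. The sketch below is a hypothetical illustration: the `RiskTier`, `TREATMENT`, and `is_permitted` names are our own, and the one-line summaries simplify the Act rather than quote it.

```python
from enum import Enum


class RiskTier(Enum):
    """The four EU AI Act risk tiers (names are illustrative, not official identifiers)."""

    UNACCEPTABLE = "unacceptable"  # prohibited practices
    HIGH = "high"                  # strict obligations before and after market entry
    LIMITED = "limited"            # transparency obligations
    MINIMAL = "minimal"            # no additional mandatory obligations (AI literacy still applies)


# Simplified, hypothetical summary of how each tier is treated.
TREATMENT = {
    RiskTier.UNACCEPTABLE: "Banned: may not be placed on the market, put into service, or used",
    RiskTier.HIGH: "Risk management, documentation, human oversight, conformity assessment, registration",
    RiskTier.LIMITED: "Transparency: people must know they are interacting with AI",
    RiskTier.MINIMAL: "Voluntary codes of conduct encouraged",
}


def is_permitted(tier: RiskTier) -> bool:
    """Only the unacceptable tier is prohibited outright; the others are regulated to varying degrees."""
    return tier is not RiskTier.UNACCEPTABLE


if __name__ == "__main__":
    for tier in RiskTier:
        print(f"{tier.value:>12}: permitted={is_permitted(tier)} | {TREATMENT[tier]}")
```

Keeping the tier as an enum rather than a free-text string makes typos in classification fail loudly instead of silently creating a fifth "tier".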

3) Strict And Significant Penalties For Non-Compliance

- The high financial penalties underscore the importance of compliance.

- For the most serious breaches, such as using prohibited AI practices, the maximum penalty is the greater of €35 million or 7% of the company’s total worldwide annual turnover.

- For less severe violations, such as failing to meet transparency or documentation requirements for high-risk AI systems, fines can reach €15 million or 3% of the total worldwide annual turnover, whichever is higher.

4) Creation Of Distinct Stakeholder Groups With Distinct Responsibilities

- Providers

- Organizations that develop, supply, or market AI systems or models under their own brand.

- Responsible for ensuring that AI systems comply with the EU AI Act’s regulations, including safety, transparency, and documentation requirements.

- Required to ensure AI literacy among their own staff and others operating AI systems on their behalf.

- Deployers

- Entities that use AI systems under their authority in a professional capacity within the EU (purely personal, non-professional use is excluded).

- Must adhere to transparency obligations, manage AI-related risks, and ensure that users are informed when interacting with AI systems.

- Required to ensure AI literacy among their own staff and others operating AI systems on their behalf.

- Importers

- Organizations established in the EU that bring AI systems into the EU market from non-EU providers.

- Ensure that imported AI systems comply with the EU AI Act by verifying that the provider has met its obligations, maintaining conformity documentation, and ensuring proper labeling and instructions.

- Distributors

- Entities in the supply chain that make AI systems available on the EU market, excluding providers and importers.

- Verify the conformity of AI systems with EU regulations, maintain necessary documentation, keep records of AI systems, and report non-compliance or risks to national authorities.
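The four role definitions above can be approximated with a rough decision function. This is a hypothetical, heavily simplified sketch: the `Entity` fields and the `roles` function are our own illustration, not terms from the Act, and real role determination needs legal review. It returns a set because one organization can hold several roles at once, for example building an AI tool in-house and then using it.

```python
from dataclasses import dataclass


@dataclass
class Entity:
    """Hypothetical attributes used to approximate the four roles described above."""

    markets_under_own_brand: bool       # develops or supplies the system under its own name or trademark
    uses_system_professionally: bool    # uses the system under its authority, not for purely personal use
    established_in_eu: bool
    system_from_non_eu_provider: bool   # the system bears the name or trademark of a non-EU provider
    makes_available_on_eu_market: bool  # supplies the system to others in the EU


def roles(e: Entity) -> set[str]:
    """Return every role the entity appears to hold; one organization can hold several."""
    result: set[str] = set()
    if e.markets_under_own_brand:
        result.add("provider")
    if e.uses_system_professionally:
        result.add("deployer")
    if (e.established_in_eu and e.system_from_non_eu_provider
            and e.makes_available_on_eu_market and not e.markets_under_own_brand):
        result.add("importer")
    # Distributors are supply-chain actors other than providers and importers.
    if e.makes_available_on_eu_market and not (result & {"provider", "importer"}):
        result.add("distributor")
    return result


if __name__ == "__main__":
    eu_reseller_of_us_tool = Entity(False, False, True, True, True)
    print(roles(eu_reseller_of_us_tool))  # {'importer'}
```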

EU AI Act Scope

Who does the EU AI Act apply to?

Under the EU AI Act, compliance is required for the following entities:

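- Providers: Entities that develop AI systems or general-purpose AI models (or have them developed) and place them on the EU market or put them into service under their own name or trademark.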

- Deployers: Entities using AI systems in the EU in a professional capacity, particularly those using high-risk AI systems.

- Importers: Entities that import AI systems into the EU market.

- Distributors: Entities that distribute or sell AI systems within the EU.

- Notified Bodies (Third-Party Evaluators): Organizations that conduct conformity assessments of certain high-risk AI systems.

- Non-EU Providers and Deployers: Providers established outside the EU that place AI systems on the EU market, and providers or deployers outside the EU whose AI systems produce output that is used in the EU.

These stakeholders must adhere to the regulatory requirements based on the risk level of the AI systems they handle.

Compliance Requirements

What are the compliance requirements of the EU AI Act?

The EU AI Act sets forth specific compliance requirements for AI systems based on their risk classification. Here’s a summary of the key compliance requirements for each risk category:

All Risk Categories

Starting on February 2, 2025, providers and deployers of AI systems (no matter the risk level) must ensure that their staff or others using an AI system on their behalf have a sufficient level of AI literacy given their roles, technical knowledge, experience, education, training, the context in which the AI systems are used, and the subjects of that use. Although the exact nature of this requirement will vary widely from organization to organization, the European AI Office has published the “Living Repository of AI Literacy Practices” with examples from over a dozen organizations to “encourage learning and exchange.”

Unacceptable Risk

Starting on February 2, 2025, AI systems classified under this category are banned and cannot be placed on the market, put into service, or used within the EU. Examples of prohibited practices include social scoring; manipulative techniques that exploit vulnerabilities such as age or disability; biometric categorization that infers sensitive characteristics such as race or political opinions; and real-time remote biometric identification in publicly accessible spaces for law enforcement purposes (subject to narrow exceptions).

High Risk

- Risk Management: Implement a risk management system to identify, analyze, and mitigate risks throughout the AI system’s lifecycle.

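- Data and Data Governance: Ensure that training, validation, and testing datasets are relevant, sufficiently representative, and as complete and free of errors as possible, and that they are examined for possible biases, with measures in place to detect, prevent, and mitigate them.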

- Technical Documentation: Maintain detailed technical documentation providing information on the AI system, its design, development, and functioning.

- Transparency and Information Provision: Provide deployers with clear and understandable instructions for use covering the AI system’s capabilities, limitations, and intended purpose.

- Human Oversight: Implement appropriate measures to enable effective human oversight to prevent or minimize risks.

- Accuracy, Robustness, and Cybersecurity: Ensure high levels of accuracy, robustness, and cybersecurity for the AI system.

- Conformity Assessment: Conduct conformity assessments before placing the AI system on the market, involving third-party evaluations for some systems.

- Registration: Register high-risk AI systems in the EU’s dedicated database before placing them on the market or putting them into service.
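A compliance team might track the eight high-risk requirements above as a checklist. The sketch below assumes our own shorthand keys for each requirement (they are not terms from the Act), and `ready_for_market` is a hypothetical gate that simply checks whether every item has been marked complete.

```python
# Shorthand keys for the eight high-risk requirements listed above (our own naming).
HIGH_RISK_REQUIREMENTS = (
    "risk_management",
    "data_governance",
    "technical_documentation",
    "transparency_information",
    "human_oversight",
    "accuracy_robustness_cybersecurity",
    "conformity_assessment",
    "registration",
)


def outstanding(completed: set[str]) -> list[str]:
    """Return the requirements not yet marked complete, preserving the checklist order."""
    unknown = completed - set(HIGH_RISK_REQUIREMENTS)
    if unknown:
        raise ValueError(f"Unrecognized requirement keys: {sorted(unknown)}")
    return [req for req in HIGH_RISK_REQUIREMENTS if req not in completed]


def ready_for_market(completed: set[str]) -> bool:
    """Hypothetical gate: every item, including conformity assessment and registration, is done."""
    return not outstanding(completed)


if __name__ == "__main__":
    done = {"risk_management", "technical_documentation", "human_oversight"}
    print(outstanding(done))
    print(ready_for_market(done))  # False
```

Rejecting unknown keys catches misspelled checklist entries that would otherwise make a requirement look permanently open.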

Limited Risk

Transparency Obligations: Ensure that users know they are interacting with an AI system (e.g., chatbots must disclose they are AI).
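As an illustration of the chatbot example, the sketch below wraps a reply function so that the first reply in each session tells the user they are talking to an AI. The disclosure wording and the `DisclosingChatSession` class are hypothetical; the Act does not prescribe this code or this exact text.

```python
from typing import Callable

# Hypothetical disclosure text; adapt wording and language to your product.
AI_DISCLOSURE = "You are chatting with an AI assistant, not a human."


class DisclosingChatSession:
    """Prefixes the first reply of a chat session with an AI disclosure."""

    def __init__(self, generate_reply: Callable[[str], str]) -> None:
        self._generate_reply = generate_reply  # any function mapping a user message to reply text
        self._disclosed = False

    def reply(self, user_message: str) -> str:
        text = self._generate_reply(user_message)
        if not self._disclosed:
            self._disclosed = True
            return f"{AI_DISCLOSURE}\n\n{text}"
        return text


if __name__ == "__main__":
    session = DisclosingChatSession(lambda msg: f"Echo: {msg}")
    print(session.reply("Hello"))   # disclosure, blank line, then "Echo: Hello"
    print(session.reply("Thanks"))  # "Echo: Thanks"
```

Wrapping the reply function keeps the disclosure logic in one place, so it cannot be forgotten when the underlying model or prompt changes.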

Minimal Risk

Voluntary Codes of Conduct: Entities are encouraged to follow voluntary codes of conduct that promote best practices; apart from the AI literacy requirement that applies to all AI systems, there are no mandatory requirements.

These requirements aim to ensure that AI systems deployed in the EU are safe, transparent, and respect fundamental rights.

Penalties and Fines

What are the non-compliance penalties for the EU AI Act?

The EU AI Act outlines significant penalties for non-compliance to ensure adherence to its regulations.

The penalties are designed to be stringent to deter violations and ensure accountability. Here are the key penalties:

1. For placing on the market, putting into service, or using AI systems that pose an unacceptable risk:

Fines up to €35 million or 7% of the total worldwide annual turnover of the preceding financial year, whichever is higher.

2. For non-compliance with other obligations under the Act, including the requirements for high-risk AI systems (such as data quality, technical documentation, human oversight, and robustness) and the transparency obligations for limited-risk AI systems:

Fines up to €15 million or 3% of the total worldwide annual turnover of the preceding financial year, whichever is higher.

3. For providing incorrect, incomplete, or misleading information to notified bodies and national competent authorities:

Fines up to €7.5 million or 1% of the total worldwide annual turnover of the preceding financial year, whichever is higher.

These penalties aim to ensure that all entities involved in the development, deployment, and use of AI systems within the EU adhere to the strict requirements and standards set forth by the AI Act, promoting safe and trustworthy AI.
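The “whichever is higher” rule means the maximum fine scales with company size. The sketch below computes that upper bound for each of the three penalty levels listed above. The tier names are our own; turnover means total worldwide annual turnover for the preceding financial year; and the `is_sme` switch reflects the Act’s provision (Article 99(6)) that for SMEs and start-ups the lower of the two amounts applies. Authorities set actual fines case by case, so this only gives a ceiling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FineTier:
    fixed_cap_eur: int     # fixed ceiling in euros
    turnover_percent: int  # ceiling as a percentage of worldwide annual turnover


# Tier names are illustrative; amounts follow the three penalty levels summarized above.
PROHIBITED_PRACTICES = FineTier(35_000_000, 7)
OTHER_OBLIGATIONS = FineTier(15_000_000, 3)
MISLEADING_INFORMATION = FineTier(7_500_000, 1)


def max_fine(tier: FineTier, worldwide_turnover_eur: int, is_sme: bool = False) -> float:
    """Upper bound on a fine: the higher of the two ceilings, or the lower one for SMEs and start-ups."""
    turnover_based = worldwide_turnover_eur * tier.turnover_percent / 100
    if is_sme:
        return min(tier.fixed_cap_eur, turnover_based)
    return max(tier.fixed_cap_eur, turnover_based)


if __name__ == "__main__":
    # A company with EUR 1 billion turnover: 7% (EUR 70 million) exceeds the EUR 35 million fixed amount.
    print(max_fine(PROHIBITED_PRACTICES, 1_000_000_000))  # 70000000.0
```

Storing the percentage as an integer and dividing by 100 keeps the arithmetic exact for round turnover figures.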

Timeline for Enforcement

When does the EU AI Act go into effect?

The Act entered into force on August 1st, 2024, and its provisions apply in stages.

“General applicability” of the EU AI Act begins on August 2nd, 2026, although certain provisions apply as early as February 2nd, 2025.

- February 2nd, 2025 (after six months): The ban on prohibited, or “unacceptable risk,” AI practices and the requirement that providers and deployers ensure the “AI literacy” of their staff take effect.

- August 2nd, 2025 (after 12 months): Obligations for providers of general-purpose AI (GPAI) models, which include many generative AI models, become applicable.

- August 2nd, 2026 (after 24 months): Most of the remaining rules of the AI Act become applicable, including obligations for the high-risk systems listed in Annex III. This deadline is significant because it covers a wide range of high-risk systems, including, but not limited to:

- HR Technology: Systems such as those used to source, screen, rank, and interview job candidates.

- Financial Services: Systems used to evaluate the creditworthiness of individuals or establish their credit scores (systems used to detect financial fraud are expressly excluded).

- Insurance Underwriting: Systems used for risk assessment and pricing for individuals in life and health insurance.

- Education and Vocational Training: Systems that determine access or admission to educational opportunities, evaluate learning outcomes, or monitor students during tests.

- Many more are outlined in Annex III.

- August 2nd, 2027 (after 36 months): Obligations take effect for high-risk AI systems that are not listed in Annex III but serve as a safety component of a product (or are themselves a product) covered by the EU product-safety legislation listed in Annex I.
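To check which of these milestones already apply on a given date, the schedule can be encoded as a sorted list of dates. The labels below are our own shorthand, and the sketch ignores the finer transitional exceptions in the Act.

```python
from datetime import date
from typing import Optional

# Application dates from the timeline above; labels are our own shorthand.
MILESTONES = [
    (date(2025, 2, 2), "AI literacy and prohibited practices"),
    (date(2025, 8, 2), "General-purpose AI model obligations"),
    (date(2026, 8, 2), "General applicability, incl. Annex III high-risk systems"),
    (date(2027, 8, 2), "High-risk safety components of products"),
]


def applicable_on(day: date) -> list[str]:
    """Return the labels of all milestones in effect on `day`."""
    return [label for start, label in MILESTONES if day >= start]


def next_milestone(day: date) -> Optional[tuple[date, str]]:
    """Return the first milestone that has not yet taken effect, or None if all have."""
    return next(((start, label) for start, label in MILESTONES if start > day), None)


if __name__ == "__main__":
    today = date(2026, 1, 15)
    print(applicable_on(today))
    print(next_milestone(today))
```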

EU AI Act Readiness

How can organizations prepare for the EU AI Act?

1) Check If Your AI Is In Scope

Wondering if your AI is in scope for the EU AI Act? As a starting point, you can use FairNow’s 2-Minute AI Compliance Checker to screen your system against the EU AI Act and 10 other critical regulations in the US and EU.*

*Disclaimer: This tool is for informational purposes only and does not constitute legal advice. The regulations and laws mentioned may change over time, and new regulations may not be captured. It is important to consult with a legal professional to ensure compliance with current requirements. FairNow is not responsible for any actions taken based on the results of the compliance checker.

2) Build Your Model Inventory

- Assemble a cross-functional team to catalog all of your AI tools and models (yup, all of them!)

- Detail each system’s functionality and capacity

- Identify the team(s) or individual(s) responsible for each model

- FairNow has an AI Inventory feature to make this process streamlined and painless
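Whatever tool you use, a model inventory can start as a list of simple records. The fields below are a hypothetical minimum mirroring the steps above (not a format required by the Act or by any particular product), plus helpers that flag systems with no accountable owner or no risk tier yet.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class InventoryEntry:
    """One AI system or model in the inventory (hypothetical fields)."""

    name: str
    functionality: str                                # what the system does and its capacity
    owners: list[str] = field(default_factory=list)  # responsible team(s) or individual(s)
    vendor: Optional[str] = None                      # None for systems built in-house
    risk_tier: Optional[str] = None                   # set later, during risk classification


def missing_owner(inventory: list[InventoryEntry]) -> list[str]:
    """Names of systems that still have no accountable owner recorded."""
    return [entry.name for entry in inventory if not entry.owners]


def unclassified(inventory: list[InventoryEntry]) -> list[str]:
    """Names of systems that have not yet been assigned a risk tier."""
    return [entry.name for entry in inventory if entry.risk_tier is None]


if __name__ == "__main__":
    inventory = [
        InventoryEntry("resume-screener", "Ranks job applicants", ["HR Analytics"], vendor="ExampleVendor"),
        InventoryEntry("support-bot", "Answers customer questions"),
    ]
    print(missing_owner(inventory))  # ['support-bot']
```

`ExampleVendor` and the system names are placeholders.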

3) Identify High Risk Models

- Identify the risk level of each AI system according to the EU AI Act’s classifications: unacceptable risk, high risk, limited risk, or minimal risk

- Make note of general-purpose AI models (which include many generative AI models), as obligations for their providers apply from August 2nd, 2025, just 12 months after the Act entered into force

- Sort risks by severity to prioritize urgent issues
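Once each system has a tier, sorting by severity gives a simple work queue. This sketch assumes the inventory is a mapping from system name to tier label; the labels and their ordering are our own convention for the Act’s four tiers.

```python
# Lower number = more urgent; labels are our own convention for the Act's four tiers.
SEVERITY = {"unacceptable": 0, "high": 1, "limited": 2, "minimal": 3}


def triage(systems: dict[str, str]) -> list[str]:
    """Return system names ordered from most to least severe tier, then alphabetically."""
    unknown = sorted({tier for tier in systems.values() if tier not in SEVERITY})
    if unknown:
        raise ValueError(f"Unclassified or unknown tiers: {unknown}")
    return sorted(systems, key=lambda name: (SEVERITY[systems[name]], name))


if __name__ == "__main__":
    systems = {
        "chatbot": "limited",
        "resume-screener": "high",
        "spam-filter": "minimal",
        "social-scorer": "unacceptable",
    }
    print(triage(systems))  # ['social-scorer', 'resume-screener', 'chatbot', 'spam-filter']
```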

4) Implement AI Governance

- Maintain detailed documentation of each AI system’s lifecycle: purpose, capabilities, and decision-making processes

- Perform regular monitoring of your AI systems for bias, explainability, and reliability

- Put human oversight measures in place
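The Act does not prescribe a single bias metric. One common starting point, used here purely as an illustration, is to compare each group’s selection rate with that of the most-selected group (an impact ratio). The 0.8 threshold below is the US “four-fifths” rule of thumb, an assumption borrowed for the example rather than an EU AI Act requirement; flagged groups are meant for human review, not automatic conclusions.

```python
from collections import Counter


def impact_ratios(outcomes: list[tuple[str, bool]]) -> dict[str, float]:
    """Each group's selection rate divided by the highest group's selection rate.

    `outcomes` holds (group, selected) pairs, for example the decisions of a screening model.
    """
    totals = Counter(group for group, _ in outcomes)
    selected = Counter(group for group, was_selected in outcomes if was_selected)
    if not totals:
        raise ValueError("No outcomes to analyze")
    rates = {group: selected[group] / totals[group] for group in totals}
    best = max(rates.values())
    if best == 0:
        raise ValueError("No group was selected; impact ratios are undefined")
    return {group: rate / best for group, rate in rates.items()}


def flag_groups(ratios: dict[str, float], threshold: float = 0.8) -> list[str]:
    """Groups whose impact ratio falls below the (assumed) threshold, for human review."""
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


if __name__ == "__main__":
    decisions = [("A", True)] * 5 + [("A", False)] * 5 + [("B", True)] * 3 + [("B", False)] * 7
    ratios = impact_ratios(decisions)
    print(ratios)               # {'A': 1.0, 'B': 0.6}
    print(flag_groups(ratios))  # ['B']
```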

AI Compliance Tools

Simplify EU AI Act compliance with FairNow’s AI Governance Platform

FairNow’s AI Governance Platform is tailored to tackle the unique challenges of AI risk management.

- Streamlined compliance processes, reducing reporting times

- Centralized AI inventory management with intelligent risk assessment

- Clear accountability frameworks and human oversight integration

- Ongoing testing and monitoring tools, including the ability to automate bias audits

- Efficient regulation tracking and comprehensive compliance documentation

FairNow enables organizations to ensure transparency, reliability, and unbiased AI usage, all while simplifying their compliance journey.

Experience how our industry-informed platform can simplify AI governance.
