Trust is the currency of modern business, and it’s an asset that can be quickly eroded by the misuse of AI. When customers, employees, and regulators view your AI systems as opaque “black boxes,” their confidence falters. A Responsible AI Policy is your organization’s public and internal declaration that you are committed to using this technology ethically and transparently. It establishes clear rules for data privacy, fairness, and human oversight, providing the assurance stakeholders need. By formalizing your approach, you move from simply using AI to leading with it, building a reputation for integrity that becomes a true competitive advantage in the market.

Key Takeaways

- Define Your Rules of Engagement with AI: A formal Responsible AI Policy translates abstract ethical goals into clear, enforceable rules that manage risk, protect your reputation, and align your entire organization on the safe and effective use and development of AI.

- Build Your Policy Around Actionable Pillars: A strong policy is built on a practical framework. It must include specific standards for accountability, clear protocols for algorithm testing, defined requirements for human oversight, and a mandate for regular model monitoring to be truly effective.

- Treat Your Policy as a Living Document: Your work isn’t done at launch. An effective policy requires ongoing commitment through regular reviews, continuous team training, and staying current with new regulations to maintain its relevance and authority over time.


What is a Responsible AI Policy?

A Responsible AI Policy is your organization’s official rulebook for using artificial intelligence. It’s a formal document that defines the requirements and prohibitions for how your company will develop, deploy, and manage AI systems. The primary goal is to guide your teams in using AI ethically and safely, aligning every application with your company’s values, industry standards, and legal obligations. This policy acts as the foundation for all your AI governance efforts, setting clear, consistent expectations for everyone involved.

At its heart, a Responsible AI Policy is about building trust by turning abstract principles into concrete actions. It translates concepts like fairness and transparency into operational guidelines that your teams can follow. By creating a responsible and ethical AI framework, you establish a clear commitment to accountability and proactive risk management. For regulated industries, this is non-negotiable. A strong policy helps you address critical risks, such as algorithmic bias in hiring tools or toxic outputs from generative AI models, before they create legal or reputational damage.

AI governance is inherently cross-functional. This policy isn’t just for your data science team; it provides guardrails for anyone in your organization who interacts with AI. It creates a shared language for discussing ethics and risk, bridging the gap between technical, legal, and business units. From the C-suite setting the strategy to the employees using AI-powered tools, everyone understands their role in upholding responsible practices.

The Core Principles of Responsible AI

A Responsible AI Policy is a commitment built on core principles that act as your north star for every AI initiative. These principles help you create systems that are not only effective but also safe, trustworthy, and ethical. They are the foundational pillars that support your entire AI governance structure, ensuring that as you scale your AI use, you do so with integrity and foresight. Let’s break down what these pillars are.

Fairness and Non-Discrimination

At its heart, AI should serve everyone equitably. But bias in data or algorithms can lead to unfair and discriminatory outcomes, creating significant legal and reputational risks, especially in areas like hiring and lending. Your policy must champion fairness by demanding impartial treatment for all individuals. This means actively working to mitigate bias in your AI systems. You can achieve this by using diverse and representative datasets for training, employing algorithms designed for fairness, and conducting regular audits to identify and correct any emerging biases before they cause harm.

Accountability and Governance

When an AI system makes a critical decision, who is responsible for the outcome? A lack of clear ownership is a major pitfall. Accountability means establishing who is answerable for the AI’s development, deployment, and performance. This is especially important when considering third-party AI models, where responsibility for the AI’s lifecycle is split between your organization and a vendor. Strong governance is the framework that makes this possible, defining roles, responsibilities, and oversight processes. Organizations that establish clear accountability are better positioned to avoid public backlash and data breaches, and to build trust with customers. Your policy should clearly map out these lines of responsibility, ensuring someone is always at the helm to guide the AI and answer for its actions.

Transparency and Explainability

Trust is impossible without understanding. Transparency requires you to be open about how your AI systems function, what data they use, and what their limitations are. But transparency alone isn’t enough. AI that informs high-stakes decisions such as hiring and credit requires explainability: the ability to articulate in plain language why a model made a specific decision. This is crucial for debugging, gaining user acceptance, and meeting regulatory requirements. Your policy should mandate clear documentation and the use of Explainable AI (XAI) techniques. This allows your teams, your customers, and regulators to look inside the “black box” and understand the logic behind automated decisions.

Privacy and Data Protection

AI systems are data-hungry, but that appetite comes with a profound responsibility. Every piece of data you collect and use, especially personal information, must be handled carefully. Your AI policy must enforce strict data protection and privacy standards that go beyond basic compliance. This involves securing sensitive information against breaches and ensuring you are protecting individual privacy at every stage of the AI lifecycle, from data collection to model deployment and retirement. By embedding privacy-preserving techniques into your AI development process, you safeguard your customers’ data and fortify their trust in your organization.

Many organizations already address privacy and data protection in separate, dedicated policies, but these risks still apply to many AI systems. Addressing them explicitly in your Responsible AI Policy reinforces the need to weigh them alongside AI-specific risks such as data leakage.

Why Your Organization Needs a Responsible AI Policy

Implementing a Responsible AI Policy is not just about checking a compliance box; it’s a strategic move that builds a strong foundation for your company’s future with AI. Think of it as the blueprint for how your organization will create, deploy, and manage AI systems. Without clear guidelines, you open the door to serious risks that can affect your operations, your reputation, and your bottom line. A formal policy provides the structure needed to use AI confidently and effectively, turning a powerful technology into a reliable asset. It aligns your entire team on a shared set of principles, ensuring that as you scale your AI initiatives, you do so in a way that is controlled, ethical, and aligned with your core business values. This proactive stance is what separates leaders from followers in the age of AI.

Manage Ethical Risks

When you deploy AI without clear ethical guardrails, you’re taking a significant gamble. The technology can inadvertently lead to biased decisions, data privacy breaches, and outcomes that harm your customers and erode trust. For example, a 2016 ProPublica analysis reported racial bias in the risk assessments produced by the COMPAS algorithm used in the U.S. justice system, while Amazon reportedly scrapped a hiring tool after finding that it downgraded resumes from women. A Responsible AI Policy is your primary tool for managing these ethical risks. It establishes firm rules for how AI systems should be built and used, ensuring they align with human values and principles of fairness. By creating a framework for responsible AI governance, you can address potential pitfalls before they become crises. This commitment not only helps prevent public backlash but also helps you build AI systems that truly serve their intended purpose, attracting top talent and loyal customers who value integrity.

Protect Your Business Reputation

Your company’s reputation is one of its most valuable assets, and a single AI misstep can cause lasting damage. Public perception is shaped by how a company handles powerful technologies, and customers are quick to lose faith in organizations that seem careless or unethical. A Responsible AI Policy is a clear statement of your commitment to using AI thoughtfully. It addresses common fears, like job displacement or algorithmic bias, head-on and shows your employees and customers that you’re in control. Proactively managing the challenges of adopting AI is essential for maintaining a positive public image and building the deep-seated trust that underpins a strong brand.

Meet Regulatory and Legal Demands

The legal landscape for artificial intelligence is changing rapidly. New rules, like the EU AI Act, are setting firm requirements for how organizations must govern their AI systems. Additionally, voluntary standards like ISO/IEC 42001 are gaining traction as frameworks organizations can adopt to demonstrate their commitment to AI governance.

For businesses in regulated industries like finance and HR, compliance isn’t optional. A Responsible AI Policy is the first step toward meeting today’s legal demands and those that will come in the future. It provides a documented framework for how your organization minimizes bias, protects data, and ensures accountability. Having this policy in place helps you stay ahead of new regulations and avoid the frantic scramble to catch up. Leading organizations already have the foundations in place to meet compliance requirements, demonstrating to regulators and stakeholders that they are managing AI risk responsibly.

What to Include in Your Responsible AI Policy

A Responsible AI Policy is a blueprint for action. It translates high-level principles like fairness and transparency into concrete rules and processes that guide your teams every day. When you’re ready to build your policy, it should be structured around four key pillars that cover the entire AI lifecycle, from the data you use to the way you monitor models in production. A comprehensive policy gives your teams the clarity they need to build and deploy AI with confidence. It establishes clear ownership, sets expectations for every stage of development, and creates a system of checks and balances to keep your AI initiatives on track. By defining these components, you create a clear path for your organization to follow, turning abstract ethical goals into tangible operational controls.

Data Governance and Quality Standards

Your AI models are only as good as the data they’re trained on. If your data is flawed, your AI’s outputs will be, too. Many organizations face challenges with inconsistent formats and poor data governance, which can lead to biased or inaccurate AI models. Your policy must establish strict standards for data quality and management. This section should define the rules for how data is collected, stored, processed, and protected. It needs to detail requirements for data accuracy, completeness, and relevance, creating a solid foundation for building fair and reliable AI systems. This is the first and most critical step in mitigating downstream risks.
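To make the completeness requirement concrete, here is a minimal sketch of an automated check that flags required fields with too many missing values. The field names, the sample rows, and the 5% threshold are all hypothetical; your policy would set its own standards.

```python
from dataclasses import dataclass

# Hypothetical threshold for illustration; a real policy would define its own.
MAX_MISSING_RATE = 0.05


@dataclass
class FieldReport:
    field: str
    missing_rate: float
    passes: bool


def completeness_report(rows, required_fields, max_missing_rate=MAX_MISSING_RATE):
    """Return one FieldReport per required field, flagging fields with too many gaps."""
    reports = []
    total = len(rows)
    for field in required_fields:
        # Treat absent keys, None, and empty strings as missing values.
        missing = sum(1 for row in rows if row.get(field) in (None, ""))
        # An empty dataset is treated as fully missing so it never passes silently.
        rate = missing / total if total else 1.0
        reports.append(FieldReport(field, rate, rate <= max_missing_rate))
    return reports


# Made-up records used only to exercise the check.
sample_rows = [
    {"applicant_id": 1, "income": 52000, "region": "north"},
    {"applicant_id": 2, "income": None, "region": "south"},
    {"applicant_id": 3, "income": 61000, "region": ""},
    {"applicant_id": 4, "income": 48000, "region": "east"},
]

for report in completeness_report(sample_rows, ["applicant_id", "income", "region"]):
    status = "ok" if report.passes else "needs review"
    print(f"{report.field}: {report.missing_rate:.0%} missing ({status})")
```

A check like this covers only completeness. Accuracy and relevance usually need domain-specific rules and human judgment on top.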

Algorithm Design and Testing Protocols

This is where you define the rules of the road for building and deploying AI. Your policy must require that all AI systems are designed and tested according to responsible AI principles. This means establishing clear protocols for every stage of the model lifecycle, from initial design to pre-deployment validation. Your policy should mandate fairness assessments, bias testing, and performance evaluations against predefined benchmarks. It’s also critical to require thorough documentation for every model, detailing its purpose, limitations, and testing results. These rules and requirements for AI governance create a repeatable, auditable process that holds every project to the same high standard.
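As one illustration of a bias test against a predefined benchmark, the sketch below compares selection rates across groups and computes the ratio of the lowest rate to the highest. The 0.8 benchmark follows the "four-fifths" rule of thumb often cited in U.S. employment contexts. It is an assumption here, not a requirement of this policy, and the group labels and outcomes are made up.

```python
from collections import defaultdict

# Assumed benchmark: the "four-fifths" rule of thumb. Your policy may set a different one.
MIN_IMPACT_RATIO = 0.8


def selection_rates(outcomes):
    """Compute the share of favorable outcomes per group.

    `outcomes` is an iterable of (group, selected) pairs, where selected is a bool.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, selected in outcomes:
        totals[group] += 1
        if selected:
            favorable[group] += 1
    return {group: favorable[group] / totals[group] for group in totals}


def impact_ratio(outcomes):
    """Ratio of the lowest group selection rate to the highest (1.0 means parity)."""
    rates = selection_rates(outcomes)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # No group received a favorable outcome, so the rates are equal.
    return min(rates.values()) / highest


# Hypothetical screening results: (group label, passed screening?)
results = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 28 + [("group_b", False)] * 72
)

ratio = impact_ratio(results)
print(f"impact ratio: {ratio:.2f}")
print("within benchmark" if ratio >= MIN_IMPACT_RATIO else "flag for fairness review")
```

A single ratio is a screening signal, not a verdict. A flagged result should trigger the deeper fairness assessment and documentation that your policy requires.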

Human Oversight and Intervention

AI should empower your team, not operate as a black box. Effective human oversight is essential for building trust and accountability. Your policy must specify where and when human intervention is required. This includes explaining workflows for approvals and reviews before releasing or updating an AI system, defining clear processes for reviewing and validating high-stakes AI recommendations, establishing an appeals process for individuals affected by an AI decision, and having a plan to override or shut down a model if it behaves unexpectedly. By creating these guardrails, you can avoid potential pitfalls like biased decisions and public backlash. This approach helps you build trust with customers and create AI systems that serve their intended purpose.
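A rule for when human intervention is required can be written down as simple routing logic. The sketch below is one hypothetical way to express it: the use-case names, the 0.90 confidence threshold, and the two routes are illustrative assumptions, not prescribed values.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO_APPROVE = "auto_approve"
    HUMAN_REVIEW = "human_review"


@dataclass
class Recommendation:
    use_case: str
    confidence: float        # model's confidence in its recommendation, 0.0 to 1.0
    adverse_to_person: bool  # e.g. a rejection or denial


# Hypothetical policy settings; names and values are illustrative only.
HIGH_STAKES_USE_CASES = {"hiring", "credit"}
MIN_AUTO_CONFIDENCE = 0.90


def route(rec: Recommendation) -> Route:
    """Decide whether a recommendation can proceed without a human reviewer."""
    if rec.use_case in HIGH_STAKES_USE_CASES and rec.adverse_to_person:
        return Route.HUMAN_REVIEW  # adverse high-stakes outcomes always get a reviewer
    if rec.confidence < MIN_AUTO_CONFIDENCE:
        return Route.HUMAN_REVIEW  # low-confidence outputs are escalated
    return Route.AUTO_APPROVE


print(route(Recommendation("hiring", 0.97, adverse_to_person=True)).value)
print(route(Recommendation("support_triage", 0.95, adverse_to_person=False)).value)
print(route(Recommendation("support_triage", 0.60, adverse_to_person=False)).value)
```

Keeping the rule in one small function makes it easy to audit and to update when the policy changes. Appeals and shutdown procedures would sit alongside it as separate processes.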

Monitoring and Improvement

Launching an AI model is the beginning, not the end. Models can drift over time as they encounter new data, leading to degraded performance or emerging risks. Your policy must mandate regular monitoring of all AI systems in production. This section should outline a schedule for regular performance audits, fairness checks, and risk assessments. It should also create a feedback loop for collecting input from users and stakeholders to identify issues and drive improvements. An ongoing commitment to monitoring keeps your AI effective, fair, and aligned with your business goals.
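One common way to quantify drift is the Population Stability Index (PSI), which compares how a score or feature was distributed at launch with how it is distributed now. The sketch below is a minimal version under stated assumptions: the bin edges and sample scores are made up, and the 0.10 and 0.25 thresholds are widely used rules of thumb that you should tune for your own models.

```python
import math

# Commonly cited rule-of-thumb thresholds for PSI; treat them as assumptions to tune.
PSI_WATCH = 0.10
PSI_ALERT = 0.25


def bin_shares(values, edges):
    """Share of values in each bin, where bins are split at the given sorted edges."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        idx = sum(1 for edge in edges if v >= edge)
        counts[idx] += 1
    total = len(values)
    # A small floor avoids log(0) when a bin is empty.
    return [max(c / total, 1e-6) for c in counts]


def population_stability_index(baseline, current, edges):
    """PSI = sum over bins of (current - baseline) * ln(current / baseline)."""
    base = bin_shares(baseline, edges)
    curr = bin_shares(current, edges)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, curr))


# Hypothetical model scores at launch versus in the latest monitoring window.
edges = [0.25, 0.5, 0.75]
baseline_scores = [0.1] * 25 + [0.3] * 25 + [0.6] * 25 + [0.9] * 25
current_scores = [0.1] * 10 + [0.3] * 20 + [0.6] * 30 + [0.9] * 40

psi = population_stability_index(baseline_scores, current_scores, edges)
if psi >= PSI_ALERT:
    status = "alert: investigate and consider retraining"
elif psi >= PSI_WATCH:
    status = "watch: review at the next scheduled audit"
else:
    status = "stable"
print(f"PSI = {psi:.3f} ({status})")
```

PSI only detects that a distribution has shifted. It says nothing about whether performance or fairness has degraded, so it complements the audits and fairness checks described above rather than replacing them.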

Common Challenges of AI Policy Implementation, and How to Overcome Them

Creating a responsible AI policy is a critical first step, but the real test comes during implementation. Putting principles into practice across a large organization introduces a new set of hurdles that can easily derail even the best-laid plans. From technical complexities hidden deep within algorithms to the very human factors of resistance and misunderstanding, these challenges can stall progress if you aren’t prepared. Anticipating these common obstacles is key to building a resilient strategy that turns your policy from a document into a daily operational reality.

Successfully meeting these challenges is about more than just avoiding risk. Organizations that get this right not only sidestep potential pitfalls like data breaches, biased decisions, and public backlash but also build deep trust with customers and attract top talent. They position themselves to create AI systems that truly serve their intended purpose, driving real value for the business and its stakeholders. Understanding these hurdles isn’t about being pessimistic; it’s about being prepared to lead with confidence in an AI-driven future. This section breaks down the most common implementation challenges you’ll face and provides the perspective needed to address them head-on.

Reframe AI Risk Management as a Strategic Mindset

Managing AI risk requires a fundamental shift in how your organization thinks about innovation, accountability, and long-term success. Traditional risk frameworks can sometimes assume a static environment, but AI introduces dynamic, adaptive systems that demand a more proactive and continuous approach. Your staff involved in building and operating AI systems may not be in the habit of regularly thinking about the risks that the technology poses.

Solving this means moving beyond reactive compliance and adopting a mindset that treats risk as an integral part of AI strategy. Bolting on controls after deployment isn’t sufficient; risk thinking must be embedded from the start. You can achieve this through thoughtful design, testing, and monitoring. Embracing this mindset shift helps organizations build more resilient AI systems and fosters a culture of responsibility that keeps pace with rapid technological change.

Balance Progress with Ethical Guardrails

Organizations often feel a tension between the push to adopt AI quickly and the need to do so responsibly. Moving too fast without proper oversight can introduce unacceptable risks, while being overly cautious can mean losing a competitive edge. A helpful analogy is riding a bike: going too fast risks losing control, but going too slow means you don’t have the momentum to stay upright. In the same way, organizations must strike a balance between rapid AI adoption and responsible oversight.

The solution isn’t to choose one over the other. Instead, the goal is to establish ethical guardrails that enable confident progress. Effective AI risk management is not about control for its own sake; it’s a strategic driver that allows your teams to build and deploy AI solutions within a safe and compliant framework. This balance is what separates sustainable AI adoption from high-risk experimentation.

Bridge the AI Skills Gap with Cross-Functional Collaboration

A policy is only as effective as the people who implement it, and many organizations face a significant AI skills gap. This isn’t just about a shortage of data scientists or machine learning engineers. It’s also about a lack of AI literacy across the business. Employees in legal, HR, and compliance roles need to understand the technology to oversee it effectively. Fear of job displacement can also fuel employee resistance, which slows adoption.

To overcome this, you must invest in comprehensive training that bridges the skills gap and empowers your entire team to use and govern AI responsibly. Ensure that staff of different backgrounds are talking to each other – technical teams need to understand the social and legal context of their AI; similarly, legal and compliance teams need to understand the technology itself to properly govern it.

Invest in the Right Tooling for Your AI Governance Program

Operationalizing your Responsible AI Policy out of a spreadsheet may work for a while, but it won’t scale for an organization with multiple AI systems and complex governance needs.

This is where AI governance, risk, and compliance (GRC) tools can help. These tools are designed specifically to support AI governance at scale.

There is no one-size-fits-all tool for AI governance. Consider factors such as the complexity of your AI systems, the regulatory expectations you face, and the scale and maturity of your organization. Also weigh technical factors, such as the integrations each tool provides, the kinds of testing it enables, and how much configuration it offers. Understanding these factors will help you select the right tool for your organization’s profile.

How to Train Your Team for Responsible AI

A Responsible AI Policy is only as effective as the team implementing it. Once your framework is in place, the next critical step is to equip your employees with the knowledge and skills to apply it daily. Training transforms your policy from a static document into a living, breathing part of your company culture. The aim is an ongoing educational program that empowers your team to use AI confidently and ethically, turning principles into practice across the organization.

Teach AI Ethics and Compliance

Your training program should start with the fundamentals. Every employee who interacts with AI, whether they’re building models or using AI-powered software, needs a solid grounding in AI ethics and compliance. This training should clearly explain your organization’s policies on fairness, data privacy, and accountability. Use real-world case studies to illustrate potential pitfalls, like biased outcomes or AI failures that lead to negative business impacts. The goal is to make sure your team understands not just the rules, but the reasoning behind them. This foundational knowledge is essential for making sound decisions and upholding your company’s commitment to responsible AI practices.

Develop Skills for Responsible AI Use

Beyond understanding the rules, your team needs the practical skills to apply them. This means training them to critically assess AI systems and their outputs. For example, teach employees how to spot potential signs of algorithmic bias or how to use explainable AI (XAI) tools to understand why a model made a particular decision. It’s also vital to establish clear protocols for when human oversight is required and how to escalate concerns. By developing these skills, you empower your team to be proactive guardians of your AI policy, rather than just passive users of the technology.

Adapt to New AI Technologies

The field of AI is anything but static. New tools and capabilities emerge constantly, which means the risks are evolving as well. Your training program must be designed to keep current with the latest technology. Establish a rhythm of continuous learning with regular updates on the latest AI advancements and any changes to your internal policies or external regulations. If updated agentic AI capabilities pose new risks, make sure those are accounted for in your policy and training plan. Discussing recent AI-related events in the news can provide valuable, timely lessons. An adaptable training strategy ensures your team remains prepared for what’s next, helping you build trust with customers and maintain your leadership in responsible AI adoption. This proactive approach keeps your organization resilient and ready for the future.

How to Maintain an Effective AI Policy

Creating your Responsible AI Policy is a major milestone, but the work doesn’t stop there. AI technology, regulations, and business applications are constantly shifting, and your policy must adapt to remain relevant and effective. Maintaining your policy is an active, ongoing process that protects your organization from risk and reinforces your commitment to ethical AI. It requires a structured approach centered on three key activities: conducting regular reviews, staying current on legal requirements, and building a company-wide culture of responsibility. By treating your policy as a living document, you establish a durable framework for AI governance that can scale with your business and adapt to changes effectively.

Review and Update Your Policy Regularly

Your AI policy should be reviewed at least annually, or whenever a significant change occurs – like a shift in your data strategy or entry into a new vertical. Evaluate the effectiveness of your program by tracking indicators such as the number of model failures, compliance audit outcomes, and employee training completion rates. These regular check-ins are your opportunity to assess whether the policy’s principles are being applied effectively and whether they still align with your operational reality. This continuous improvement cycle helps you avoid pitfalls like biased decisions or data breaches, build trust with customers and employees, and confirm your AI systems are performing as intended.

Stay Informed on AI Regulations

The legal landscape for artificial intelligence is evolving quickly, with new laws and standards emerging across the globe. Staying ahead of these changes is not just about compliance; it’s a competitive advantage. While some companies may struggle to adapt, leading organizations already have the governance and tools in place to meet the compliance requirements of new regulations like the EU AI Act. A proactive stance allows you to anticipate requirements, adjust your policy accordingly, and demonstrate a credible commitment to lawful and ethical AI operations.

Foster a Culture of Responsibility

A policy document alone cannot create an ethical AI environment. True responsibility is built through a shared organizational culture of accountability and transparency. Your AI Policy should make it clear who is responsible for which parts of AI oversight. Supplement this with comprehensive AI employee training that moves beyond theory to address the practical, day-to-day complexities your teams face when using AI. Equip your employees with the knowledge to identify potential risks and the confidence to raise concerns through clear, accessible channels. When your entire team understands the “why” behind your AI policy and feels empowered to uphold its principles, your governance framework becomes much more than words on a page – it becomes a collective practice.

Get Started with Your Responsible AI Policy

Conclusion

Done well, your Responsible AI Policy forms a strategic foundation that turns ethical intent into operational reality. In a rapidly evolving AI landscape, this policy helps your organization build trust, navigate legal and reputational risks, and create systems that serve people fairly and transparently. By embedding accountability, oversight, and continuous learning into your AI practices, you not only stay ahead of regulatory demands – you lead with integrity.



Responsible AI Policy FAQs

Why do we need a formal AI policy if our teams are already smart and ethical?

A formal policy helps ensure a consistent, scalable standard for the entire organization. It provides a shared language and clear guardrails that align your technical, legal, and business units, which is critical when dealing with high-risk AI. This document turns good intentions into a reliable, auditable process that protects the company and empowers your teams to act with confidence.

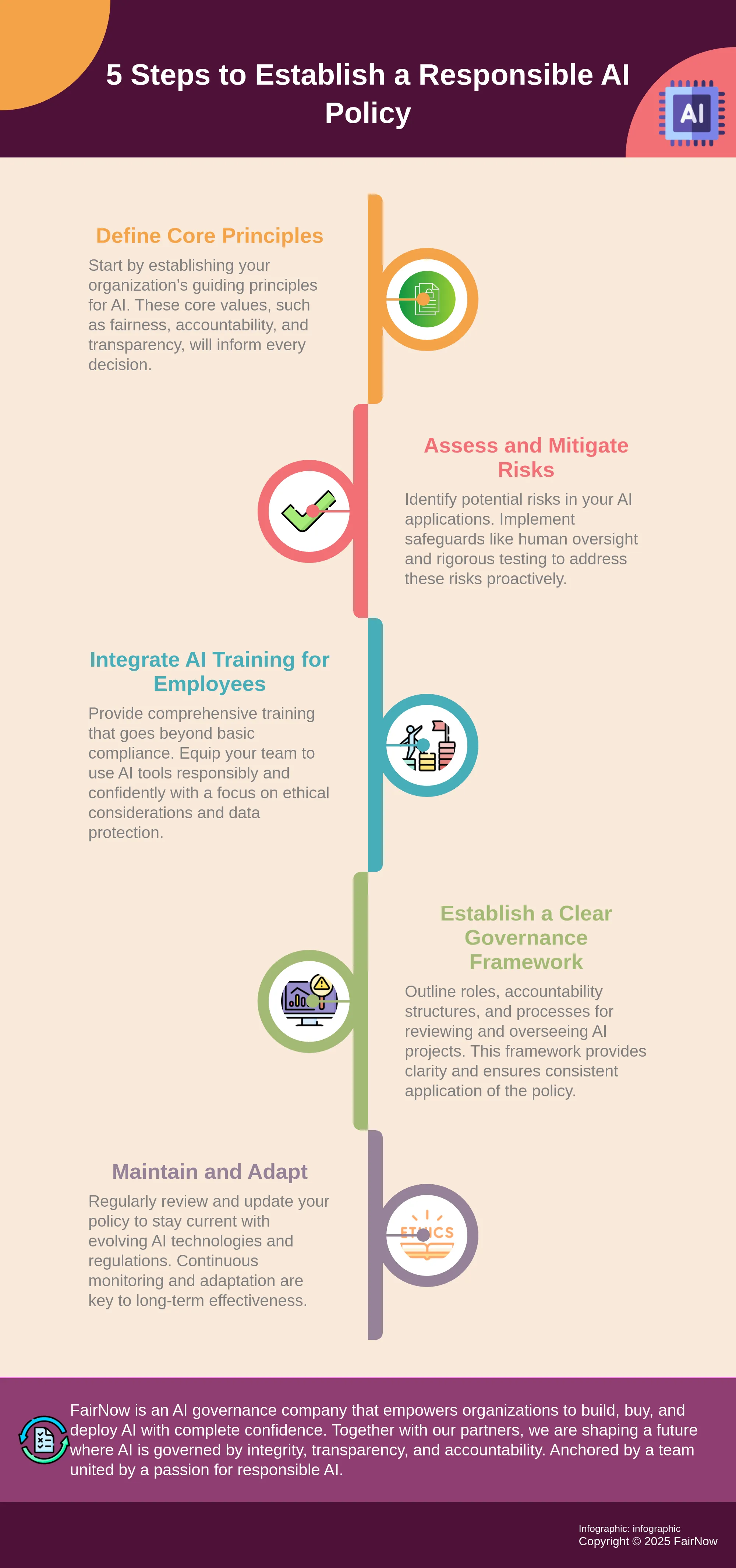

We're just beginning to use AI. What's the first practical step to creating a policy?

The best place to start is by defining your core principles. Before you write a single rule, your leadership team should agree on what values – like fairness, accountability, and transparency – will guide your AI use. This creates the foundation for all subsequent rules and processes and gives your teams a clear direction from the outset.

Who is responsible for creating and managing this policy? Is it just a job for the legal or IT department?

While data science, legal, and IT are key players, a strong AI policy requires a cross-functional effort. You should form a governance committee with representatives from legal, compliance, IT, data science, HR, and key business units. AI impacts the entire organization, so its governance must reflect that diversity of expertise to be practical and effective.

How does a Responsible AI Policy differ from our existing data governance or IT security policies?

Think of it as a specialized extension of those policies. While data governance focuses on the quality and management of data and IT security focuses on protecting systems, a Responsible AI Policy addresses the unique risks introduced by automated decision-making. It covers things like transparency, testing procedures, model explainability, and human oversight, which are specific to how AI systems operate and impact people.

Once our policy is written, is the work done?

Not at all. A policy is a living document, not a one-time project. The technology, regulations, and your own use of AI will constantly evolve, so you must plan for regular reviews to keep the policy relevant – we recommend annual check-ins, or whenever major shifts in your organization’s AI strategy occur (e.g., a shift in risk appetite). Regular monitoring of your AI models, proper execution of policy guidelines, and ongoing team training are what truly bring the policy to life and maintain its effectiveness over time.