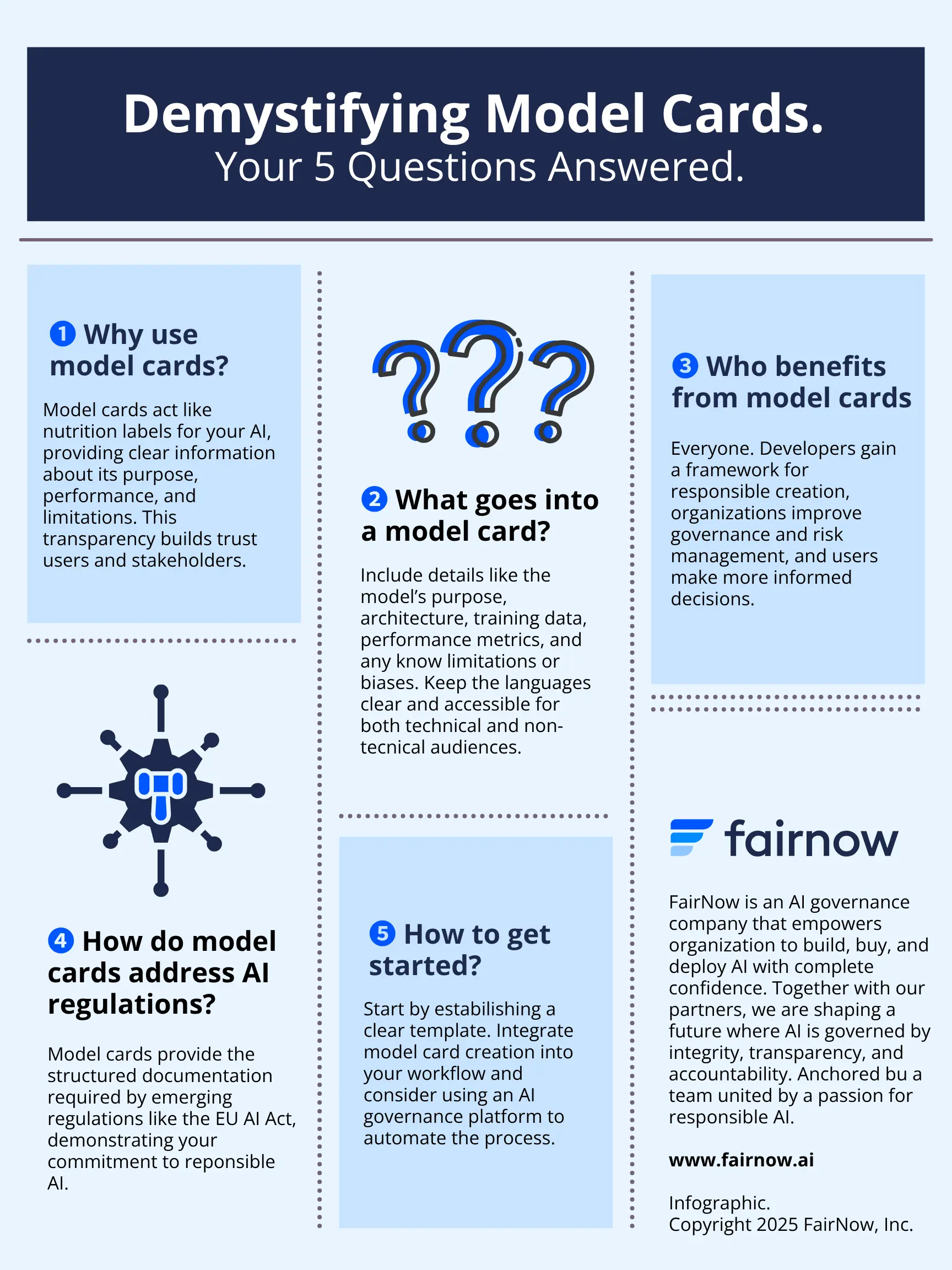

Learn what a model card report is and how it supports responsible AI by providing transparency and accountability in AI model development and deployment.

With new regulations like the EU AI Act on the horizon, the era of deploying AI without rigorous documentation is over. Regulators, customers, and other stakeholders are demanding proof that your models are fair, safe, and transparent. Simply stating that your AI works is no longer enough; you have to show your work. This is where model cards become essential. For any enterprise leader, understanding what a model card report is has become a critical part of compliance strategy. A model card serves as the official record of a model’s purpose, usage, testing, and limitations, providing the evidence needed to satisfy auditors and build trust. It is your primary tool for demonstrating due diligence in an increasingly regulated environment.

Key Takeaways

- Treat Model Cards as essential tools for transparency: They provide a standardized report on an AI’s purpose, performance, and limitations. This is the foundation for building trust with stakeholders and establishing clear accountability for every model you deploy.

- Standardize your implementation process: Create a single, clear template for all teams, write for both technical and business audiences, and integrate documentation directly into your development workflow—not as an afterthought. A consistent process is key to their effectiveness.

- Use Model Cards to strengthen governance and simplify compliance: They provide the structured documentation required to manage risk, meet current and emerging AI regulations, and align technical and business teams. Automating their creation with a governance platform makes this process scalable.

Download our editable Model Card template

Request access to our free AI Model Card template.

What Is a Model Card?

Think of a model card as a cross between a nutrition label and an instruction manual for an AI model. As machine learning models take on more high-impact tasks in areas like hiring and lending, understanding their inner workings isn’t just a good idea—it’s a necessity. A model card provides a clear, standardized report that details what a model is intended to do, how it should be used, and how it performs in different scenarios.

This transparency is the foundation of responsible AI. By documenting a model’s purpose, limitations, and potential biases, you create a clear record that supports accountability and builds trust with stakeholders, regulators, and users. It’s a simple yet powerful tool for communicating the story of your AI, from its initial design to its real-world application. For any organization serious about AI governance, model cards are an essential piece of the puzzle, providing the clarity needed to manage risk and deploy AI with confidence.

What It Is and Why It Matters

At its core, a model card is a structured overview of how an AI model was designed and evaluated. It is a key artifact in any responsible AI framework because it makes the development process transparent. By clearly outlining a model’s components and performance metrics, model cards help everyone involved understand its specific strengths and weaknesses. This is especially critical when models are used for high-stakes decisions, like predicting health risks or financial outcomes. They provide the necessary details about how well a model works under various conditions, which is fundamental for risk management and ethical oversight.

The Anatomy of a Model Card

A comprehensive model card acts as a standardized guide, much like an instruction manual for your AI. While the exact format can vary, a useful card typically includes several key sections that provide a complete picture of the model. You can think of it as a checklist for responsible documentation.

A typical model card structure includes the model’s name and version, its intended use cases, and details about its architecture. It also describes how users should monitor or oversee the model. It covers the data used for training, key performance metrics, and, most importantly, any known limitations or ethical considerations. This includes potential biases or scenarios where the model is likely to underperform, giving developers and users a clear-eyed view of what the AI can and cannot do.
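To make the checklist idea concrete, the sections above could be captured as a simple data structure. The following is a minimal Python sketch, not a standard schema: the class name `ModelCard`, its field names, and the `missing_sections` completeness check are hypothetical choices made for illustration.

```python
from dataclasses import dataclass, field, fields


@dataclass
class ModelCard:
    """Hypothetical model card schema mirroring the sections described above."""
    name: str
    version: str
    intended_uses: list[str] = field(default_factory=list)
    architecture: str = ""
    oversight_guidance: str = ""  # how users should monitor or oversee the model
    training_data: str = ""
    performance_metrics: dict[str, float] = field(default_factory=dict)
    limitations: list[str] = field(default_factory=list)
    ethical_considerations: list[str] = field(default_factory=list)

    def missing_sections(self) -> list[str]:
        """Return the names of sections that are still empty, in declaration order."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


# Example: a draft card with only its identity and intended use filled in.
card = ModelCard(
    name="resume-screener",
    version="1.2.0",
    intended_uses=["Rank applications for recruiter review"],
)
print(card.missing_sections())
```

Keeping every section in one typed structure makes it easy to flag an incomplete card before it goes to review; a real template would likely carry more fields than this sketch.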

Why Your AI Needs Model Cards

Think of a model card as a fundamental component of your AI governance strategy. Model cards can help satisfy compliance requirements under laws like the EU AI Act, Colorado SB205, and NYC Local Law 144. A model card is not just another piece of documentation to file away; it is an active tool that brings clarity and structure to your AI systems. For any organization serious about deploying AI responsibly, especially in high-stakes fields like HR and finance, model cards are non-negotiable. They provide a clear, consistent record of what an AI model is, what benefits (and risks) it brings, and how it should be used. This documentation is the bedrock for building systems that are not only effective but also fair, transparent, and accountable from the ground up. By making model cards a standard part of your workflow, you create a powerful framework for managing risk and building trust with everyone who interacts with your AI.

Build Transparency and Trust

Transparency is the currency of trust, and model cards are part of how you earn it. They function as a straightforward, structured overview of an AI model’s purpose, design, and performance. By clearly documenting how a model was created and evaluated, you provide stakeholders—from developers and business leaders to regulators and end-users—with the information they need to understand its capabilities and limitations. This isn’t about revealing proprietary code; it’s about being open about the model’s intended uses and known blind spots. This level of clarity helps demystify AI, making it more approachable and reliable. It represents a significant step towards responsible AI development by standardizing how models are reported, ensuring everyone has a shared understanding of the technology.

Drive Accountability in Development

Model cards introduce a necessary layer of discipline into the AI development lifecycle. By requiring a standardized report, you prompt your teams to think critically about their choices and document them for review. This process drives accountability by creating a clear record of a model’s performance metrics, especially across different demographic groups. The original framework for model cards emphasizes reporting on performance for subgroups based on factors like race or gender to proactively identify and address potential biases. When developers know they need to report these findings, they are more likely to build fairness checks into their process from the start. This documentation creates a clear line of sight into a model’s behavior, making it easier to diagnose issues and hold the right teams accountable for building equitable AI.

How to Create an Effective Model Card

Creating a model card isn’t just another box to check in your development process. It’s an exercise in clarity and responsibility. A well-constructed model card acts as a central source of truth, providing a clear, honest summary of your AI model for anyone who needs to understand it, from fellow developers to customers to the C-suite. The goal is to build a document that is both comprehensive in its technical details and accessible in its language.

Think of it as creating a user manual for your model. You need to provide enough detail for a technical user to understand its architecture and performance, while also giving a non-technical stakeholder the information they need to assess its risks and suitability for a specific use case. This balance is key. A great model card preemptively answers the tough questions about fairness, performance, and limitations, making it a foundational element of a strong AI governance framework. The process starts with knowing what information to gather and then committing to presenting it with absolute clarity.

Write for Clarity and Accessibility

A model card should communicate the most important information to both technical and non-technical audiences. It should be comprehensive enough to properly address the purpose, usage, performance, and limitations of the model, but concise enough to be accessible. The primary goal is transparency, so avoid overly technical jargon wherever possible. When you must use specific terminology, explain it in simple terms. Your audience includes compliance officers, legal teams, and business leaders who need to make informed decisions but may not have a background in machine learning.

Structure the information logically, using clear headings and bullet points to make the document scannable. Use visualizations like charts or graphs to illustrate performance metrics, making complex data easier to digest. By presenting the model’s capabilities and limitations in plain language, you help build a shared understanding across your organization. This approach demystifies the AI, making it easier for everyone to engage with its development and deployment responsibly.

Create a Framework for Evaluation

You can’t effectively govern what you can’t consistently measure. Model cards should establish a standardized framework for evaluating an AI model’s performance and limitations. These documents provide clear, concise details on how a model performs under various conditions (both expected conditions and edge-case scenarios), creating a baseline for ongoing monitoring and validation. The process of creating a card forces development teams to rigorously test their work against a defined set of metrics.

This evaluation goes beyond simple accuracy. It can also address risks such as bias by comparing performance across demographic groups to surface disparities related to factors like race, gender, or age.
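A disaggregated evaluation of this kind can be sketched in a few lines. The example below is an illustration under simple assumptions: it uses accuracy as the only metric, made-up labels, and placeholder group names `A` and `B`. Real fairness reviews often compare other metrics as well, such as false positive rates or selection rates.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Compute accuracy separately for each subgroup label (disaggregated evaluation)."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}


def max_accuracy_gap(per_group):
    """Largest difference in accuracy between any two subgroups."""
    values = list(per_group.values())
    return max(values) - min(values)


# Toy labels and predictions, invented purely for illustration.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 0, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

per_group = accuracy_by_group(y_true, y_pred, groups)
print(per_group)                    # {'A': 0.75, 'B': 0.5}
print(max_accuracy_gap(per_group))  # 0.25
```

A model card would report both the per-group numbers and the gap, since an aggregate accuracy of 0.625 here would hide the fact that group `B` is served noticeably worse.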

Improve Communication with Stakeholders

AI projects involve a wide range of stakeholders, from data scientists and engineers to legal counsel, compliance officers, and business executives. Such a varied group makes it difficult to have productive conversations about risk and performance. Model cards can act as a bridge, translating complex technical information into a format that is accessible to both technical and non-technical audiences.

This shared document becomes a single source of truth that everyone can reference. It allows a compliance officer to quickly understand a model’s intended use and limitations without needing to read code. It helps a product manager explain the model’s capabilities to customers. As the Model Card Guidebook highlights, this documentation is meant for a diverse audience. By creating a common language, model cards facilitate clearer communication, streamline approvals, and ensure everyone is aligned on the model’s purpose and risks.

How Model Cards Empower AI Users

Model cards aren’t just technical documents for developers; they are essential guides, in effect a user manual, for everyone who interacts with an AI system. They translate complex technical information into practical insights, giving your teams the context they need to use AI tools responsibly and effectively. A model card should clearly explain the technical instructions for use, which settings or options the user controls, how results should be interpreted, and which uses require additional human oversight or should be avoided altogether. For organizations that sell AI to customers, the model card should also explain how customers should monitor the AI system after deployment.

When users understand the ‘how’ and ‘why’ behind an AI’s output, they move from being passive recipients of technology to active, informed participants. This shift is fundamental to building a culture of trust and accountability around AI within your organization. It empowers your people to ask the right questions and use AI with confidence.

Make More Informed Decisions

When your team uses an AI tool, they need to trust its outputs. Model cards build that trust by providing clear, concise details on how a model performs under various conditions. A good model card reports performance metrics separately for demographic groups, such as those defined by race or gender, so that potential biases are clearly visible. This transparency is critical. For example, an HR manager using an AI-powered hiring tool can consult its model card to see if it was tested for fairness across different populations. This allows them to make more informed decisions and mitigate risks, rather than blindly accepting the AI’s recommendations. It gives them the power to use the tool as an aid, not an absolute authority.

Understand a Model’s Strengths and Weaknesses

Every tool has its limits, and AI is no exception. A model card clearly outlines an AI’s intended uses, capabilities, and, just as importantly, its limitations. By detailing how a model was trained and evaluated, it gives users a realistic picture of where it excels and where it might fall short. This knowledge is crucial for selecting the right model for a specific job and avoiding its use in inappropriate contexts. For instance, a model card might specify that a fraud detection model performs less accurately on certain transaction types. Knowing this in advance prevents misapplication and improves overall operational effectiveness.

Common Challenges of Implementation

Model cards are a powerful tool for AI transparency, but putting them into practice comes with its own set of hurdles. Simply deciding to create them is the first step; the real work lies in executing them effectively. Many organizations struggle because the process isn’t as simple as filling out a template. To build a truly responsible AI program, you need to anticipate these challenges and create a strategy to address them. Let’s walk through the most common obstacles.

The Lack of Standardization

One of the biggest frustrations in implementing model cards is the absence of a universal standard. Different teams and vendors often have their own ideas about what makes a model card “complete.” As researchers have noted, documentation for machine learning models often provides “very little information regarding model performance characteristics, intended use cases, [or] potential pitfalls.” This inconsistency makes it difficult to compare models or trust that the information is comprehensive. Without a clear, organization-wide framework, your model cards can become a collection of documents with varying levels of detail, defeating their purpose of creating clear and consistent AI documentation.

Balance Technical Details with Simple Language

Model cards must serve a diverse audience, from the data scientists who build the models to the business leaders who approve them. This creates a significant communication challenge: how do you provide enough technical detail for an expert review without overwhelming a non-technical stakeholder? A model card should function as a “boundary object,” an artifact that people with different backgrounds can use. If the language is too technical, it fails to provide transparency to decision-makers. If it’s too simple, it lacks the necessary rigor for developers and auditors. Finding that balance is critical for a model card to be effective.

Address Ethical Blind Spots

A model card isn’t just about performance metrics; it’s a tool for ethical oversight. The challenge is that identifying every potential ethical pitfall is incredibly difficult. It requires you to go beyond standard accuracy scores and actively look for biases, safety issues, and other risks. The development team must consider which risks arise from using the model, estimate the potential impact of each, and decide how to mitigate them appropriately. Skipping this process can lead to deploying a model that seems fair on the surface but causes real harm.

How to Overcome Implementation Hurdles

Putting Model Cards into practice presents a few common roadblocks, from inconsistent documentation to the sheer effort of manual creation. But these are far from insurmountable. With a clear strategy, you can build a process that makes creating and maintaining Model Cards a seamless part of your AI development lifecycle. The key is to focus on standards, integration, and the right technology.

Integrate Cards into Your Workflow

Model Cards shouldn’t be an afterthought created right before deployment. To be truly effective, they must be a living document that evolves with the model. Integrate the creation and updating of Model Cards directly into your development workflow, just like code reviews or security scans. Model Cards should be updated whenever there are significant changes to the model, how it’s used, or who it’s used on.

This process should include documenting how the model performs on different data subsets and explicitly stating its intended uses and limitations. This practice helps you identify potential performance gaps or areas of concern early on. By making Model Cards an integral part of your machine learning development process, you turn transparency from a final-step chore into a continuous, value-adding habit.
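One way to make the update rule enforceable is a check that compares what the card documents against what is actually deployed, for example as a step in a CI pipeline. This is a hypothetical sketch: the trigger field names and the `card_needs_update` function are assumptions for illustration, not part of any particular tool.

```python
# Hypothetical fields whose changes should trigger a model card review:
# the model itself, how it is used, and who it is used on.
REVIEW_TRIGGERS = ("model_version", "intended_use", "target_population")


def card_needs_update(card_snapshot: dict, current: dict) -> list[str]:
    """Return the trigger fields whose current value differs from what the card documents."""
    return [key for key in REVIEW_TRIGGERS if card_snapshot.get(key) != current.get(key)]


documented = {
    "model_version": "2.0",
    "intended_use": "credit pre-screening",
    "target_population": "US applicants",
}
deployed = {
    "model_version": "2.1",
    "intended_use": "credit pre-screening",
    "target_population": "US and Canadian applicants",
}

stale = card_needs_update(documented, deployed)
if stale:
    # In a CI pipeline, this condition could fail the build until the card is updated.
    print(f"Model card out of date; review: {', '.join(stale)}")
```

Running the check on every release turns "update the card when things change" from a reminder into a gate, which is the kind of workflow integration described above.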

Use the Right Tools and Frameworks

Manually creating and updating Model Cards for every model is not scalable, especially in a large enterprise. It’s time-consuming and leaves room for human error. This is where automation becomes essential. The right tools can pull information directly from your development and monitoring systems to populate sections of the Model Card automatically.
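As a rough illustration of automated population, the sketch below fills the performance and limitations sections of a card from evaluation output. It assumes the metrics arrive as JSON from some evaluation job; the function name `render_card_markdown`, the metadata keys, and the example values are hypothetical and do not represent any governance platform’s API.

```python
import json


def render_card_markdown(meta: dict, metrics: dict) -> str:
    """Fill model card sections from metadata and evaluation results."""
    lines = [f"# {meta['name']} (v{meta['version']})", "", "## Performance"]
    # Sort metrics so the generated card is stable across runs.
    for metric, value in sorted(metrics.items()):
        lines.append(f"- {metric}: {value:.3f}")
    lines += ["", "## Known limitations"]
    # Make an empty limitations section explicit rather than silently omitting it.
    limitations = meta.get("limitations") or ["None documented yet"]
    lines += [f"- {item}" for item in limitations]
    return "\n".join(lines)


# Stand-in for metrics an evaluation job might write to disk (values are illustrative only).
eval_output = json.loads('{"accuracy": 0.9123, "auc": 0.95}')
meta = {
    "name": "fraud-detector",
    "version": "3.4",
    "limitations": ["Lower recall on cross-border transactions"],
}
print(render_card_markdown(meta, eval_output))
```

Because the performance section is generated from results the team already produces, it stays in sync with each evaluation run instead of being copied by hand.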

Platforms designed for AI governance can streamline this entire process. With a centralized solution like FairNow, you can automate risk tracking, manage compliance, and generate consistent documentation across all your models. Using a purpose-built framework removes the manual burden from your teams, allowing them to focus on building great models while the system handles the necessary transparency reporting. This approach makes responsible AI adoption achievable at scale.

The Future of Model Cards

Model cards are more than just a good practice; they are quickly becoming a cornerstone of responsible AI strategy. As organizations scale their use of artificial intelligence, these documents will play a critical role in managing risk and complying with a complex web of new rules. Their future lies not as static documents, but as dynamic tools integrated directly into the AI lifecycle. This evolution is being driven by two major forces: the rapid emergence of AI-specific regulations and the growing need for comprehensive AI governance frameworks to manage models at scale. For any organization serious about deploying AI ethically and effectively, understanding this trajectory is essential for staying ahead.

Adapting to New AI Regulations

As governments worldwide roll out new rules for artificial intelligence, model cards are shifting from a “nice-to-have” to a “must-have.” Regulations like the EU AI Act place a heavy emphasis on transparency and documentation, requiring organizations to show that their models are fair and safe and perform as intended. A well-constructed model card serves as clear evidence of this due diligence. It provides regulators with a standardized, easy-to-understand report on a model’s purpose, limitations, and testing protocols. This proactive documentation helps you meet compliance obligations and demonstrates a foundational commitment to responsible AI, building trust with both regulators and the public.

Integrating with AI Governance

Model cards are most powerful when they are woven into the fabric of your organization’s AI governance framework. Instead of being an afterthought, they should be living documents that connect development, risk management, and compliance. Integrating model cards into your workflow creates a centralized, authoritative record for every model you deploy. This approach provides a clear line of sight into your entire AI ecosystem, which is critical for maintaining control and accountability. As explained in the NIST AI Risk Management Framework, effective governance requires structured documentation to map, measure, and manage AI risks, and model cards are well suited to that job.



AI Model Card FAQs

Why can't my technical team just use their existing documentation instead of creating a model card?

Think of it this way: your technical documentation is written for other engineers. It’s deep, specific, and focused on the “how.” A model card is written for everyone. It acts as a bridge, translating complex technical details into a clear, accessible format that your legal, compliance, and business leaders can use to make informed decisions. It answers the “what” and “why” questions, focusing on a model’s purpose, performance, and limitations in plain language.

Do we really need a model card for every single AI model we use?

The level of detail in your model card should match the level of risk. For high-stakes models used in areas like hiring or lending, a comprehensive model card is non-negotiable. For smaller, internal models with low impact, a more streamlined version might be appropriate. The goal is to create a consistent habit of documentation. Starting this practice even on a small scale builds the discipline required to govern your most critical AI systems responsibly.


My team is worried this will just be more administrative work. How do we make this process efficient?

The key is to integrate model card creation directly into your development workflow, not tack it on at the end. Treat it like a code review—a necessary step before deployment. You can make this process much smoother by establishing a clear, standardized template for everyone to follow. Better yet, use AI governance platforms that can automate much of the data collection, pulling performance metrics and other information directly from your systems to populate the card.

What if our model has known weaknesses or biases? Should we really put that in the model card?

Yes, absolutely. A model card is a tool for transparency and risk management, not a marketing brochure. Being upfront about a model’s limitations is a sign of responsible development and is fundamental to building trust. Documenting where a model might underperform allows your organization to create proper safeguards, avoid using it in the wrong contexts, and protect yourself from unintended consequences. Hiding these facts is what creates real liability.

How does a model card actually help with new AI regulations?

Upcoming regulations, like the EU AI Act, require organizations to provide clear documentation on how their AI systems are built, tested, and monitored. A model card serves as structured, tangible proof of your due diligence. It provides regulators with a clear report on a model’s intended use, performance metrics, and fairness evaluations, directly addressing the transparency requirements at the heart of these new laws.