The Question Everyone Is Asking: When Does The EU AI Act Start?

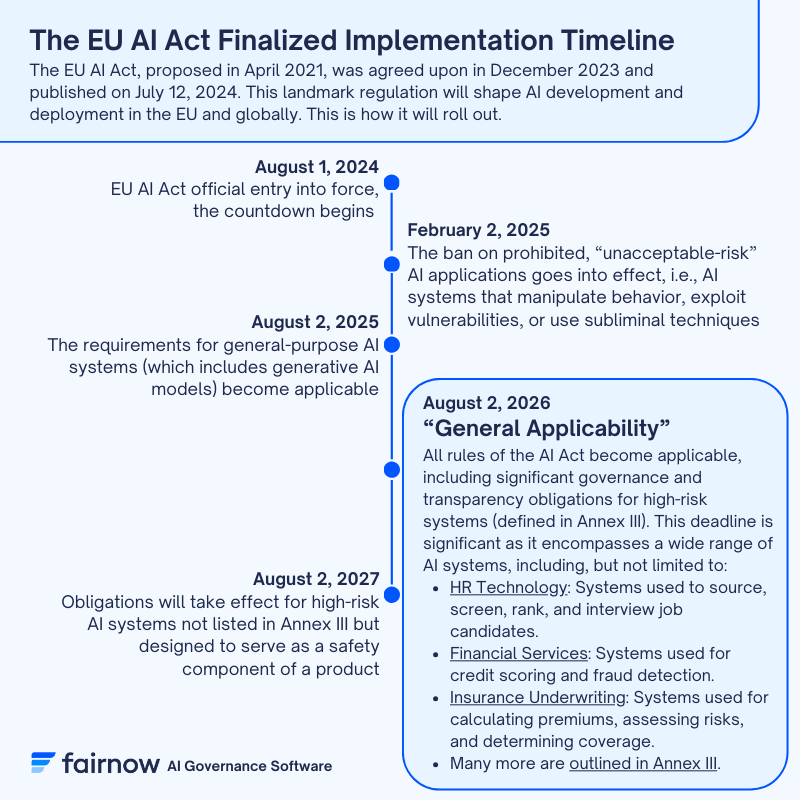

Enforcement of the EU AI Act will follow a staggered rollout.

Businesses must thoroughly understand the risk level and details of their AI usage in 2024 to avoid penalties when the Act's earliest provisions take effect.

This is what we know so far and how you can stay ahead of these regulations as they evolve.

A Quick Review Of The EU AI Act Timeline Thus Far:

The EU AI Act has made some significant progress since its proposal in April 2021.

April 21, 2021: The European Commission introduced a proposal to regulate artificial intelligence in the European Union. Alongside the AI Act, it also unveiled a coordinated plan that outlines joint actions by the Commission and member states.

June 14, 2023: The European Parliament approved its position on the AI Act with 499 votes in favor, 28 against, and 93 abstentions.

December 8, 2023: The European Parliament and the Council of the EU reached a provisional political agreement on the Act.

August 1, 2024: The Act enters into force.

So, What Happens Next With The EU AI Act?

February 2, 2025: Ban on “unacceptable-risk” AI applications begins.

Unacceptable or prohibited uses of AI are AI systems that threaten an individual’s fundamental rights.

Unacceptable risk systems encompass those with a high potential for manipulation, either through subtle messaging and stimuli, or by taking advantage of vulnerabilities such as socioeconomic status, disability, or age.

These include:

- AI systems or apps that covertly influence human behavior (subliminal techniques).

- Manipulative techniques, such as voice-activated toys that encourage dangerous behavior in children.

- Social scoring systems, whether operated by governments or companies.

- Certain uses of biometric systems, such as emotion recognition in the workplace.

- Certain systems that categorize people, or identify them remotely and in real time in public spaces for law enforcement purposes, subject to a few narrow exceptions.

August 2, 2025: Requirements for general-purpose AI systems (including generative AI) apply.

General-purpose AI systems are versatile AI models that can be used for many different purposes. Foundation models are large AI models that can perform a wide range of tasks, such as generating text, images, or computer code.

For foundation models, the AI Act emphasizes transparency. Separately, it requires fundamental rights impact assessments for certain high-risk AI systems used in banking and insurance.

General-purpose AI models that could pose systemic risks face additional obligations, including:

- Risk assessment using advanced methods

- Ensuring strong cybersecurity

- Monitoring and disclosing energy usage

Providers must also comply with EU copyright law and publish a sufficiently detailed summary of the content used to train the model.

Additionally, there is a requirement to register in an EU database, which could lead to legal disputes with rights holders over copyright and privacy.

August 2, 2026 (General Applicability): Enforcement of the full EU AI Act.

This is the big one, no matter which industry you operate in.

You will want to keep meticulously detailed records of each AI system you build, buy, and use.

This date is known as “general applicability” because the Act then applies in full, including robust governance requirements for high-risk systems in areas such as:

- HR Technology

- Financial Services

- Insurance Underwriting

- Education and Vocational Training

- Many other areas listed in Annex III

The enforcement of the EU AI Act in 2026 will present several challenges for businesses:

1. Definition of AI: The current definition of AI in the Act is broad and could cover statistical software and even general computer programs. Tech companies have requested a narrower definition of ‘AI systems.’

2. Prohibition on Social Scoring: This could affect the credit and insurance industries by limiting their ability to assess risk.

3. Privacy and Personal Rights: The Act addresses the potential for AI systems to infringe on privacy and personal rights due to their ability to process vast amounts of data.

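4. Liability: The AI Act itself does not create a right to sue for damages. The European Commission separately proposed an AI Liability Directive in 2022 to make it easier for people and companies to claim compensation after being harmed by an AI system.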

These issues will make it harder to inventory AI systems and to classify them by risk level.

It’s important for businesses to prepare for these changes and understand how they will impact their operations.

August 2, 2027: Rules apply to high-risk AI systems that serve as safety components of products covered by the EU product legislation listed in Annex I (as opposed to the use cases listed in Annex III).

This timeline highlights the gradual implementation of the Act, emphasizing the need for businesses to prepare for compliance, especially those using high-risk AI systems across various sectors.

Do You Have to Follow the EU AI Act?

Well – of course, you don’t have to do anything. But you are going to want to take the EU AI Act very seriously.

Violation of the EU AI Act carries SIGNIFICANT fines.

Maximum fines are tiered, ranging from 7.5 million euros or 1% of a company’s worldwide annual turnover (whichever is higher) to a striking 35 million euros or 7% of its worldwide annual turnover for the most serious violations, such as using prohibited AI practices.
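Because the cap is “whichever is higher,” the turnover-based figure takes over once a company is large enough. The sketch below illustrates that arithmetic for the top tier only (35 million euros or 7% of global annual turnover). The function name `max_fine` and the example turnover figures are hypothetical; the result is a ceiling, not a prediction of what a regulator would actually impose.

```python
# Minimal sketch of the "whichever is higher" rule for the EU AI Act's top fine tier.
# Assumption: only the 35M EUR / 7% tier is modeled; the result is a maximum, not an actual fine.

TOP_TIER_FIXED_EUR = 35_000_000  # fixed cap for the most serious violations
TOP_TIER_RATE = 0.07             # 7% of global annual turnover


def max_fine(annual_turnover_eur: float,
             fixed_cap_eur: float = TOP_TIER_FIXED_EUR,
             turnover_rate: float = TOP_TIER_RATE) -> float:
    """Return the higher of the fixed cap and the turnover-based cap (hypothetical helper)."""
    if annual_turnover_eur < 0:
        raise ValueError("turnover must be non-negative")
    return max(fixed_cap_eur, turnover_rate * annual_turnover_eur)


if __name__ == "__main__":
    # Illustrative turnovers: below, at, and above the point where 7% equals 35M EUR (500M EUR).
    for turnover in (100_000_000, 500_000_000, 2_000_000_000):
        print(f"Turnover EUR {turnover:,}: maximum fine EUR {max_fine(turnover):,.0f}")
```

For a company with 100 million euros in turnover, 7% is only 7 million euros, so the 35 million euro fixed cap applies. At 2 billion euros in turnover, the 7% figure (140 million euros) becomes the ceiling.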

To put things in perspective, these fines are much higher than GDPR fines, which top out at 10 million euros or 2% of global annual turnover, or 20 million euros or 4% for the most serious infringements.

Of course, fines are just one aspect of the risk the company takes on by playing fast and loose with AI regulations.

It’s also important to consider:

- reputational damage

- data privacy concerns

- additional legal risks

- bias in decision-making

- increased third-party risks

- security vulnerabilities

What Should You Do Now?

This staggered rollout aims to simplify rule-making and encourage companies to follow these rules voluntarily.

Strategic leadership teams are already preparing for the upcoming regulations.

You have a few options when it comes to tracking and implementing protective measures in advance of the EU AI Act’s full adoption.

The first, of course, is to read through hundreds of pages of legislation and highlight the clauses that matter most for your company.

Or, let us handle that!

Two Options to Stay on Top of Upcoming Regulations Depending on Your Size, Industry, and Risk Tolerance

1: Explore our EU AI Act preparedness guide to learn more about how you can get into compliance – fast – with the EU AI Act.

2: If you’re a large organization, you will want to implement AI governance software to centralize and simplify the process.

If that’s the case, you’ve come to the right place.

Fortune 500 companies depend on FairNow for continuous model testing, regulatory compliance tracking and prep (e.g. NYC LL144, EU AI Act), and bias assessments.

Let us know what you need help with here, and we can show you which tools are going to make your life a lot easier.
