Key takeaways:

- An acceptable AI use policy is designed to help organizations clarify how their employees can use AI at work.

- AI usage policies can foster AI innovation while reducing the risk that employees misuse AI.

- AI usage policies should include sections on AI principles, technology considerations, approved and prohibited uses, the process for getting a new use case reviewed, and approaches to compliance monitoring.

- Organizations should track AI adoption by employees to ensure that employees continue to use AI safely. AI governance platforms like FairNow can help streamline and automate review.

Download FairNow’s free Employee AI Usage Policy Template here and learn more about AI Usage Policy content in our detailed guide below.

Why Download This Template?

- Establish clear AI usage guidelines for employees

- Reduce risk from unsanctioned or unsafe AI tools

- Accelerate the adoption of safe, innovative AI without slowing teams down

- Easily customize for GDPR, NYC LL144, ISO 42001, and other compliance needs

Workplace Adoption of AI

Generative AI has become increasingly common in the workplace since the release of OpenAI’s ChatGPT in 2022.

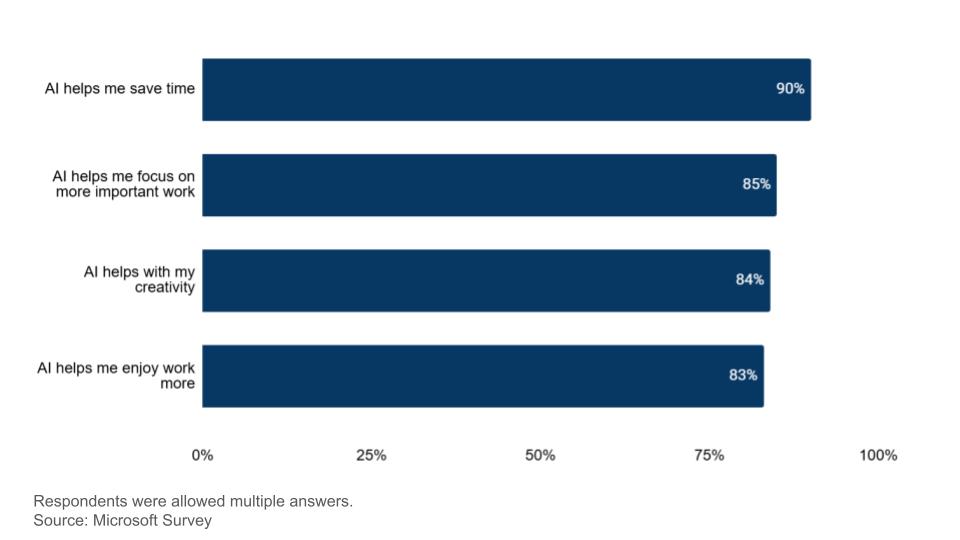

Why are employees using AI? (Microsoft Survey – 2024)

According to a 2024 Microsoft survey of over 30,000 knowledge workers globally, 75% already use AI at work, and 78% of AI users bring their own AI tools to work. Workers use AI primarily to save time, increase their creativity, and refocus on their most important and enjoyable work tasks.

But there are real risks to adopting new AI capabilities in the workplace. These risks can be performance-related, reputational, or legal/regulatory.

Employees Adopt AI That Creates Risk For Organizations

We’ve consulted with companies across domains on AI adoption and governance. While AI uses that originate with employees can be extremely valuable, we’ve also come across uses that are risky or unsafe.

Examples of unsafe or risky AI usage behaviors can include:

- Data Privacy: A leader enters confidential company information into a GenAI tool, not realizing that the tool’s terms may allow the foundation model provider to retain this data and use it to train its models.

- Poor Performance: A legal associate asks GenAI to cite references to prior court cases and uses them in a brief without validating their accuracy. The associate didn’t realize that the GenAI tool had fabricated the citations, a failure known as hallucination.

- Bias Issues: A manager uses AI to draft a performance review for their associate. The AI tool generates a result that contains bias, opening the company to legal risk if the employee ends up suing for discrimination.

- Regulatory Risk: An employee uses GenAI in a way that is prohibited by key laws like the EU AI Act, exposing the company to significant fines (for the most serious EU AI Act violations, up to €35 million or 7% of a company’s worldwide annual turnover, whichever is higher).

- Security Breaches: A tech team adopts an AI agent to automate a series of processes, not realizing that the agent created backdoor access to their internal systems.

What is an Acceptable AI Use Policy and Why Is It Important?

An AI usage policy is a document designed to help employees understand which uses of AI are permitted within the organization. It defines acceptable uses and outlines the process by which employees can get specific use cases reviewed for approval.

There are multiple benefits to creating an AI Usage Policy:

- Clarity: AI use policies ensure employees clearly understand where and how they can adopt their own GenAI tools in the workplace and for business purposes.

- Reduced Risk: When an AI use policy outlines which AI can be adopted (and under what conditions), employees are less likely to violate terms or use AI in unsafe ways, because they have clear guardrails for acceptable use.

- Increased AI Innovation: Greater clarity about acceptable and unacceptable uses gives employees the confidence to experiment safely and to pursue adoption they might otherwise have avoided out of concern about corporate policy.

The primary intent of an AI usage policy is to create transparency for associates. When it’s written well, AI usage policies help associates feel comfortable adopting AI in a safe way while reducing uncertainty and risk.

An Acceptable Employee AI Use Policy Template (with example text)

A comprehensive AI usage policy will contain the following five sections:

- The organization’s risk appetite and AI usage principles.

- The AI technologies that employees can leverage.

- The set of acceptable uses of AI within the organization.

- The process for requesting review of an AI use case.

- AI usage monitoring for risk and compliance.

Section 1: Risk Appetite and AI Usage Principles

In this section, the organization should clarify its stance on AI in the workplace.

Example text for two different companies with different risk tolerances might include:

- “As an innovation-first organization, we thrive on the adoption of new technologies that benefit our customers, our employees, and the business as a whole. We strongly encourage our employees to test new AI technologies and surface novel use cases for the benefit of the organization.”

- “As a company, we believe in the power of technology to drive positive change. We also put our customer safety at the forefront and are committed to not taking unnecessary risk with unproven technology. This means that all potential AI use cases will be thoroughly reviewed and vetted before they are adopted.”

The company should also articulate its AI usage principles, which will serve as a north star for employees. Examples might include a focus on data privacy, transparency, fairness, and ethical considerations.

Below is an example template for key AI usage principles:

Section 2: AI Technologies Employees Can Use

In this section of the AI usage policy, the organization should outline which tools employees are allowed to use for work-related activities.

An organization’s AI or technology group is typically responsible for reviewing which technologies and capabilities will be approved for internal use. Their review should include an assessment of:

- Foundation models like ChatGPT, Gemini, and Claude

- Internal instances of AI created by fine-tuning open-source models

- Vendor AI embedded in an organization’s technology stack that can perform tasks like summarizing meeting notes, reviewing emails, and synthesizing messages on internal platforms (e.g., Zoom, Slack, Gmail)

In many cases, an organization will sign specific contracts with AI providers to ensure internal data is secure and not used for further model training or sale. Organizations are likely to have preferred providers with contracts in place.

Prohibiting AI use for business on personal devices: Many organizations prohibit employees from using AI for business purposes on personal devices such as laptops and cellphones. Where this is the case, the policy should state these requirements clearly.

Section 3: Acceptable AI Uses

This is a core section of any AI usage policy. Section 3 defines which uses are always allowed, which are always prohibited, and which may be allowed with further guidance and review.

In this section, organizations should define guardrails for which AI uses are acceptable and provide examples so that employees clearly understand what these guardrails mean in practice. Below are example guardrails to consider:

Acceptable AI uses:

- Doesn’t involve use of sensitive company or personal data

- Has a limited impact (e.g., synthesizing meeting notes rather than making key business decisions)

- Doesn’t incorporate demographic data about users (or proxies for it), which can create a risk of bias

Prohibited AI uses:

- Model is trained on or uses protected data without consent

- Usage is likely to elevate risk of data leakage (e.g., entering sensitive data into a public interface)

- Usage falls under a category of unacceptable risk that is explicitly prohibited by key regulations like the EU AI Act, or is considered inappropriate under internal ethical guidelines

AI uses that may be acceptable but require further review:

- AI that could influence material decisions for employees or customers (e.g., hiring)

- AI uses that are customer-facing

- AI that includes any use of company confidential or personal data

Organizations should align their acceptable AI usage principles with their risk appetite and core industry/function. Common use cases for an organization – e.g., using AI to summarize meeting notes – should be called out directly.
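The three guardrail tiers above can be encoded as a simple triage check that routes a proposed use case to "acceptable", "needs review", or "prohibited". The sketch below is a minimal illustration that assumes a hypothetical `UseCase` record whose yes/no fields mirror the example guardrails. The field names and the rule ordering are our own assumptions, not part of any standard. A real policy will have more nuanced criteria and will still rely on human judgment.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    ACCEPTABLE = "acceptable"
    NEEDS_REVIEW = "needs review"
    PROHIBITED = "prohibited"


@dataclass
class UseCase:
    """Hypothetical yes/no attributes mirroring the example guardrails."""
    description: str
    uses_sensitive_data: bool = False             # company-confidential or personal data
    protected_data_without_consent: bool = False  # trained on or uses protected data without consent
    sensitive_data_in_public_tool: bool = False   # e.g., sensitive data pasted into a public interface
    explicitly_prohibited: bool = False           # banned by regulation or internal ethics guidelines
    influences_material_decisions: bool = False   # e.g., hiring
    customer_facing: bool = False
    uses_demographic_data: bool = False           # demographic data or proxies for it


def triage(uc: UseCase) -> Tier:
    """Check prohibitions first, then review triggers; anything left is acceptable."""
    if (uc.protected_data_without_consent
            or uc.sensitive_data_in_public_tool
            or uc.explicitly_prohibited):
        return Tier.PROHIBITED
    # Assumption: demographic data is not on the example review list, but it rules a
    # use out of the "acceptable" tier, so this sketch routes it to review.
    if (uc.influences_material_decisions
            or uc.customer_facing
            or uc.uses_sensitive_data
            or uc.uses_demographic_data):
        return Tier.NEEDS_REVIEW
    return Tier.ACCEPTABLE


if __name__ == "__main__":
    print(triage(UseCase("Summarize internal meeting notes")).value)  # acceptable
    print(triage(UseCase("Rank job applicants", uses_sensitive_data=True,
                         influences_material_decisions=True)).value)  # needs review
```

Checking prohibitions before review triggers means a use case that hits both kinds of rule is blocked rather than queued for review, which matches the conservative reading of the guardrails.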

Section 4: Process for Requesting Use Case Review

While there will be some clear approved or prohibited use cases that employees will encounter, many new uses for GenAI will not be clear-cut: they may offer significant benefit to the business, but could also pose meaningful risks.

Organizations should establish a clear process by which employees can suggest new use cases for further review.

These reviews often involve central intake followed by evaluation from experts on AI risks and benefits, typically a committee that includes:

- Business leader(s): provide business context

- Data privacy officers or specialists: evaluate risk of data leakage

- Legal, regulatory, or compliance leaders: determine regulatory scope and ensure compliance with key regulations

- Technology or cyber experts: evaluate cybersecurity risks and vulnerabilities

An organization’s AI usage policy should explain how employees can submit a use case, provide an initial intake form that captures the relevant details, and set out the next steps and expectations for the review process.
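An intake form only needs enough detail to decide which committee members have to weigh in on a given use case. The sketch below assumes a hypothetical `IntakeForm` with illustrative fields and a `required_reviewers` helper. The mapping from answers to reviewer roles is our own assumption; each organization will set its own routing rules.

```python
from dataclasses import dataclass


@dataclass
class IntakeForm:
    """Hypothetical intake fields; each organization will define its own."""
    requester: str
    business_unit: str
    description: str
    tool_name: str
    uses_personal_or_confidential_data: bool = False
    customer_facing: bool = False
    influences_material_decisions: bool = False  # e.g., hiring
    connects_to_internal_systems: bool = False   # e.g., an agent with system access


def required_reviewers(form: IntakeForm) -> list[str]:
    """Map intake answers to committee roles (the routing rules are assumptions)."""
    reviewers = ["business leader"]  # always supplies business context
    if form.uses_personal_or_confidential_data:
        reviewers.append("data privacy")
    if form.customer_facing or form.influences_material_decisions:
        reviewers.append("legal/compliance")
    if form.connects_to_internal_systems:
        reviewers.append("technology/cyber")
    return reviewers


form = IntakeForm(
    requester="j.doe",
    business_unit="HR",
    description="Rank applicants for open roles",
    tool_name="ExampleRanker",  # hypothetical vendor tool
    uses_personal_or_confidential_data=True,
    influences_material_decisions=True,
)
print(required_reviewers(form))  # ['business leader', 'data privacy', 'legal/compliance']
```

Keeping the routing logic in one small function makes it easy to audit and to update as the committee's remit changes.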

In our experience, once an organization sets up this review process, it can receive tens to hundreds of potential AI use cases from employees in the following weeks and months.

The process of collecting relevant information, triaging risk and compliance views, and keeping track of approved and prohibited AI usages can become overwhelming if the process isn’t streamlined. To help keep track of AI usages, many organizations turn to AI governance platforms to help them stay on top of varied use cases and risks.

Section 5: AI Usage and Risk Monitoring

Reviewing and approving an AI use case is usually not the final step in safe AI adoption, especially for AI that is high value but higher risk. Approval often comes with conditions, such as ongoing monitoring or review of outcomes.

For example, a high-risk AI use case in HR might involve an AI tool that helps recruiters rank-order candidates for a role. This can save recruiters and hiring managers significant time and help them reach better outcomes, but it is critical to review a technology like this for performance and bias on an ongoing basis. Once the AI usage has been approved, the organization may require ongoing testing and review of outcomes to ensure that it remains appropriate over time.
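One common way to review a candidate-ranking tool for bias on an ongoing basis is to compare selection rates across demographic groups. The sketch below computes impact ratios (each group's selection rate divided by the selection rate of the most-selected group), the metric reported in NYC LL144 bias audits. It flags groups below 0.8, the EEOC's "four-fifths" rule of thumb. The group labels and counts are made up for illustration. This is a screening heuristic, not a legal determination of bias.

```python
def impact_ratios(selected: dict[str, int], applicants: dict[str, int]) -> dict[str, float]:
    """Each group's selection rate divided by the highest group's selection rate."""
    rates = {g: selected.get(g, 0) / n for g, n in applicants.items() if n > 0}
    best = max(rates.values(), default=0.0)
    if best == 0:
        raise ValueError("no candidates were selected; impact ratios are undefined")
    return {g: r / best for g, r in rates.items()}


def below_four_fifths(ratios: dict[str, float], threshold: float = 0.8) -> list[str]:
    """Groups whose impact ratio falls below the four-fifths rule of thumb."""
    return sorted(g for g, r in ratios.items() if r < threshold)


# Illustrative, made-up counts for a hypothetical review period.
applicants = {"group_a": 200, "group_b": 150}
selected = {"group_a": 60, "group_b": 30}
ratios = impact_ratios(selected, applicants)
print(ratios)                     # group_b: 0.2 / 0.3, roughly 0.67
print(below_four_fifths(ratios))  # ['group_b']
```

Running a check like this on each review cycle's outcomes gives the monitoring conditions attached to an approval a concrete, repeatable test.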

The AI usage and risk monitoring section of an organization’s AI usage policy should outline how employees are expected to engage with and ensure safe use of the AI on an ongoing basis.

Monitoring AI Usage and Flagging Misuse

Misuse of AI can create significant risk for organizations, employees, and customers. This makes it critical for organizations to track AI adoption and ensure that employees are engaging with the new technology safely.

Organizations can monitor for AI misuse in two core ways:

- Blocking employee access to unsanctioned vendor AI tools

- Monitoring prompt logs for sanctioned tools to ensure employees aren’t engaging in any explicit misuse (e.g., use of AI in unethical, prohibited, or illegal contexts)
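Both monitoring approaches above can be sketched in a few lines. The first is an allowlist check on the host of an outbound AI tool request. The second is a pattern scan of prompts from sanctioned tools that flags apparent sensitive data, which is one signal of misuse. This is a minimal illustration under stated assumptions: the domain names and regex patterns are hypothetical placeholders. Real deployments would build on the organization's existing network and data-loss-prevention tooling rather than hand-written patterns.

```python
import re
from urllib.parse import urlparse

# Hypothetical allowlist of sanctioned AI tool hosts; replace with your approved list.
SANCTIONED_AI_HOSTS = {"ai.internal.example.com", "approved-vendor.example.com"}

# Hypothetical patterns suggesting sensitive data in a prompt.
SENSITIVE_PATTERNS = {
    "email address": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "US SSN-like number": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "confidentiality marker": re.compile(r"\b(confidential|internal only)\b", re.IGNORECASE),
}


def is_sanctioned(url: str) -> bool:
    """Allow a request only if its host is on the sanctioned list."""
    host = urlparse(url).hostname or ""
    return host in SANCTIONED_AI_HOSTS


def scan_prompt(prompt: str) -> list[str]:
    """Return the names of any sensitive-data patterns found in a logged prompt."""
    return [name for name, pattern in SENSITIVE_PATTERNS.items() if pattern.search(prompt)]


print(is_sanctioned("https://ai.internal.example.com/chat"))  # True
print(is_sanctioned("https://unknown-ai.example.net/"))       # False
print(scan_prompt("Summarize this CONFIDENTIAL memo for jane@corp.example"))
# ['email address', 'confidentiality marker']
```

Pattern matches like these are best treated as flags for human follow-up under the Code of Conduct process, not as automatic findings of a violation.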

Typically, AI usage policies are connected to an organization’s Code of Conduct, and companies may monitor for compliance in the same way that they monitor other violations of a company’s internal guidelines.

How an AI Governance Platform Can Help

Reviewing employee adoption of AI across an organization can become a substantial effort.

Employees – focused on innovation, productivity, and elevating business outcomes – can generate varied and creative uses for AI. But evaluation and monitoring of high-risk, high-value use cases can quickly become taxing.

AI governance platforms like FairNow can reduce the burden of managing AI risk and staying compliant by:

- Tracking AI adoption across the organization

- Centralizing AI risk assessments and the review process

- Flagging risks and compliance concerns for proposed use cases

- Operationalizing AI governance approval workflows

- Automating and streamlining monitoring of existing AI tools

Looking to simplify AI governance for your organization? Request a free demo.

Frequently Asked Questions

Who should use this template?

This policy template is designed for organizations of all sizes. It can be applied to employees, contractors, and third-party vendors who interact with AI systems or tools in the workplace.

Is it editable?

Yes. The template is fully customizable so you can adapt it to your organization’s specific requirements, industry standards, and risk profile.

What frameworks does it reference?

The template aligns with established AI governance and compliance frameworks, including ISO/IEC 42001, the EU AI Act, and New York City Local Law 144 (NYC LL144).
