Overview

In this article, we provide a comprehensive understanding of the ISO 42001 standard, its key components, implementation considerations, and strategic implications.

We’ll explore how organizations can simultaneously foster innovation and mitigate risks by leveraging ISO 42001 — and why responsible AI management is fast becoming a baseline expectation.

What Is ISO/IEC 42001 and Why Does It Matter?

The recent, rapid explosion in AI’s capabilities has left many companies scrambling to keep up. At the same time, regulators around the world have begun to roll out rules and laws, but so far their efforts have been scattered and varied.

Even as leaders and companies try to remain innovative, deploying AI in high-risk scenarios like employment and healthcare creates valid ethics and safety concerns. To address this, many organizations are turning to ISO 42001—the first international standard for AI management systems—as a blueprint for responsible, risk-based AI governance.

Released in 2023 by the International Organization for Standardization and the International Electrotechnical Commission, ISO/IEC 42001 is an international standard that provides a structured approach to govern, develop, and deploy AI responsibly—across use cases, industries, and risk levels. The standard offers flexibility while promoting consistency, helping organizations manage AI in a way that supports both innovation and accountability.

The standard arrives at a critical juncture. Governments worldwide are considering AI-specific regulations. In the US, with federal AI regulation looking unlikely, a patchwork of state and local laws has emerged: measures such as the Colorado AI Act and New York City Local Law 144 require testing and governance around AI used in high-stakes decision making.

Why ISO/IEC 42001 Is Becoming a Strategic Imperative

ISO 42001 is a voluntary standard, and many leaders find themselves wondering whether adopting it is worth the effort. There are several reasons why it is becoming a strategic imperative for forward-looking leaders in AI.

A strong foundation for AI regulatory compliance

The regulatory environment for AI is fast evolving and extremely complex. The EU AI Act was adopted in 2024. Its prohibitions on specific uses of AI began to apply in February 2025, and other requirements for risk management, documentation, and governance will follow in the coming years.

South Korea, Brazil, the United Kingdom, and many other countries have passed or are advancing rules of their own. The ISO 42001 standard provides a strong framework for AI governance that can dramatically simplify global compliance efforts down the road.

Trust as a competitive differentiator

Unlike ISO 27001, ISO 42001 is relatively new and has not yet been broadly mandated by buyers of AI tools. However, technology leaders incorporating AI in their solutions are finding ISO 42001 to be a competitive differentiator in their sales cycles.

ISO 42001 provides companies a way to demonstrate AI governance maturity in the sales cycle, particularly in regulated industries or procurement-driven environments.

Flexibility across domains

The standard’s flexible, risk-based approach enables organizations of all sizes and industries to build trust in their management of AI. Organizations can intentionally design their own approaches to ensure they are governing their AI efficiently and effectively.

A Look Inside the Standard: How is ISO 42001 Structured?

Like ISO 27001, ISO 42001 is composed of two core sections:

- Body clauses (Clauses 1-10): The basic structure of this first section is almost identical to ISO 27001. The clauses are primarily concerned with ensuring that an organization’s risk management and governance programs are set up to appropriately manage AI risk. This includes practices like setting AI objectives, defining roles, implementing learning and development initiatives, and enabling continuous improvement.

- Annex of reference controls (Annex A and B): Although other ISO standards also have annexes of reference controls, this section’s contents are much more specific to the needs of AI governance. Some of these controls exist to execute the body clauses. However, most of the 38 reference controls focus on required actions, information, or documentation for each AI system that an organization builds or buys. ISO 42001 expects extensive documentation for each AI system, including information about training and usage data, testing plans, implementation specifications, usage instructions, impact assessments, issue logging, and remediation.

Clauses 1-3: Scope, References, Definitions

These upfront sections, similar to other ISO management system standards, lay out the scope, references, and definitions relevant for the rest of the document.

Clause 4: Context of the Organization

Clause 4 describes how the organization should define and document the context and the scope of its AIMS (AI Management System).

Context includes anything relevant to your decision-making about AI. Does your organization develop AI systems, or just deploy AI acquired from others? Do you resell AI or include it in your products? What functions are or will be stakeholders in AI management, and do structures exist to assist governance? What do external interested parties such as regulators and customers need or expect?

Defining scope involves deciding what’s covered by your AIMS. This is particularly important if an organization wants to receive ISO/IEC 42001 certification: it’s possible for an organization to get certification only for certain systems, such as those that it sells to others. An AIMS can also cover internal-only systems, including those acquired from vendors or third parties.

Clause 5: Leadership

Clause 5 describes expectations of leadership in managing and monitoring AI risk and impacts, including ensuring that leadership demonstrates oversight of AI strategy and resourcing. Leadership must establish and communicate AI policies, and define appropriate roles and responsibilities for the AIMS to achieve goals.

Clause 6: Planning

Clause 6 details a number of planning activities.

The first section describes a set of interrelated processes: conducting an AI risk assessment, enabling and prioritizing AI risk treatment, and devising a process for AI impact assessments that can inform the risk assessments. The relationship between these three steps is somewhat complicated, and the impacts, risks, and treatment cycle is perhaps the single most important component of the entire standard.

Additionally, Clause 6 requires the organization to define a set of AI objectives, both for its risk management and for its general strategy around building, using, and deploying AI in the service of broader strategic goals. Finally, there is an expectation of orderly change management.

Clause 7: Support

Clause 7 considers the organizational support necessary for enacting an AIMS, including sufficient resourcing, defining competencies and ensuring the organization hires or trains individuals with them, spreading awareness of the AIMS and assigning clear responsibility for communication about it, and clear documentation of all of the above.

Clause 8: Operation

Clause 8 mirrors Clause 6, stating that an organization must implement controls and processes that will actually enable the activities described there, especially AI risk assessment, risk treatment, and impact assessment. It also requires that documentation be produced and retained about the results of these activities.

Clause 9: Performance Evaluation

Clause 9 focuses on evaluating how well the organization’s AIMS achieves its stated objectives. Monitoring of the program’s overall performance is necessary, in addition to system-level monitoring based on risk and impact assessments.

Requirements for internal audits and management reviews of the AIMS are included in this clause.

Clause 10: Improvement

Clause 10 focuses on the steps that an organization should take to enable ongoing improvement of its AIMS.

In addition to a general commitment to continual improvement, specific processes are required to identify and log any issues or nonconformities, and then take corrective actions when deemed appropriate.

Annex A Controls

While the body clauses largely mirror ISO 27001 and other management standards in structure and content, the controls found in this section are unique to ISO 42001 and AI management systems.

Annex A provides a brief list of reference controls that may be necessary to implement the requirements of the body clauses. Some of them require work at the organization level, such as ensuring that AI policies align with other policies in the organization, or setting up a channel for reporting concerns about AI use and misuse.

However, the majority of the controls in this Annex may actually need to be implemented at the application or system level. Activities such as documenting the data resources in an AI system, or devising and implementing appropriate AI system operation and monitoring, must adapt to the needs of a particular system.

These Annex controls cover the full lifecycle, from documenting initial requirements through providing necessary instructions and support for end users after deployment.

Annex B Implementation Guidance

A necessary companion to Annex A, this section breaks down each control listed above and provides more detailed implementation guidance. This guidance includes potential content to consider when satisfying each control.

For several key controls, notably impact assessment, considerable detail is provided about expected and possible content to be covered. Organizations will need to carefully review this text, alongside their own needs and other governance obligations, to build processes that fulfill ISO’s requirements while still being appropriate for their own maturity level.

Many organizations will find that the structure and organization-level controls in ISO 42001’s body clauses parallel or expand existing management programs, such as those for information security or data governance.

However, the Annex controls are very distinct, introducing novel processes and expectations that vary depending on the nature of the AI systems your organization builds or uses. The more individual AI systems that an organization builds or uses, the more complex ISO 42001 implementation becomes.

What Core Concepts Does ISO 42001 Introduce?

ISO 42001 introduces several core concepts that distinguish AI governance from traditional IT management and infosec frameworks like SOC 2 or ISO 27001. These concepts relate to the unique challenges of managing artificial intelligence, and provide a foundation to continuously govern the technology.

Risk management

The central focus of the standard is identifying and managing the risks and impacts posed by AI. The two most significant factors when thinking about the risks posed by an AI system are the data/system design, and the use cases for the system.

When thinking about risks from data and design, organizations must consider the full life cycle of a system, including training data, any data input at the time of use, and the output data. Weighing the risks from how the system is used is further complicated by the fact that organizations have to assess both the intended purpose or use of the system and any possible misuses.

How AI systems learn is also evolving, which adds a layer of complexity beyond that of conventional software. In predictive models, the learning methods are typically defined by AI developers.

However, with the growing emergence of agentic AI, the reinforcement learning paradigm has taken center stage. In this approach, agents are not merely trained on data; instead, they actively interact with their environments, make decisions, and adapt based on feedback.

What are some of the AI risks flagged by ISO 42001?

ISO 42001 requires organizations to identify, assess and mitigate AI risks. These include:

- Bias

- Limitations in explainability

- Performance and robustness

- Data privacy

- Broader societal impacts

ISO 42001 emphasizes that AI risks are not purely technical in nature and must consider ethical factors, legal compliance, and social acceptability.

ISO 42001 advocates for a risk-based approach to AI governance.

The standard adopts a proportional approach where the depth of governance corresponds to the potential harm of the AI system. This means the organization’s (finite) capacity is spent addressing risk effectively.

Organizations should evaluate both the severity and likelihood of risks, considering factors like:

- How the system impacts individuals, population subgroups, and society as a whole

- The criticality of decisions it influences

- The vulnerability of affected populations

This risk assessment should be sufficiently deep and nuanced to capture the potential for harm that an AI system poses. The results of this assessment should drive subsequent management decisions about development processes, testing requirements, human oversight needs, and documentation practices.
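
As one way to make the proportional approach concrete, the sketch below scores each identified risk on severity and likelihood and maps the worst score to a governance tier. ISO 42001 does not prescribe a scoring formula: the 1-5 scales, the tier thresholds, and the names `RiskRating` and `governance_tier` are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskRating:
    """Hypothetical rating for one identified risk of one AI system."""
    description: str
    severity: int    # 1 (negligible harm) .. 5 (severe harm), assumed scale
    likelihood: int  # 1 (rare) .. 5 (almost certain), assumed scale

    def __post_init__(self) -> None:
        for name in ("severity", "likelihood"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        return self.severity * self.likelihood


def governance_tier(ratings: list[RiskRating]) -> str:
    """Map the worst risk score to a tier; the thresholds are assumptions."""
    if not ratings:
        raise ValueError("at least one risk rating is required")
    worst = max(r.score for r in ratings)
    if worst >= 15:
        return "high"
    if worst >= 6:
        return "medium"
    return "low"


hiring_tool = [
    RiskRating("Biased ranking of candidates", severity=5, likelihood=3),
    RiskRating("Unexplained rejection decisions", severity=3, likelihood=4),
]
writing_aid = [RiskRating("Minor factual errors in drafts", severity=1, likelihood=4)]

print(governance_tier(hiring_tool))  # high
print(governance_tier(writing_aid))  # low
```

Taking the worst score rather than an average is a deliberate choice in this sketch: a single severe risk should not be diluted by several minor ones.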

Impact assessment

Stakeholder engagement is a critical component of ISO 42001, recognizing that AI systems can affect diverse groups with varying perspectives and priorities.

The main mechanism for this is the impact assessment. In an impact assessment, the organization must:

- Identify impacted stakeholders,

- Understand their concerns, and

- Ensure their input is factored into AI governance decisions.

For high-impact AI applications, this may involve formal impact assessments that systematically evaluate potential effects on different stakeholder groups, considering dimensions like fairness, safety, and privacy.

Transparency

Transparency and traceability requirements address the “black box” nature of many AI systems.

To ensure transparency, ISO 42001 expects appropriate documentation throughout the AI lifecycle. This documentation must cover everything from design decisions and data provenance to testing procedures and performance limitations.

While recognizing that complete technical transparency may not always be feasible or desirable, the standard requires organizations to provide meaningful information about how AI systems operate, the logic behind their decisions, and their limitations. This documentation serves multiple purposes, from enabling internal governance to supporting external communications with users, auditors, and regulators.

Accountability

Human oversight and accountability mechanisms ensure that AI remains under appropriate human control.

ISO 42001 requires organizations to establish clear roles and responsibilities for AI governance, including executive accountability for AI impacts. The standard emphasizes that organizations must maintain meaningful human oversight proportional to the risk level, which may range from periodic reviews of low-risk applications to continuous supervision of high-risk systems.

This human-in-the-loop approach ensures that AI remains a tool that supports human decision-making rather than a replacement for human judgment in consequential situations.

Testing and monitoring

AI must be tested, both before and after deployment, to ensure it is safe and fit for purpose.

ISO 42001 recognizes that AI systems often demonstrate different behaviors in deployment than in development environments, requiring continuous monitoring throughout their lifecycle. Organizations must establish systematic testing protocols that evaluate not only technical performance but also broader impacts like fairness, safety, and alignment with intended purposes.

Testing requirements should scale with risk, meaning high-impact AI receives more rigorous validation and scrutiny than low-impact models. The organization’s monitoring must track AI performance, detect shifts in data distributions, and identify emerging risks.

This serves to create a feedback loop between AI operations and governance decisions, allowing for issues to be detected and remediated quickly.
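
One common way to detect a shift in a data distribution is the Population Stability Index (PSI), which compares binned frequencies of a feature at training time against recent production data. The standard does not name a metric; PSI, the bin count, and the 0.2 alert threshold in the sketch below are assumptions chosen for illustration.

```python
import math


def psi(baseline: list[float], recent: list[float], bins: int = 10) -> float:
    """Population Stability Index between two samples of one numeric feature."""
    if not baseline or not recent:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # avoid zero width when baseline is constant

    def proportions(sample: list[float]) -> list[float]:
        counts = [0] * bins
        for x in sample:
            # Clamp values outside the baseline range into the edge bins.
            idx = min(max(int((x - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor keeps log() defined for empty bins.
        return [max(c / len(sample), 1e-6) for c in counts]

    p, q = proportions(baseline), proportions(recent)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


baseline = [i / 100 for i in range(100)]        # roughly uniform on [0, 1)
same = [i / 100 for i in range(100)]            # identical to the baseline
shifted = [0.5 + i / 200 for i in range(100)]   # mass moved to the upper half

ALERT_THRESHOLD = 0.2  # assumed rule of thumb, not taken from ISO 42001

print(psi(baseline, same) < ALERT_THRESHOLD)     # True
print(psi(baseline, shifted) > ALERT_THRESHOLD)  # True
```

In a governance workflow, a PSI value above the chosen threshold would typically open an issue for review rather than trigger an automatic change to the system.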

How To Get Started on ISO 42001: Upfront Preparation

ISO 42001 introduces a structured approach to AI governance by delineating controls at both the organizational and system levels.

This dual-layered framework ensures that AI governance is comprehensive and adaptable to specific organizational contexts, enabling each AI system to be managed according to its unique risk profile and operational requirements, thereby enhancing compliance and mitigating potential vulnerabilities.

Establishing and fully implementing an AI governance framework under ISO/IEC 42001 involves several phases of work that organizations should approach methodically.

1. Organization-Level ISO 42001 Controls

Organizations should begin preparing for ISO 42001 by designing and implementing their organization-level processes first. These are used to guide all of the activities that are required for each individual AI system.

Assess current risk management and governance processes

Before defining and implementing new processes for AI governance, the organization should conduct a comprehensive assessment of its current AI practices and context, and of where they diverge from ISO’s requirements.

The exercise of establishing the organization’s AI context could take many forms, such as a SWOT (strengths, weaknesses, opportunities, threats) analysis. ISO also specifies that organizations should map out their stakeholder expectations, including customers, employees, and third parties. This baseline assessment helps identify what gaps the organization must close to reach compliance.

Pro Tip: Conduct a Comprehensive Gap Analysis

Before initiating ISO 42001 implementation, perform a thorough gap analysis to assess your current AI practices against the standard’s requirements. This evaluation will help identify areas needing enhancement and inform your implementation strategy.
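
A gap analysis can start as a simple record, for each body clause, of whether a documented process exists and whether there is evidence it is followed. The sketch below assumes that two-flag model and uses the clause titles listed earlier in this article; the `ClauseStatus` structure and the sample self-assessment are hypothetical.

```python
from dataclasses import dataclass

# Titles of the requirement-bearing body clauses (Clauses 4-10).
CLAUSES = {
    4: "Context of the Organization",
    5: "Leadership",
    6: "Planning",
    7: "Support",
    8: "Operation",
    9: "Performance Evaluation",
    10: "Improvement",
}


@dataclass
class ClauseStatus:
    documented: bool = False  # a written process or policy exists
    evidenced: bool = False   # records show the process is followed


def find_gaps(assessment: dict[int, ClauseStatus]) -> list[str]:
    """Return a readable gap for every clause that is not fully met."""
    gaps = []
    for number, title in CLAUSES.items():
        # A clause missing from the assessment counts as undocumented.
        status = assessment.get(number, ClauseStatus())
        if not status.documented:
            gaps.append(f"Clause {number} ({title}): no documented process")
        elif not status.evidenced:
            gaps.append(f"Clause {number} ({title}): no evidence of operation")
    return gaps


# Hypothetical self-assessment; Clause 8 has not been assessed at all.
current = {
    4: ClauseStatus(documented=True, evidenced=True),
    5: ClauseStatus(documented=True, evidenced=True),
    6: ClauseStatus(documented=True),
    7: ClauseStatus(documented=True, evidenced=True),
    9: ClauseStatus(documented=True, evidenced=True),
    10: ClauseStatus(documented=True, evidenced=True),
}

for gap in find_gaps(current):
    print(gap)
```

The two flags loosely mirror the distinction a certification audit draws between system design and operational effectiveness, so the same record can later help with audit preparation.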

Define the scope of AI Management System (AIMS)

With this understanding, organizations can then define the scope of their AI Management System, and begin work on organization-level controls such as writing an AI policy, conducting an overall AI risk assessment, and devising repeatable processes for key activities such as impact assessments.

They’ll need to determine which AI activities, applications, and business units will be covered. Buy-in from senior leadership is essential: they will need to explicitly accept accountability, and define the organization’s objectives and risk tolerances around responsible AI.

The rigor of implementing ISO 42001 will vary based on the organization’s size, industry and context. Large companies may need more formal governance structures with dedicated committees, specialized roles, and comprehensive documentation. Such organizations will benefit from integrating AI governance with existing governance processes for privacy and/or information security.

On the other hand, smaller organizations can adopt more agile approaches with streamlined documentation and combined roles. They might initially focus on high-risk applications before expanding the scope of governance further.

2. AI System-Level ISO 42001 Controls

Once the organization-level controls are in place, the organization can start to operationalize its commitments for each of its AI systems.

Inventory all AI systems

The first step is to build a complete inventory of all AI systems in use by the organization, whether developed in-house or purchased from third-party vendors.

This task is made more challenging by the fact that software vendors are now constantly deploying new AI tools and features within existing systems, and employees across your value chain will have to be educated on their new roles.

Compile and create AI system documentation

Once inventoried, your teams will begin to assemble application or system-level documentation. System documentation will cover design and technical specs, training data, and impact assessments of intended use cases and foreseeable misuses.

Once a system is deployed, organizations must also document how they are monitoring AI performance, measuring compliance, and conducting any testing. Your organization will set basic requirements for these controls, but these requirements will vary by risk level.

The three major factors to consider in system risk are its training data and data inputs, the outputs of the system, and its use cases. Productivity tools such as writing aids or coding assistants may have relatively slim requirements. Documentation of internally-developed tools for high-risk use cases such as HR evaluations will have much more extensive needs.
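
The inventory and its documentation requirements can be sketched as a small data model: each system records where it came from and its risk tier, and the tier determines which documents are expected. The tier names and document lists below are assumptions drawn loosely from the items mentioned above, not a checklist from the standard.

```python
from dataclasses import dataclass, field

# Assumed baseline: every system needs these, regardless of risk.
BASE_DOCS = {"usage instructions", "data sources"}
# Assumed extras that grow with the risk tier.
EXTRA_DOCS = {
    "low": set(),
    "medium": {"impact assessment", "monitoring plan"},
    "high": {"impact assessment", "monitoring plan", "test plan", "design specs"},
}


@dataclass
class AISystem:
    name: str
    source: str     # "in-house" or "vendor"
    risk_tier: str  # "low", "medium", or "high"
    documents: set[str] = field(default_factory=set)

    def missing_documents(self) -> set[str]:
        """Documents required for this tier that are not yet on file."""
        if self.risk_tier not in EXTRA_DOCS:
            raise ValueError(f"unknown risk tier: {self.risk_tier!r}")
        required = BASE_DOCS | EXTRA_DOCS[self.risk_tier]
        return required - self.documents


inventory = [
    AISystem("Coding assistant", "vendor", "low",
             {"usage instructions", "data sources"}),
    AISystem("HR evaluation model", "in-house", "high",
             {"usage instructions", "data sources", "impact assessment"}),
]

for system in inventory:
    print(system.name, sorted(system.missing_documents()))
# Coding assistant []
# HR evaluation model ['design specs', 'monitoring plan', 'test plan']
```

Keeping the requirements in one table per tier means that a change in organizational policy updates the expectations for every system at once.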

ISO 42001 Certification Process: From Pre-Audit to Compliance

An ISO 42001 certification represents a formal attestation that an organization’s AI Management System conforms to the standard’s requirements. This certification process typically involves engaging an accredited third-party auditor to conduct a thorough assessment of the organization’s governance structures, policies, procedures, and evidence of their implementation.

The certification audit evaluates both system design (whether appropriate processes exist) and operational effectiveness (whether processes are followed in practice).

1. Conduct a Pre-Audit

Certification begins with an initial assessment (or “pre-audit”) to determine the scope of the process and plan accordingly, followed by a formal two-stage audit.

2. Kick off a Formal ISO 42001 Audit

Stage one examines documentation and system design, while stage two evaluates operational implementation through interviews, observation, and evidence review.

Successfully certified organizations receive a certificate valid for three years, with surveillance audits conducted periodically to verify ongoing compliance.

Selecting an ISO 42001 auditor

When selecting an auditor, companies have many options. Auditors must themselves be approved by national accreditation bodies that verify their competence and impartiality.

As José Manuel Mateu de Ros, CEO of Zertia, highlights, “Certifiers must be backed by top-tier bodies like ANAB. Their deep technical reviews and ongoing audits ensure credibility — it’s no coincidence that regulators, partners, and Fortune 500 clients specifically look for the ANAB stamp.”

Organizations seeking certification should select accredited certification bodies with relevant industry experience and AI expertise.

Implications of certification

Externally, certification signals to customers, partners, and regulators that an organization takes AI governance seriously, and meets internationally recognized standards for responsible AI management.

This can provide a competitive advantage, facilitate business relationships where AI trust is crucial, and potentially streamline regulatory compliance efforts. Some organizations may eventually require ISO 42001 certification from their AI vendors and partners as a baseline qualification for business relationships.

Internally, the certification process drives organizational discipline around AI governance, creating accountability for implementing and maintaining robust practices. The external assessment, led by experienced auditors, helps organizations identify blind spots and improvement opportunities. Moreover, the ongoing surveillance audit requirement ensures that AI governance remains a priority rather than a one-time initiative.

Pro Tips and Common ISO 42001 Implementation Pitfalls

In our experience, common implementation challenges include data governance complexities, particularly regarding data quality, provenance, and privacy; documentation burdens that must balance compliance needs against practicality; and ensuring vendors who provide AI are assessed according to the organization’s AI governance policies.

Organizations that adopt ISO 42001 successfully do so with staged approaches, templates, and tools that simplify compliance. They set clear priorities based on risk, and develop AI governance capabilities across the organization through training and context sharing.

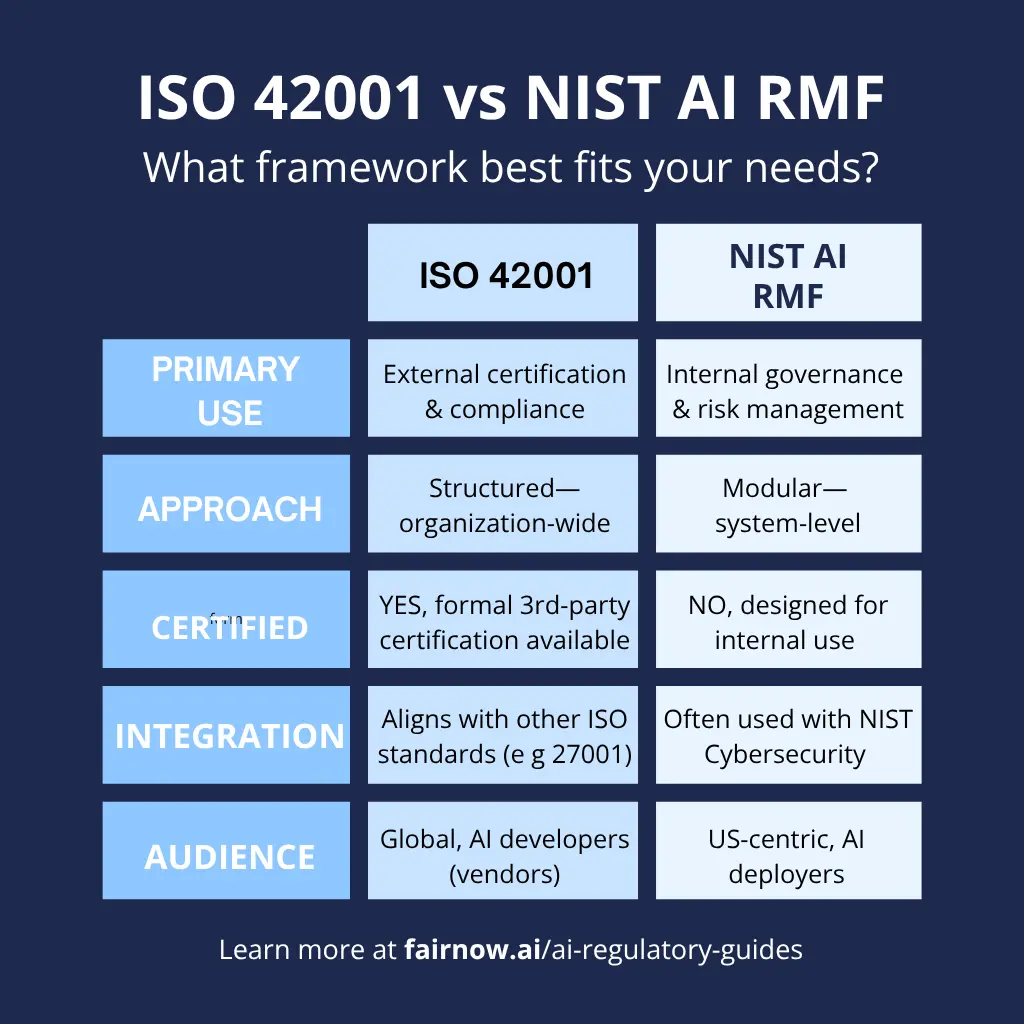

Comparing ISO 42001 with NIST AI Risk Management Framework (RMF)

The AI and data governance landscape features numerous frameworks and standards that organizations must navigate. ISO 42001’s focus on defining a complete AI management system complements other frameworks well.

What is NIST AI RMF?

The NIST AI Risk Management Framework focuses primarily on helping organizations to better understand the unique risks posed by AI systems beyond previous generations of software.

While the ISO is an independent non-governmental organization, the National Institute of Standards and Technology (NIST) is an agency of the U.S. government’s Department of Commerce. However, guidance from NIST can be useful to organizations around the world regardless of whether or not they do business in the United States.

The Framework contains two parts, plus extensive additional resources to aid in implementation. Part 1 describes the specific challenges of AI risk management, and defines a set of core characteristics that NIST argues are a requirement for all trustworthy AI systems.

Part 2 of the Framework, the Core, lays out a series of goals for organizations that will ensure AI systems conform to those characteristics. The Core is divided into four functions (Govern, Map, Measure, and Manage), each of which has numerous categories and subcategories.

As a supplement to the NIST AI RMF, there is the Playbook, which NIST specifies is “neither a checklist nor set of steps to be followed in its entirety.” Instead, the Playbook provides more detail on the categories in the RMF, laying out nearly 150 pages of extra context, possible action items, optional documentation, and references.

How do the NIST AI RMF and ISO 42001 compare? How should an organization choose between them?

Both ISO 42001 and the NIST AI RMF share core similarities in purpose and structure. Both are voluntary standards, flexible enough for adoption by organizations operating in any industry or geography.

That flexibility is a double-edged sword: both standards define valuable basic principles, but many readers may need to seek out more guidance from other sources about AI management challenges specific to their industry or maturity level.

Certification vs. Guideline

For many organizations, the most significant distinction between the two frameworks is simple: it’s possible to undergo an audit and receive ISO/IEC 42001 certification, and the NIST AI RMF doesn’t offer that option.

Whether or not having an audit-certified AI management program is worth the extra time and cost will depend on a few factors. If your organization has already received certification for other ISO standards, the process will be more familiar and straightforward.

While ISO certifications are not as common in the United States, they’re extremely recognizable in other markets around the world. For that reason, certification can serve as a major differentiator for companies operating internationally, especially for organizations offering B2B services and software.

Pro Tip: Ask individuals involved in your sales and contracting process what questions about AI risk and governance are coming up. Have potential customers mentioned ISO 42001, or added AI-specific questions to RFPs or procurement surveys? Are they beginning to ask for outside validation of your governance?

Governance vs. System Risk Management

Another factor to consider when weighing the two frameworks is what your organization needs more right now. Are you trying to stand up a comprehensive AI management program, covering not just risks but overall context and strategy? Or are you mostly concerned with managing and mitigating urgent risks from a handful of systems or use cases?

Organizations with more robust governance processes already in place to cover other risks, or those that know that their AI risks are substantial and in need of a full management approach, may be more drawn to the broader outline in ISO 42001. Teams that are looking for risk management options and strategies for a smaller number of higher risk systems, or simply need a place to start, might benefit from NIST’s more flexible, system-level approach.

ISO 42001 places much greater emphasis on the generation of specific documentation, especially at the organization level. Smaller organizations with less-robust governance systems in other domains may find they have to do more “set up” to reach ISO 42001 compliance.

Pro Tip: Reach out to risk, compliance, legal, or other governance partners. Is AI risk management on their radars currently? Are investors or board members asking about it? Are they looking for a process to consider strategy and overall AI investment?

High-Level Requirements vs. System-Level Options

The NIST AI RMF, taken together with its Playbook, is over three times longer than ISO 42001.

That’s not because the NIST authors were more verbose, or even more demanding. In general, for each goal listed in the Core’s categories and subcategories, NIST aims to provide a wealth of options for organizations to achieve the stated objectives, customized to each system’s needs and risks.

The wide and evolving nature of AI systems means that, necessarily, most options presented will not apply to any single system. For that reason, the NIST AI RMF Playbook is an excellent resource for individual teams looking for specific options to address the challenges of specific systems – an AI risk management buffet.

By contrast, Annex A of ISO/IEC 42001 contains about half as many individual items as NIST’s Core, and its guidance is much more sparing.

In general, ISO’s approach is to lay out what it deems necessary in broad strokes, providing less flexibility from what is in the text but leaving the specifics of implementation up to your organization.

Pro Tip: Determine how much your organization’s needs and culture would benefit from each approach. Would you favor starting with high-level organizational strategy and only then working on system implementation? Or should you build a governance program up from individual systems, with AI developers and risk management teams collaborating on which options are best for each situation?

Pathway Towards The EU AI Act

The EU AI Act takes a regulatory approach, establishing legal requirements for AI systems based on risk categories.

ISO 42001 potentially offers a pathway for organizations to demonstrate compliance with various aspects of the EU AI Act, particularly its requirements for risk management, documentation, and human oversight. While alignment is not perfect—the EU legislation contains more specific requirements for certain high-risk applications—organizations implementing ISO 42001 will build fundamental capabilities that support easier regulatory compliance as the EU AI Act is rolled out over the coming years.

ISO 42001 focuses on AI management systems, but it is only part of a library of AI-related standards released by the International Organization for Standardization. ISO/IEC 23894 (AI Risk Management) complements 42001 by offering deeper guidance on the assessment and mitigation of AI risk. Organizations implementing ISO 42001 often find 23894 a valuable companion for developing more sophisticated risk management practices, especially for more technically complex systems.

Beyond the ISO 42001 Certification: Implications and Ongoing AI System Management

Adopting ISO 42001 as a framework for an organization’s AI management system carries significant strategic implications beyond technical compliance. Organizations that thoughtfully implement the standard can leverage it to achieve broader business objectives and competitive advantages.

ISO 42001 provides a structured pathway for AI governance maturity, enabling organizations to evolve from ad hoc approaches to systematic practices. The standard’s emphasis on continuous improvement encourages progressive enhancement of governance capabilities, allowing organizations to start with focused implementation around high-risk applications before expanding to enterprise-wide coverage. This maturity journey aligns with broader digital transformation initiatives by ensuring that technological innovation occurs within appropriate governance guardrails.

Once certification is complete, organizations are expected to keep operationalizing and maintaining their AI governance standards and processes. Doing so sustains oversight and preserves trust among customers, stakeholders, and impacted populations.

Pro Tip: Treat Governance as an Ongoing Process

View AI governance not as a one-time project but as an evolving practice. Establish workflows and documentation processes that integrate oversight, testing, and continuous improvement into daily operations.

ISO 42001: A Strategic Imperative for Responsible AI Governance

ISO 42001 marks a significant milestone in the evolution of AI governance, providing organizations with a structured framework to manage the unique challenges of artificial intelligence. As this article has explored, the standard offers comprehensive guidance on establishing governance structures, managing AI-specific risks, ensuring transparency and accountability, and demonstrating responsible practices to stakeholders.

For business executives and professionals working with AI, ISO 42001 represents both an opportunity and an imperative. The standard provides a blueprint for building organizational capabilities that will become increasingly crucial as AI applications grow more powerful and pervasive. By implementing robust governance practices now, organizations can position themselves for a future in which responsible AI management is a baseline expectation.

The FairNow Advantage for ISO 42001 Certification

FairNow’s comprehensive AI governance solution for ISO 42001 compliance streamlines the entire process, automating the repetitive documentation and audit prep required for ISO 42001 Certification. Our software provides the intelligent guidance and centralized oversight you need to build a system that not only meets the standard but also drives trust and responsible AI innovation.

Explore what an AI governance platform offers you. Learn more: https://fairnow.ai/platform/

The time to establish a robust AI risk management practice, anchored by AI compliance software, is now, before regulatory requirements or marketplace expectations make it mandatory.

ISO 42001 key facts at a glance:

What is the ISO AI standard?

ISO 42001 is an international standard for building and maintaining an Artificial Intelligence Management System (AIMS).

Why adopt ISO 42001?

Effective AI governance helps organizations build trust in their AI systems and stay compliant with new global AI regulations. Because the standard is relatively new (it was released in 2023), ISO 42001 certification is also a way for AI-focused companies to differentiate themselves on trust.

What do the ISO 42001 controls include?

ISO AI standard requirements focus on an organization-level program as well as specific tasks for each AI system. Requirements include risk management and AI system inventorying, as well as testing, monitoring, incident reporting, and impact assessments.

How does ISO 42001 differ from ISO 27001?

While both ISO standards require organization-level management programs, 27001 focuses on information security while 42001 focuses on AI system management.

How does ISO 42001 differ from the NIST AI RMF?

While the two frameworks overlap substantially, since both focus on governing AI, there are also key differences. NIST is a US federal agency, while ISO is an international body, so ISO 42001 is typically considered more global in focus. Only ISO 42001 supports formal third-party certification. And from a content perspective, ISO spends more time on organization-level system management, while NIST tends to go deeper on guidance for testing, monitoring, and managing the technical aspects of each AI system.

How do I get ISO 42001 certified?

The first step is to purchase a copy of the standard from ISO. You’ll need to implement and evidence the AIMS requirements in the main body of the standard as well as the controls in the Annex before moving to the external certification step. Benchmarks suggest this process, end to end, can take a year or more. An AI compliance platform can speed up this work significantly and help you successfully prepare for audit.

Keep Learning