What Is AI Governance: A Practical Guide for Risk, Compliance, and Trust in the AI Age

AI systems are being integrated across organizations — in decision-making, operations, and customer-facing tools. The benefits are real. So are the risks.

That’s where AI governance comes in. AI governance is a practical set of processes designed to make sure AI is being used responsibly, stays compliant with law and policy, and can be trusted to work as expected.

Still, AI governance is new. It’s often confused with other disciplines like data governance, MLOps, or InfoSec. That confusion can lead to gaps in oversight, or a false sense of confidence.

This guide is meant for teams building or deploying AI — whether you’re early in your journey or starting to formalize your program. It’s written by people who’ve worked in risk, compliance, and AI development, and it lays out what matters and what doesn’t.

What Is AI Governance — and Why Does It Matter?

AI governance refers to the set of policies, processes, roles, and tools that help organizations manage the risks of AI — and ensure its responsible use.

That means:

- Knowing what AI you’re using and how you are using it

- Understanding where the risks are (legal, operational, reputational, or otherwise) and taking appropriate steps to mitigate them

- Making sure systems are tested and monitored appropriately

- Keeping documentation that shows you’re in control

This isn’t just about protecting against something going wrong. Done well, governance also helps teams move faster — by creating clarity about roles, standards, and acceptable use and providing assurance that AI can be used safely.

Why Care about AI Governance Now?

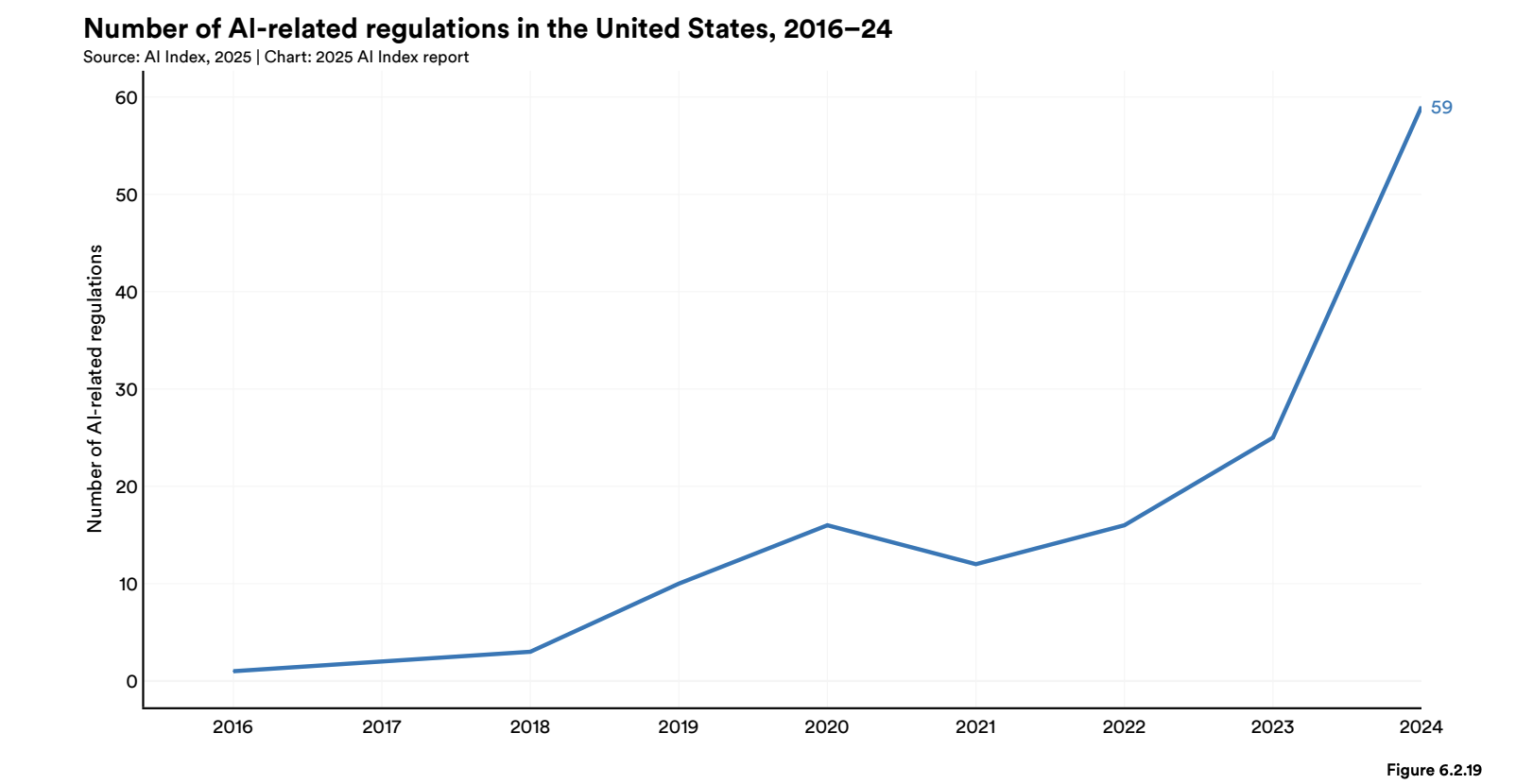

AI regulations are starting to come into effect.

AI-specific laws are being finalized across jurisdictions. The EU AI Act, in particular, creates new requirements for documentation, transparency, and risk management. Many of these requirements will take effect in 2026. That’s not far off.

Source: Stanford Institute for Human-Centered Artificial Intelligence (HAI), 2025 AI Index Report, page 343, at https://hai.stanford.edu/ai-index/2025-ai-index-report

AI failures can be expensive — and hard to spot.

Models can behave in unexpected ways, especially when built on third-party APIs. Many new AI offerings on the market aren’t transparent. Without oversight, teams may not notice until there’s a headline or a compliance issue.

Customers are skeptical of new AI systems.

Buyers and users want to know AI systems are safe. That’s not something you can prove after the fact. You need systems in place to show how your AI works — and how it’s governed.

What Are Some Examples of AI Governance?

AI governance – done well – helps organizations manage risk and compliance obligations for each of their AI systems. Below are some AI governance examples in action and the organizational impacts:

- Prohibiting inappropriate AI use: an employee asks to adopt a new tool that uses existing customer data to conduct surveillance on personal activities. The AI Governance Council rejects the proposal, preventing the organization from using AI for unlawful and potentially damaging activities.

- Monitoring for issues in an existing AI system: an HR technology organization has deployed ongoing bias testing on outcomes of its resume screening tool. Its latest monitoring report suggests that there are significant demographic differences for one of its covered segments. Its developers are notified so that they can recalibrate their model.

- Notifying customers that they’re interacting with AI: an organization using a chatbot for customer service activities generates new language that it uses to inform customers that they are speaking with a chatbot – so their customers aren’t surprised.

- Issue logging and remediation: a deployer of a new AI system conducts performance monitoring and finds that the system hallucinates when asked about specific topic areas. The deployer logs an issue with the developer through the identified channel to ensure that the concerns are addressed.

AI governance processes are designed to help builders, deployers, and impacted populations 1) ensure that the AI they design or use is safe, and 2) monitor it so that they can flag ongoing issues and make adjustments over time.

Without AI governance processes in place, organizations run the risk of catching issues with their AI systems only after harm has already been done.

Who Is Responsible for AI Governance? Who Should Be Involved?

AI governance is inherently cross-functional. But without a clear owner, it often gets lost between teams.

Most organizations appoint a dedicated AI governance lead who often sits in risk, data, engineering, or compliance. This leader will frequently have experience in similar governance domains like infosec or data privacy.

An AI governance lead, sometimes called the Head of AI Governance, is responsible for several activities:

- Setting the governance agenda at the enterprise level

- Defining the policies and procedures required to implement the organization’s AI governance agenda

- Coordinating stakeholders across different teams, e.g., line of business, data science, legal, data privacy, infosec

- Tracking AI risks and monitoring their resolution across a portfolio of vendor and internally developed AI

- Managing the organization’s alignment with key AI governance standards like NIST AI RMF or ISO 42001

- Assessing the effectiveness of the organization’s AI governance, and making improvements as needed

Without clear leadership and defined accountability, AI governance efforts can become fragmented or reactive, leading to inconsistent risk oversight and compliance gaps.

What Does Good AI Governance Look Like?

There’s no single checklist for AI governance. But there are a few activities that show up in nearly every well-run program.

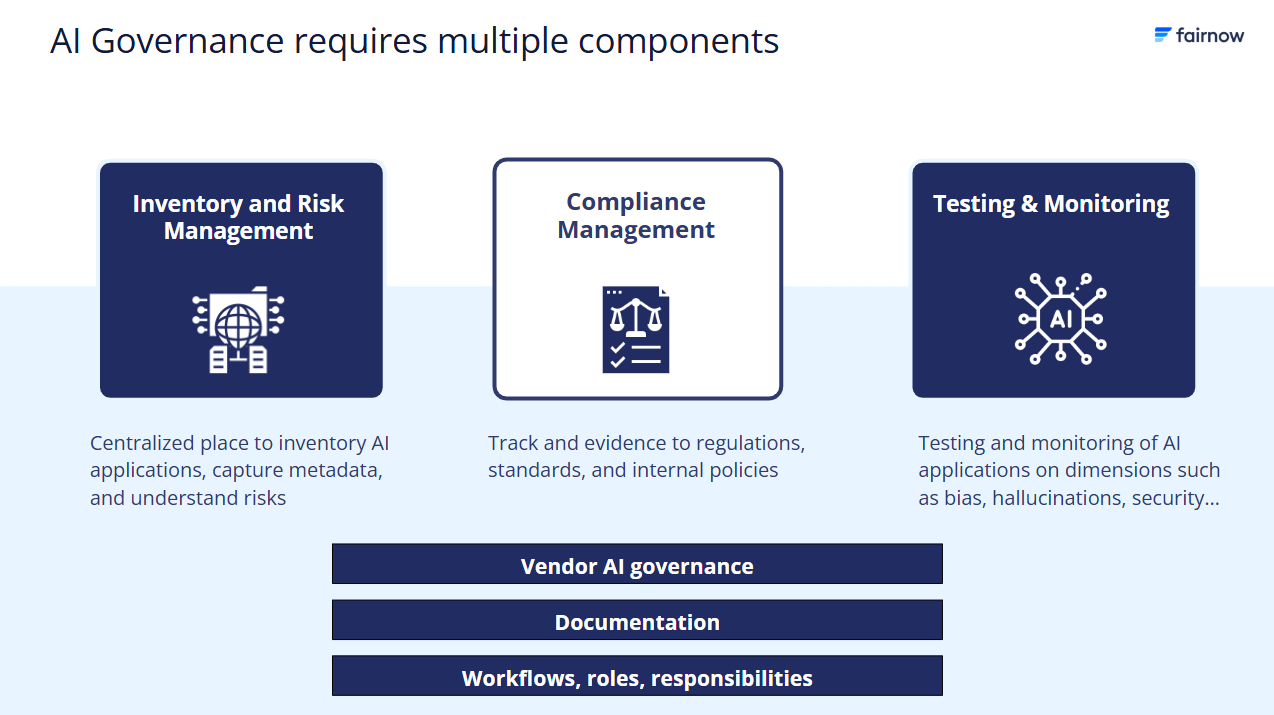

1. AI Inventory

A current list of all AI systems — including those built in-house and those from vendors.

At a minimum, this should track:

- What the system does and what benefits it provides for the organization

- Who is accountable for which parts of the system’s governance

- Whether it’s high-risk (by legal or internal standards)

- Key details (e.g. inputs, outputs, vendors, model type) that explain what the AI does and what the risks are

Without an inventory, you can’t assess exposure or demonstrate control.
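
As a rough illustration, the minimum fields above could be captured in a small record type. This is a minimal sketch, not a prescribed schema: the class name `AISystemRecord`, its field names, the two-level `RiskLevel`, and the sample entries are all hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class RiskLevel(Enum):
    # Hypothetical two-level scale; real programs often use more tiers.
    LOW = "low"
    HIGH = "high"


@dataclass
class AISystemRecord:
    name: str
    purpose: str              # what the system does and the benefit it provides
    accountable_owner: str    # who is accountable for its governance
    risk_level: RiskLevel     # high-risk by legal or internal standards?
    source: str               # "in-house" or "vendor"
    vendor: Optional[str] = None
    model_type: str = "unknown"
    inputs: List[str] = field(default_factory=list)
    outputs: List[str] = field(default_factory=list)


def high_risk_systems(inventory: List[AISystemRecord]) -> List[str]:
    """Return the names of systems flagged as high-risk."""
    return [rec.name for rec in inventory if rec.risk_level is RiskLevel.HIGH]


# Illustrative entries only; these systems and owners are made up.
inventory = [
    AISystemRecord(
        name="resume-screener",
        purpose="Ranks job applications to speed up recruiter review",
        accountable_owner="Head of Talent Acquisition",
        risk_level=RiskLevel.HIGH,
        source="in-house",
        model_type="gradient-boosted classifier",
        inputs=["resume text"],
        outputs=["ranking score"],
    ),
    AISystemRecord(
        name="support-chatbot",
        purpose="Answers routine customer service questions",
        accountable_owner="Customer Support Lead",
        risk_level=RiskLevel.LOW,
        source="vendor",
        vendor="ExampleVendor",
        model_type="large language model",
        inputs=["customer messages"],
        outputs=["chat replies"],
    ),
]

print(high_risk_systems(inventory))
```

Even a spreadsheet with the same columns serves the purpose. What matters is that every system has an entry and an accountable owner.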

2. AI Compliance Management

This has two main parts.

First, it means understanding what governance requirements you must follow. These can come from the law (e.g. the EU AI Act, NYC Local Law 144), from voluntary standards (like ISO 42001 or NIST AI RMF), or from your organization’s own policies.

Then comes the work of actually getting compliant. Governance programs should translate these requirements into real workflows — so that compliance isn’t just a policy, it’s something you can prove.

3. System Monitoring

AI systems don’t just fail at deployment. They change over time — especially if the data shifts or the context evolves.

Good governance means:

- Testing models before they go live (for performance, bias, explainability, etc.)

- Setting up post-deployment monitoring to catch issues and react in time

- Documenting thresholds, alerts, and incident handling
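
To make "thresholds and alerts" concrete, here is a minimal sketch of one possible post-deployment bias check. It compares each group's selection rate to the highest group's rate (an impact ratio) and flags groups that fall below a documented threshold. The function names, the sample counts, and the 0.8 default (loosely modeled on the four-fifths rule of thumb from employment selection analysis) are assumptions for illustration, not a legal test.

```python
from typing import Dict, List, Tuple

# Maps a group label to (number selected, number evaluated).
Outcomes = Dict[str, Tuple[int, int]]


def selection_rates(outcomes: Outcomes) -> Dict[str, float]:
    """Selection rate per group, skipping groups with no evaluations."""
    return {
        group: selected / total
        for group, (selected, total) in outcomes.items()
        if total > 0
    }


def impact_ratios(outcomes: Outcomes) -> Dict[str, float]:
    """Each group's selection rate divided by the highest group's rate."""
    rates = selection_rates(outcomes)
    if not rates:
        return {}
    best = max(rates.values())
    if best == 0:
        return {}  # nobody was selected, so ratios are undefined
    return {group: rate / best for group, rate in rates.items()}


def groups_below_threshold(outcomes: Outcomes, threshold: float = 0.8) -> List[str]:
    """Groups whose impact ratio is under the documented alert threshold."""
    ratios = impact_ratios(outcomes)
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Made-up monitoring snapshot for a screening tool.
outcomes = {
    "group_a": (50, 100),
    "group_b": (30, 100),
    "group_c": (45, 100),
}

flagged = groups_below_threshold(outcomes)
if flagged:
    print("Alert: review needed for", flagged)
```

In this sample, `group_b` has an impact ratio of 0.6 and would be flagged for review. The threshold itself and what happens after an alert are the parts that belong in your documented incident handling.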

4. Transparency Documentation

This is what lets you answer regulators, auditors, customers, or leadership when they ask:

“How does this work?” and “How do you know it’s working safely?”

It includes:

- Risk assessments

- Model documentation (e.g. model cards)

- Logs of decisions and updates

- Signoffs and approvals

Documentation isn’t just about compliance. It helps teams understand and revisit decisions over time.

5. Vendor AI Governance

If you’re using AI from third parties, you can still be held responsible for what happens.

You need:

- A process for vetting AI vendors and use cases

- Requirements in contracts (around documentation, testing, and disclosures)

- Monitoring of how the tool is used internally

Many regulations will hold the deployer accountable — not just the developer.

How AI Governance Differs from Related Domains

It’s common to assume that existing risk, data, or engineering structures already cover AI governance. In reality, those systems often miss the specific risks AI introduces. Here’s how AI governance overlaps — and importantly, differs — from the three most commonly confused domains.

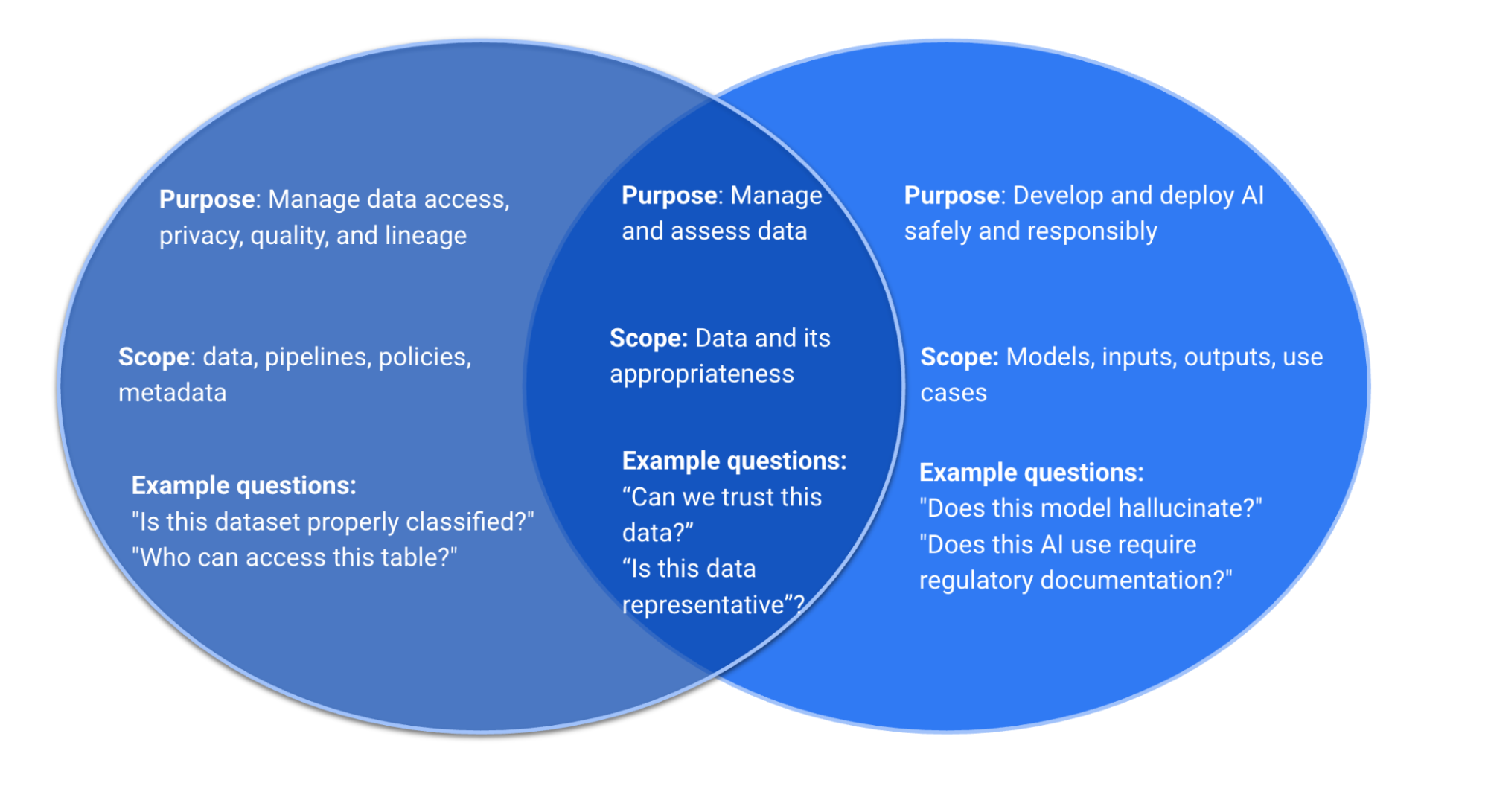

Comparing AI Governance and Data Governance

What’s the Difference Between AI Governance and Data Governance?

AI governance and data governance are both essential to trustworthy AI systems, and they are deeply interconnected. However, while these domains overlap, there are significant differences.

Data governance focuses specifically on data – including lineage, access controls and classification, privacy, and consent. It ensures that personal and sensitive data is secure, fit for purpose, and used in alignment with consent. Core regulation on data governance includes GDPR.

AI governance is focused on the trustworthiness and reliability of AI systems based on usage. It ensures that AI systems operate as intended (for example, they don’t hallucinate), that they don’t perpetuate bias, and that users know they are interacting with AI. Regulations on AI governance include the EU AI Act.

Bad data – or data that is unprotected or used inappropriately – is crucial to investigate as part of AI governance activities. But even if all data governance concerns are managed, there is still additional work involved in managing the risk of AI.

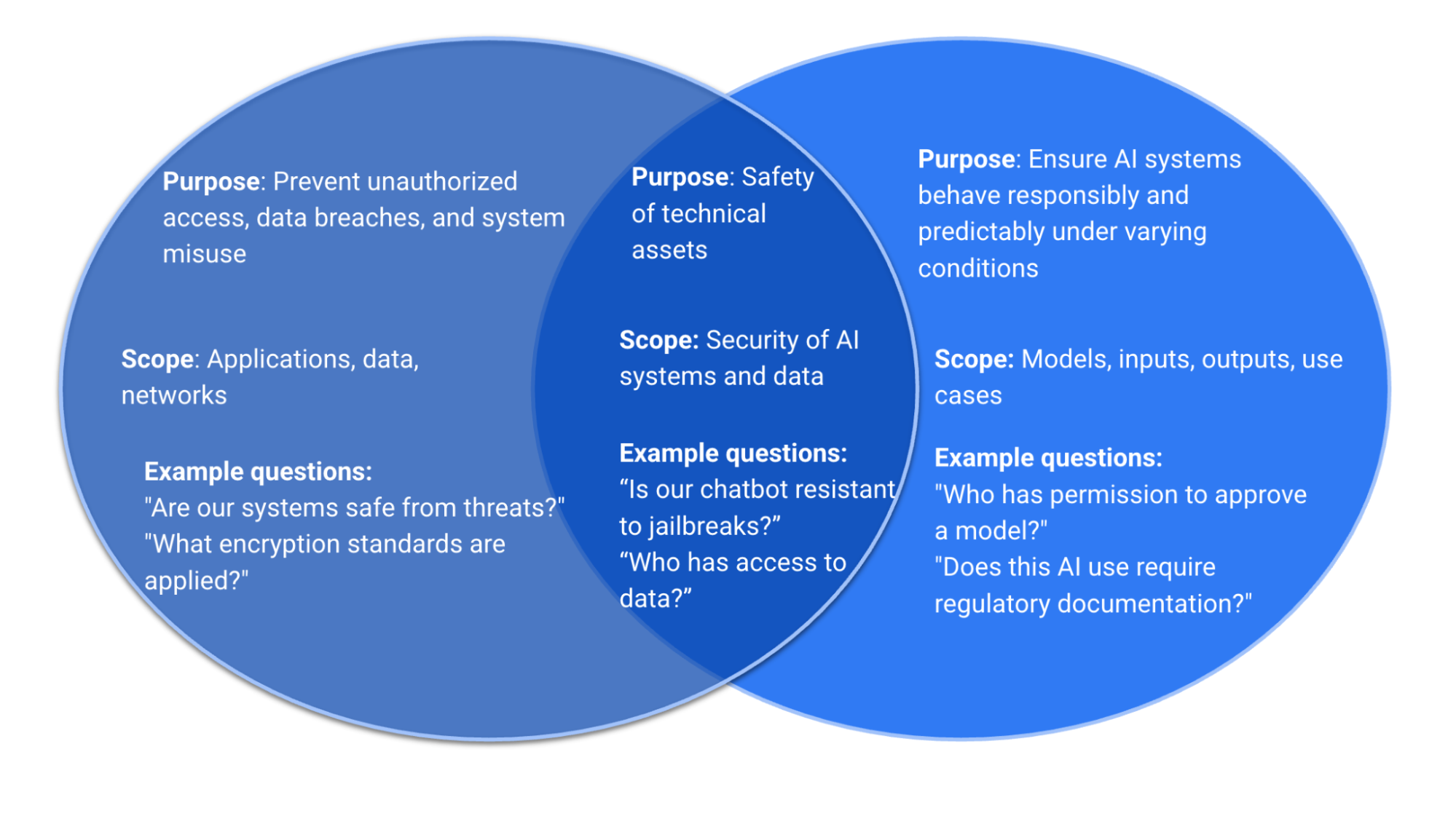

Comparing AI Governance and Information Security (InfoSec)

What’s the Difference Between AI Governance and InfoSec?

InfoSec protects infrastructure. AI governance addresses a different problem: a model may be secure in the cybersecurity sense but still unsafe in how it treats users, replicates bias, or breaches regulation.

A model can be perfectly protected and still be unethical or illegal in how it operates.

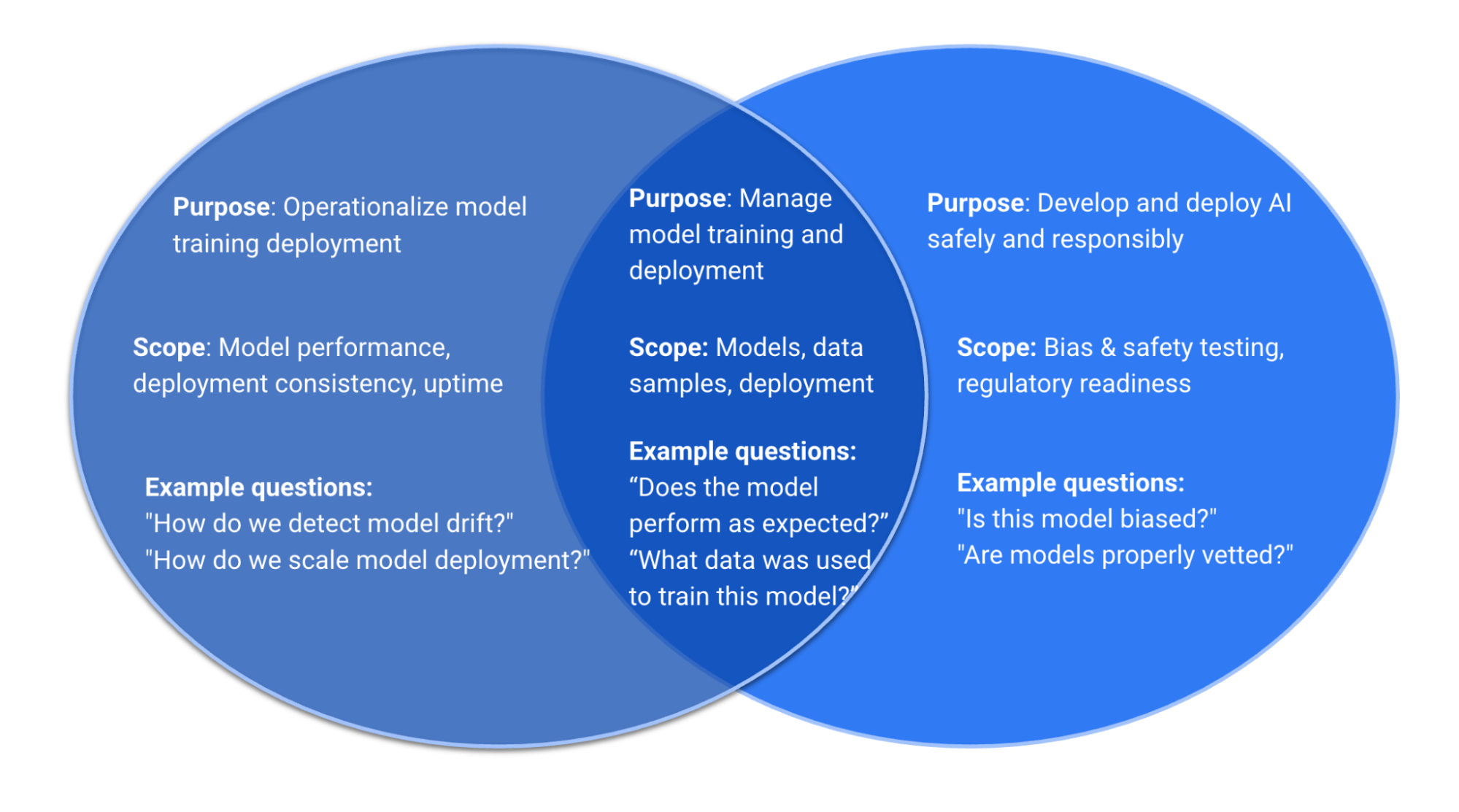

Comparing AI Governance and Machine Learning Ops (MLOps)

What’s the Difference Between AI Governance and MLOps?

MLOps is about building and shipping models. AI governance is about ensuring those models are fit for purpose, legal, and accountable. Most MLOps platforms aren’t equipped to assess regulatory exposure or document human oversight.

How to Match AI Governance Features to Your Organization’s Needs

Choosing the right AI governance tools isn’t one-size-fits-all. It depends on your specific context. Focus on these four main factors to identify what capabilities matter most:

1. AI Use Case Complexity

- Internal tools / low risk: Basic inventory and policy alignment are enough.

- Customer-facing or sensitive: Add monitoring, transparency, and documentation.

- Highly regulated or high impact (e.g., hiring, credit): Full risk assessments, compliance workflows, and audit support are essential.

2. Regulatory Environment

- Heavy regulation (e.g., EU AI Act, healthcare, finance): You need platforms that map controls directly to laws and support conformity assessments.

- Lighter or evolving regulation: Focus on flexible risk frameworks and policy enforcement tools.

3. Organizational Scale & Maturity

- Early-stage / small AI footprint: Tools with templates and guided onboarding to help build processes.

- Growing / multi-team setups: Workflow management, role-based access, and integration with existing systems matter.

- Large / global enterprises: Require advanced compliance tracking, evidence management, and audit reporting capabilities.

4. Source of AI Systems

- Mostly in-house: Emphasize testing, lifecycle monitoring, and developer collaboration.

- Mostly vendor-provided: Focus on vendor risk assessments, contract compliance, and usage monitoring.

- Mixed environments: Look for platforms flexible enough to handle both.

Getting Started With AI Governance—Simplified in 5 Recommendations

You don’t need to solve everything on day one. Start with structure, and grow from there.

- Build a basic AI inventory that tracks sufficient metadata to properly assess the risk profile and benefits of each AI use case

- Appoint a governance lead (with backing from leadership) who is responsible for setting an organization’s AI governance agenda and the policies and procedures implementing it

- Define a few initial policies: acceptable use, high-risk criteria, review points and criteria

- Pilot a simple tool – even if it’s just a spreadsheet or Jira board to start

- Train key teams on what AI governance means, and what role they play

Explore what an AI governance platform offers you. Learn more: https://fairnow.ai/platform/

AI Governance FAQs

What is AI governance?

AI governance is the set of policies, processes, and controls that help an organization manage the risks and responsibilities that come with developing and using AI.

It covers things like who is accountable, how AI systems are monitored, and how to make sure they stay fair, safe, and compliant with relevant laws and standards. Good AI governance helps build trust and keeps AI use aligned with your business goals and values.

Why is AI governance critical now?

AI is becoming more capable and more common in everyday life, which makes it more important than ever to apply proper oversight. New laws like the EU AI Act and Colorado AI Act are making good AI governance mandatory — but it’s not just about compliance. Stakeholders and customers increasingly expect companies to manage AI responsibly and keep it fair, safe, and trustworthy.

What core principles guide AI governance?

Most AI governance frameworks revolve around a few core principles that help organizations use AI responsibly and earn trust.

- Risk management means spotting and reducing potential harms that AI could cause, from safety issues to unfair outcomes.

- Accountability keeps real people responsible for how AI is developed and used, so no one can blame the “black box.”

- Transparency ensures people understand when AI is used, what it does, how it works, and what data it relies on.

- Human oversight is about making sure humans stay in control and can intervene when needed, especially for critical decisions.

- Fairness focuses on testing and monitoring AI for bias or discrimination, so outcomes stay equitable.

Together, these principles help organizations align AI with laws, standards, and public expectations.

What does an effective AI governance structure look like?

An effective AI governance structure lays out clear roles, responsibilities, and processes to make sure AI is used safely and responsibly.

It often includes things like:

- Clear policies that set expectations for how AI should be developed and used.

- Defined roles for who is accountable for different parts of the AI lifecycle.

- Risk management processes to assess and address potential issues like bias or misuse.

- Ongoing monitoring and oversight to catch problems early and keep systems working as intended.

The goal is to balance innovation with safeguards so AI adds value without causing unintended harm.

How should governance scale across different AI projects?

The level of governance provided should scale based on the risk and complexity of each project. Not every system needs the same level of oversight.

Low-risk, low-impact projects might just need basic documentation and simple checks. Higher-risk systems, like AI used in hiring, lending, or healthcare, usually require stricter controls, more detailed testing, and stronger human oversight.

A good approach is to use a risk-based framework that helps teams apply the right level of governance for each use case, so safeguards grow with the potential impact.
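
A risk-based framework can be as simple as a lookup from risk tier to required controls. The sketch below assumes a hypothetical two-tier scheme. The domain list and control names are taken from the examples in this answer and are illustrative, not a complete or authoritative classification.

```python
from typing import Dict, List

# Assumption: domains treated as high-risk, based on the examples above.
HIGH_RISK_DOMAINS = {"hiring", "lending", "healthcare"}

# Assumption: controls per tier; higher tiers build on the lower tier.
CONTROLS_BY_TIER: Dict[str, List[str]] = {
    "low": ["basic documentation", "simple checks"],
    "high": [
        "basic documentation",
        "simple checks",
        "stricter controls",
        "detailed testing",
        "human oversight",
    ],
}


def risk_tier(domain: str) -> str:
    """Classify a use case by its domain (case-insensitive)."""
    return "high" if domain.strip().lower() in HIGH_RISK_DOMAINS else "low"


def required_controls(domain: str) -> List[str]:
    """Look up the controls that apply to a use case's risk tier."""
    return CONTROLS_BY_TIER[risk_tier(domain)]


print(required_controls("Hiring"))
print(required_controls("internal search"))
```

Real frameworks usually consider more than the domain, such as who is affected and how much autonomy the system has, but the principle is the same: the tier determines the safeguards.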

How do organizations identify and manage AI risks?

Organizations typically identify and manage AI risks through a structured, ongoing process.

They start by assessing how the AI will be used, what data it relies on, and what potential impacts it could have: for example, unfair bias, security gaps, or data privacy issues.

From there, they take steps to mitigate and monitor those risks. This can include things like bias testing, human review, clear documentation, and monitoring systems after they’re deployed. Documentation of the entire process is critical.

The goal is to catch problems early and make sure the AI stays reliable, fair, and compliant over time.

What ethical safeguards are required?

Ethical safeguards help ensure AI is used responsibly and doesn’t cause unintended harm.

Common safeguards include:

- Human oversight for important decisions.

- Testing for bias and safety risks – both before and after the AI is deployed.

- Transparency about how the AI works and what data it uses.

- Clear accountability so people know who’s responsible if something goes wrong.

Some safeguards may also be required by law or industry standards, especially for high-risk uses like hiring or lending.

How do we build trustworthy or responsible AI?

Building trustworthy or responsible AI means designing systems that people can rely on to work safely and fairly.

Key steps include:

- Using high-quality, representative data to reduce bias.

- Testing models for accuracy, fairness, and safety.

- Keeping humans involved in important decisions.

- Being transparent about how the AI works and what its limits are.

- Monitoring AI performance over time and fixing issues quickly.

The goal is to balance innovation with safeguards so AI stays aligned with human values and legal requirements.

How do we start implementing AI governance?

Begin by setting basic policies for how AI should be developed, used, and monitored. Identify who’s responsible for different parts of the AI lifecycle.

Then, inventory your AI systems, assess which ones pose higher risks or are in scope for different laws, and work towards documentation, testing, and oversight where they’re needed most.

It helps to start small and improve over time, making governance part of how teams build and use AI every day.

When should governance efforts ramp up?

A good rule of thumb is to tie governance levels to risk: the higher the stakes, the more robust your checks and controls should be.

For example, simple chatbots or internal tools may need only light oversight, while AI that affects people’s jobs, health, or finances may require more stringent oversight.

Do I need AI governance?

If your organization uses AI in ways that impact people or critical decisions, you probably need some level of AI governance.

It helps you manage risks, stay compliant with laws and standards, and build trust with customers and stakeholders. Even if you’re not legally required to have it yet, good AI governance can catch problems early, prevent costly mistakes, and show you’re acting responsibly.

What’s the difference between AI ethics and AI governance?

AI ethics is about the principles and values that guide how AI should be designed and used, like fairness, transparency, and accountability. AI governance describes how those principles are put into practice. It’s defined by the processes, policies, and tools you use to manage risks, ensure oversight, and meet compliance requirements.

In short, AI ethics typically captures the “why” and governance is the “how”.

What is an AI audit?

An AI audit is an independent check to see if an AI system is working as it should.

It looks at dimensions like accuracy, bias, fairness, or whether the system follows relevant laws, policies, and ethical guidelines. The goal is to find risks or issues, show that you’re managing them properly, and build trust with regulators, customers, and other stakeholders. And because it’s done independently, it avoids conflicts of interest.

Who’s liable when AI makes a mistake?

Liability can fall on the developer (builder), the deployer (user), or both, depending on the situation and the laws that apply.

Developers may be held responsible if there are flaws in how the AI was built, like biased training data or poor testing. Deployers (users) are usually responsible for how they use the AI in practice, including following instructions, monitoring performance, and making sure there’s proper human oversight. Clear contracts and good governance help both sides manage these risks.

How often should we reassess AI risk?

AI risks should be reassessed regularly. It’s not a one-and-done exercise before you launch the AI.

Best practice is to review risk at any of the following milestones:

- Before deploying

- Before major updates or retraining

- When the AI is used in a new context or by a new group

- On a fixed schedule (e.g. annually)

- If there’s evidence of harm, complaints, or performance issues
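
The milestones above can be expressed as a simple check that a governance team runs against each system in its inventory. This is a minimal sketch under stated assumptions: the `SystemStatus` fields, the reason strings, and the 365-day default interval are hypothetical and stand in for whatever your own program tracks.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List


@dataclass
class SystemStatus:
    last_review: date
    deployed: bool = True
    pending_major_update: bool = False   # includes planned retraining
    new_context_or_users: bool = False
    open_harm_reports: int = 0           # harm evidence, complaints, performance issues


def reassessment_reasons(
    status: SystemStatus,
    today: date,
    review_interval: timedelta = timedelta(days=365),  # assumed annual schedule
) -> List[str]:
    """Return every milestone that currently calls for a risk reassessment."""
    reasons = []
    if not status.deployed:
        reasons.append("pre-deployment review")
    if status.pending_major_update:
        reasons.append("major update or retraining")
    if status.new_context_or_users:
        reasons.append("new context or user group")
    if today - status.last_review >= review_interval:
        reasons.append("scheduled review due")
    if status.open_harm_reports > 0:
        reasons.append("evidence of harm, complaints, or performance issues")
    return reasons


status = SystemStatus(last_review=date(2024, 1, 1), pending_major_update=True)
print(reassessment_reasons(status, today=date(2025, 3, 1)))
```

An empty list means no trigger has fired yet. Any non-empty result is a prompt to schedule a review, not a substitute for one.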
